Examples of Amazon S3 bucket policies

With Amazon S3 bucket policies, you can secure access to objects in your buckets, so that only users with the appropriate permissions can access them. You can even prevent authenticated users without the appropriate permissions from accessing your Amazon S3 resources.

This section presents examples of typical use cases for bucket policies. These sample policies use amzn-s3-demo-bucket as the resource value. To test these policies, replace the user input placeholders with your own information (such as your bucket name).

To grant or deny permissions to a set of objects, you can use wildcard characters (*) in Amazon Resource Names (ARNs) and other values. For example, you can control access to groups of objects that begin with a common prefix or end with a specific extension, such as .html.

For more information about Amazon Identity and Access Management (IAM) policy language, see Policies and permissions in Amazon S3.

For more information about the permissions to S3 API operations by S3 resource types, see Required permissions for Amazon S3 API operations.

Note

When testing permissions by using the Amazon S3 console, you must grant additional permissions that the console requires: s3:ListAllMyBuckets, s3:GetBucketLocation, and s3:ListBucket. For an example walkthrough that grants permissions to users and tests those permissions by using the console, see Controlling access to a bucket with user policies.

Additional resources for creating bucket policies include the following:

- For a list of the IAM policy actions, resources, and condition keys that you can use when creating a bucket policy, see Actions, resources, and condition keys for Amazon S3 in the Service Authorization Reference.

- For more information about the permissions to S3 API operations by S3 resource types, see Required permissions for Amazon S3 API operations.

- For guidance on creating your S3 policy, see Adding a bucket policy by using the Amazon S3 console.

- To troubleshoot errors with a policy, see Troubleshoot access denied (403 Forbidden) errors in Amazon S3.

If you're having trouble adding or updating a policy, see Why do I get the error "Invalid principal in policy" when I try to update my Amazon S3 bucket policy?

Granting read-only permission to a public anonymous user

You can use your policy settings to grant access to public anonymous users, which is useful if you're configuring your bucket as a static website. Granting access to public anonymous users requires you to disable the Block Public Access settings for your bucket. For more information about how to do this, and the policy required, see Setting permissions for website access. To learn how to set up more restrictive policies for the same purpose, see How can I grant public read access to some objects in my Amazon S3 bucket?

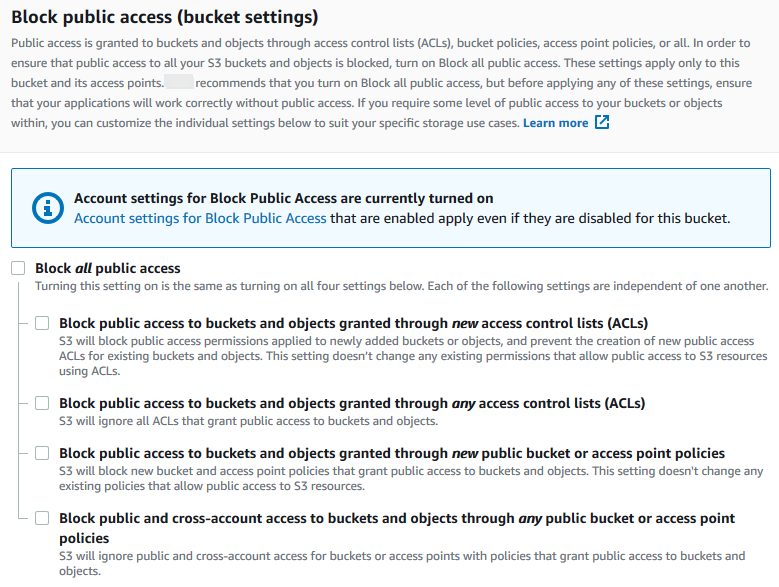

By default, Amazon S3 blocks public access to your account and buckets. If you want to use a bucket to host a static website, you can use these steps to edit your block public access settings.

Warning

Before you complete these steps, review Blocking public access to your Amazon S3 storage to ensure that you understand and accept the risks involved with allowing public access. When you turn off block public access settings to make your bucket public, anyone on the internet can access your bucket. We recommend that you block all public access to your buckets.

- Open the Amazon S3 console at https://console.amazonaws.cn/s3/.

- Choose the name of the bucket that you have configured as a static website.

- Choose Permissions.

- Under Block public access (bucket settings), choose Edit.

- Clear Block all public access, and choose Save changes.

Amazon S3 turns off the Block Public Access settings for your bucket. To create a public static website, you might also have to edit the Block Public Access settings for your account before adding a bucket policy. If the Block Public Access settings for your account are currently turned on, you see a note under Block public access (bucket settings).

Requiring encryption

You can require server-side encryption with Amazon Key Management Service (Amazon KMS) keys (SSE-KMS), as shown in the following examples.

Require SSE-KMS for all objects written to a bucket

The following example policy requires every object that is written to the bucket to be encrypted with server-side encryption using Amazon Key Management Service (Amazon KMS) keys (SSE-KMS). If the object isn't encrypted with SSE-KMS, the request is denied.
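A policy along these lines might look like the following sketch. It assumes the arn:aws-cn partition used elsewhere on this page and the placeholder bucket name amzn-s3-demo-bucket; the Id and Sid values are illustrative labels only. The Null condition matches any PutObject request that doesn't carry an SSE-KMS key ID header.

```json
{
  "Version": "2012-10-17",
  "Id": "PutObjPolicy",
  "Statement": [
    {
      "Sid": "DenyObjectsThatAreNotSSEKMS",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:PutObject",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/*",
      "Condition": {
        "Null": {
          "s3:x-amz-server-side-encryption-aws-kms-key-id": "true"
        }
      }
    }
  ]
}
```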

Require SSE-KMS with a specific Amazon KMS key for all objects written to a bucket

The following example policy denies any objects from being written to the bucket if they aren’t encrypted with SSE-KMS by using a specific KMS key ID. Even if the objects are encrypted with SSE-KMS by using a per-request header or bucket default encryption, the objects can't be written to the bucket if they haven't been encrypted with the specified KMS key. Make sure to replace the KMS key ARN that's used in this example with your own KMS key ARN.
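As a rough sketch of such a policy, the statement below denies PutObject unless the request names one particular key. The key ARN (Region cn-north-1, account 111122223333, and the key ID) is a made-up placeholder, as are the Id and Sid values; substitute your own KMS key ARN.

```json
{
  "Version": "2012-10-17",
  "Id": "PutObjPolicy",
  "Statement": [
    {
      "Sid": "DenyObjectsThatAreNotSSEKMSWithSpecificKey",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:PutObject",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/*",
      "Condition": {
        "ArnNotEqualsIfExists": {
          "s3:x-amz-server-side-encryption-aws-kms-key-id": "arn:aws-cn:kms:cn-north-1:111122223333:key/01234567-89ab-cdef-0123-456789abcdef"
        }
      }
    }
  ]
}
```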

Managing buckets using canned ACLs

Granting permissions to multiple accounts to upload objects or set object ACLs for public access

The following example policy grants the s3:PutObject and s3:PutObjectAcl permissions to multiple Amazon Web Services accounts. Also, the example policy requires that any requests for these operations must include the public-read canned access control list (ACL). For more information, see Policy actions for Amazon S3 and Policy condition keys for Amazon S3.
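The following sketch shows one way to express that grant. The two account IDs (111122223333 and 444455556666) and the Sid are placeholders chosen for illustration, and the arn:aws-cn partition is assumed.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AddPublicReadCannedAcl",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws-cn:iam::111122223333:root",
          "arn:aws-cn:iam::444455556666:root"
        ]
      },
      "Action": [
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/*",
      "Condition": {
        "StringEquals": {
          "s3:x-amz-acl": ["public-read"]
        }
      }
    }
  ]
}
```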

Warning

The public-read canned ACL allows anyone in the world to view the objects

in your bucket. Use caution when granting anonymous access to your Amazon S3 bucket or

disabling block public access settings. When you grant anonymous access, anyone in the

world can access your bucket. We recommend that you never grant anonymous access to your

Amazon S3 bucket unless you specifically need to, such as with static website hosting. If you want to enable block public access settings for

static website hosting, see Tutorial: Configuring a

static website on Amazon S3.

Grant cross-account permissions to upload objects while ensuring that the bucket owner has full control

The following example shows how to allow another Amazon Web Services account to upload objects to your bucket while ensuring that you have full control of the uploaded objects. This policy grants a specific Amazon Web Services account (111122223333) the ability to upload objects only if that account includes the bucket-owner-full-control canned ACL on upload. The StringEquals condition in the policy specifies the s3:x-amz-acl condition key to express the canned ACL requirement. For more information, see Policy condition keys for Amazon S3.
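A minimal sketch of the cross-account upload grant described above, assuming the uploading account is 111122223333 and the arn:aws-cn partition; the Sid is an illustrative label.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PolicyForAllowUploadWithACL",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws-cn:iam::111122223333:root"
      },
      "Action": "s3:PutObject",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/*",
      "Condition": {
        "StringEquals": {
          "s3:x-amz-acl": "bucket-owner-full-control"
        }
      }
    }
  ]
}
```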

Managing object access with object tagging

Allow a user to read only objects that have a specific tag key and value

The following permissions policy limits a user to only reading objects that have the

environment: production tag key and value. This policy uses the

s3:ExistingObjectTag condition key to specify the tag key and value.
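One possible shape for this policy is sketched below. The principal (a role named JohnDoe in account 111122223333) is a hypothetical placeholder; the tag key and value are appended to the s3:ExistingObjectTag condition key as environment and production.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowReadOfProductionTaggedObjects",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws-cn:iam::111122223333:role/JohnDoe"
      },
      "Action": [
        "s3:GetObject",
        "s3:GetObjectVersion"
      ],
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/*",
      "Condition": {
        "StringEquals": {
          "s3:ExistingObjectTag/environment": "production"
        }
      }
    }
  ]
}
```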

Restrict which object tag keys that users can add

The following example policy grants a user permission to perform the

s3:PutObjectTagging action, which allows a user to add tags to an existing

object. The condition uses the s3:RequestObjectTagKeys condition key to specify

the allowed tag keys, such as Owner or CreationDate. For more

information, see Creating a

condition that tests multiple key values in the IAM User Guide.

The policy ensures that every tag key specified in the request is an authorized tag key.

The ForAnyValue qualifier in the condition ensures that at least one of the

specified keys must be present in the request.

Require a specific tag key and value when allowing users to add object tags

The following example policy grants a user permission to perform the s3:PutObjectTagging action, which allows a user to add tags to an existing object. The condition requires the user to include a specific tag key (such as Project) with the value set to X.

Allow a user to only add objects with a specific object tag key and value

The following example policy grants a user permission to perform the s3:PutObject action so that they can add objects to a bucket. However, the Condition statement restricts the tag keys and values that are allowed on the uploaded objects. In this example, the user can only add objects that have the specific tag key (Department) with the value set to Finance.
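The upload restriction might be written as in the sketch below, which assumes the tag key Department and value Finance and uses the s3:RequestObjectTag condition key. The user ARN (JohnDoe in account 111122223333) and the Sid are placeholders for illustration.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowUploadOfFinanceTaggedObjects",
      "Effect": "Allow",
      "Principal": {
        "AWS": ["arn:aws-cn:iam::111122223333:user/JohnDoe"]
      },
      "Action": ["s3:PutObject"],
      "Resource": ["arn:aws-cn:s3:::amzn-s3-demo-bucket/*"],
      "Condition": {
        "StringEquals": {
          "s3:RequestObjectTag/Department": "Finance"
        }
      }
    }
  ]
}
```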

Managing object access by using global condition keys

Global condition

keys are condition context keys with an aws prefix. Amazon Web Services services can

support global condition keys or service-specific keys that include the service prefix. You

can use the Condition element of a JSON policy to compare the keys in a request

with the key values that you specify in your policy.

Restrict access to only Amazon S3 server access log deliveries

In the following example bucket policy, the aws:SourceArn global condition key is used to compare the Amazon Resource Name (ARN) of the resource that is making a service-to-service request with the ARN that is specified in the policy. The aws:SourceArn global condition key is used to prevent the Amazon S3 service from being used as a confused deputy during transactions between services. Only the Amazon S3 service is allowed to add objects to the Amazon S3 bucket.

This example bucket policy grants s3:PutObject permissions to only the

logging service principal (logging.s3.amazonaws.com).

Allow access to only your organization

If you want to require all IAM

principals accessing a resource to be from an Amazon Web Services account in your organization

(including the Amazon Organizations management account), you can use the aws:PrincipalOrgID

global condition key.

To grant or restrict this type of access, define the aws:PrincipalOrgID

condition and set the value to your organization ID

in the bucket policy. The organization ID is used to control access to the bucket. When you

use the aws:PrincipalOrgID condition, the permissions from the bucket policy

are also applied to all new accounts that are added to the organization.

Here’s an example of a resource-based bucket policy that you can use to grant specific

IAM principals in your organization direct access to your bucket. By adding the

aws:PrincipalOrgID global condition key to your bucket policy, the principal

account is now required to be in your organization to obtain access to the resource. Even if

you accidentally specify an incorrect account when granting access, the aws:PrincipalOrgID global condition key acts as an additional

safeguard. When this global key is used in a policy, it prevents all principals from outside

of the specified organization from accessing the S3 bucket. Only principals from accounts in

the listed organization are able to obtain access to the resource.
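As an illustration, the sketch below allows s3:GetObject to any principal, but only when that principal belongs to the organization named in the condition. The organization ID o-aa111bb222 is an invented placeholder, and the Sid is an illustrative label.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowGetObject",
      "Effect": "Allow",
      "Principal": {
        "AWS": "*"
      },
      "Action": "s3:GetObject",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/*",
      "Condition": {
        "StringEquals": {
          "aws:PrincipalOrgID": ["o-aa111bb222"]
        }
      }
    }
  ]
}
```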

Managing access based on HTTP or HTTPS requests

Restrict access to only HTTPS requests

If you want to prevent potential attackers from manipulating network traffic, you can use HTTPS (TLS) to only allow encrypted connections while restricting HTTP requests from accessing your bucket. To determine whether the request is HTTP or HTTPS, use the aws:SecureTransport global condition key in your S3 bucket policy. The aws:SecureTransport condition key checks whether a request was sent by using HTTPS (TLS).

If the key evaluates to true, then the request was sent through HTTPS. If the key evaluates to false, then the request was sent through HTTP. You can then allow or deny access to your bucket based on the desired request scheme.

In the following example, the bucket policy explicitly denies HTTP requests.
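A sketch of such a deny statement follows. It covers both the bucket and its objects and applies to every principal; the Sid is an illustrative label and the arn:aws-cn partition is assumed.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "RestrictToTLSRequestsOnly",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": [
        "arn:aws-cn:s3:::amzn-s3-demo-bucket",
        "arn:aws-cn:s3:::amzn-s3-demo-bucket/*"
      ],
      "Condition": {
        "Bool": {
          "aws:SecureTransport": "false"
        }
      }
    }
  ]
}
```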

Restrict access to a specific HTTP referer

Suppose that you have a website with the domain name www.example.com or example.com that links to objects stored in your bucket named amzn-s3-demo-bucket.

To allow read access to these objects from your website, you can add a bucket policy

that allows the s3:GetObject permission with a condition that the

GET request must originate from specific webpages. The following policy

restricts requests by using the StringLike condition with the

aws:Referer condition key.
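A referer-based grant could be sketched as follows. The URL patterns for www.example.com and example.com are assumptions based on the domain names mentioned above, and the Id and Sid values are illustrative.

```json
{
  "Version": "2012-10-17",
  "Id": "HTTPRefererPolicyExample",
  "Statement": [
    {
      "Sid": "AllowGetRequestsOriginatingFromExampleCom",
      "Effect": "Allow",
      "Principal": "*",
      "Action": [
        "s3:GetObject",
        "s3:GetObjectVersion"
      ],
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/*",
      "Condition": {
        "StringLike": {
          "aws:Referer": [
            "http://www.example.com/*",
            "http://example.com/*"
          ]
        }
      }
    }
  ]
}
```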

Make sure that the browsers that you use include the HTTP referer header in

the request.

Warning

We recommend that you use caution when using the aws:Referer condition

key. It is dangerous to include a publicly known HTTP referer header value. Unauthorized

parties can use modified or custom browsers to provide any aws:Referer value

that they choose. Therefore, do not use aws:Referer to prevent unauthorized

parties from making direct Amazon requests.

The aws:Referer condition key is offered only to allow customers to

protect their digital content, such as content stored in Amazon S3, from being referenced on

unauthorized third-party sites. For more information, see aws:Referer in the

IAM User Guide.

Managing user access to specific folders

Grant users access to specific folders

Suppose that you're trying to grant users access to a specific folder. If the IAM user and the S3 bucket belong to the same Amazon Web Services account, then you can use an IAM policy to grant the user access to a specific bucket folder. With this approach, you don't need to update your bucket policy to grant access. You can add the IAM policy to an IAM role that multiple users can switch to.

If the IAM identity and the S3 bucket belong to different Amazon Web Services accounts, then you must grant cross-account access in both the IAM policy and the bucket policy. For more information about granting cross-account access, see Bucket owner granting cross-account bucket permissions.

The following example bucket policy grants JohnDoe full console access to only his folder (home/JohnDoe/). By creating a home folder and granting the appropriate permissions to your users, you can have multiple users share a single bucket. This policy consists of three Allow statements:

- AllowRootAndHomeListingOfCompanyBucket: Allows the user (JohnDoe) to list objects at the root level of the amzn-s3-demo-bucket bucket and in the home folder. This statement also allows the user to search on the prefix home/ by using the console.

- AllowListingOfUserFolder: Allows the user (JohnDoe) to list all objects in the home/JohnDoe/ folder and any subfolders.

- AllowAllS3ActionsInUserFolder: Allows the user to perform all Amazon S3 actions by granting Read, Write, and Delete permissions. Permissions are limited to the bucket owner's home folder.
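The three statements named above could be written roughly as in this sketch. The user ARN (JohnDoe in account 111122223333) is a placeholder, and the exact prefix and delimiter values are assumptions about how the home/JohnDoe/ layout would be expressed with the s3:prefix and s3:delimiter condition keys.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowRootAndHomeListingOfCompanyBucket",
      "Effect": "Allow",
      "Principal": {
        "AWS": ["arn:aws-cn:iam::111122223333:user/JohnDoe"]
      },
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws-cn:s3:::amzn-s3-demo-bucket"],
      "Condition": {
        "StringEquals": {
          "s3:prefix": ["", "home/", "home/JohnDoe"],
          "s3:delimiter": ["/"]
        }
      }
    },
    {
      "Sid": "AllowListingOfUserFolder",
      "Effect": "Allow",
      "Principal": {
        "AWS": ["arn:aws-cn:iam::111122223333:user/JohnDoe"]
      },
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws-cn:s3:::amzn-s3-demo-bucket"],
      "Condition": {
        "StringLike": {
          "s3:prefix": ["home/JohnDoe/*"]
        }
      }
    },
    {
      "Sid": "AllowAllS3ActionsInUserFolder",
      "Effect": "Allow",
      "Principal": {
        "AWS": ["arn:aws-cn:iam::111122223333:user/JohnDoe"]
      },
      "Action": ["s3:*"],
      "Resource": ["arn:aws-cn:s3:::amzn-s3-demo-bucket/home/JohnDoe/*"]
    }
  ]
}
```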

Managing access for access logs

Grant access to Application Load Balancer for enabling access logs

When you enable access logs for Application Load Balancer, you must specify the name of the S3 bucket where the load balancer will store the logs. The bucket must have an attached policy that grants ELB permission to write to the bucket.

In the following example, the bucket policy grants Elastic Load Balancing (ELB) permission to write the access logs to the bucket:

Note

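Make sure to replace elb-account-id with the Amazon Web Services account ID for Elastic Load Balancing for your Amazon Web Services Region.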

If your Amazon Web Services Region does not appear in the supported ELB Regions list, use the following policy, which grants permissions to the specified log delivery service.

Then, make sure to configure your ELB access logs by enabling them. You can verify your bucket permissions by creating a test file.

Managing access to an Amazon CloudFront OAI

Grant permission to an Amazon CloudFront OAI

The following example bucket policy grants a CloudFront origin access identity (OAI) permission to get (read) all objects in your S3 bucket. You can use a CloudFront OAI to allow users to access objects in your bucket through CloudFront but not directly through Amazon S3. For more information, see Restricting access to Amazon S3 content by using an Origin Access Identity in the Amazon CloudFront Developer Guide.

The following policy uses the OAI's ID as the policy's Principal. For more

information about using S3 bucket policies to grant access to a CloudFront OAI, see Migrating from origin access identity (OAI) to origin access control (OAC) in the

Amazon CloudFront Developer Guide.
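A sketch of an OAI grant is shown below. The OAI ID EH1HDMB1FH2TC and the bucket name are placeholders, the principal ARN format assumes the arn:aws-cn partition used on this page, and the Id is an illustrative label.

```json
{
  "Version": "2012-10-17",
  "Id": "PolicyForCloudFrontPrivateContent",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws-cn:iam::cloudfront:user/CloudFront Origin Access Identity EH1HDMB1FH2TC"
      },
      "Action": "s3:GetObject",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/*"
    }
  ]
}
```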

To use this example:

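- Replace EH1HDMB1FH2TC with the OAI's ID. To find the OAI's ID, see the Origin Access Identity page on the CloudFront console, or use ListCloudFrontOriginAccessIdentities in the CloudFront API.

- Replace amzn-s3-demo-bucket with the name of your bucket.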

Managing access for Amazon S3 Storage Lens

Grant permissions for Amazon S3 Storage Lens

S3 Storage Lens aggregates your metrics and displays the information in the Account snapshot section on the Amazon S3 console Buckets page. S3 Storage Lens also provides an interactive dashboard that you can use to visualize insights and trends, flag outliers, and receive recommendations for optimizing storage costs and applying data protection best practices. Your dashboard has drill-down options to generate and visualize insights at the organization, account, Amazon Web Services Region, storage class, bucket, prefix, or Storage Lens group level. You can also send a daily metrics report in CSV or Parquet format to a general purpose S3 bucket or export the metrics directly to an Amazon-managed S3 table bucket.

S3 Storage Lens can export your aggregated storage usage metrics to an Amazon S3 bucket for further analysis. The bucket where S3 Storage Lens places its metrics exports is known as the destination bucket. When setting up your S3 Storage Lens metrics export, you must have a bucket policy for the destination bucket. For more information, see Monitoring your storage activity and usage with Amazon S3 Storage Lens.

The following example bucket policy grants Amazon S3 permission to write objects

(PUT requests) to a destination bucket. You use a bucket policy like this on

the destination bucket when setting up an S3 Storage Lens metrics export.

When you're setting up an S3 Storage Lens organization-level metrics export, use the following

modification to the previous bucket policy's Resource statement.

"Resource": "arn:aws-cn:s3:::amzn-s3-demo-destination-bucket/destination-prefix/StorageLens/your-organization-id/*",

Managing permissions for S3 Inventory, S3 analytics, and S3 Inventory reports

Grant permissions for S3 Inventory and S3 analytics

S3 Inventory creates lists of the objects in a bucket, and S3 analytics Storage Class Analysis export creates output files of the data used in the analysis. The bucket that the inventory lists the objects for is called the source bucket. The bucket where the inventory file or the analytics export file is written to is called a destination bucket. When setting up an inventory or an analytics export, you must create a bucket policy for the destination bucket. For more information, see Cataloging and analyzing your data with S3 Inventory and Amazon S3 analytics – Storage Class Analysis.

The following example bucket policy grants Amazon S3 permission to write objects

(PUT requests) from the account for the source bucket to the destination

bucket. You use a bucket policy like this on the destination bucket when setting up S3

Inventory and S3 analytics export.
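A destination-bucket policy of this kind might look like the sketch below. The source account ID 111122223333, the bucket names, and the Sid are placeholders; the conditions assume that the delivery should be tied to one source bucket and account and should carry the bucket-owner-full-control ACL.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "InventoryAndAnalyticsExamplePolicy",
      "Effect": "Allow",
      "Principal": {
        "Service": "s3.amazonaws.com"
      },
      "Action": "s3:PutObject",
      "Resource": ["arn:aws-cn:s3:::amzn-s3-demo-destination-bucket/*"],
      "Condition": {
        "ArnLike": {
          "aws:SourceArn": "arn:aws-cn:s3:::amzn-s3-demo-source-bucket"
        },
        "StringEquals": {
          "aws:SourceAccount": "111122223333",
          "s3:x-amz-acl": "bucket-owner-full-control"
        }
      }
    }
  ]
}
```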

Control S3 Inventory report configuration creation

Cataloging and analyzing your data with S3 Inventory creates lists of

the objects in an S3 bucket and the metadata for each object. The

s3:PutInventoryConfiguration permission allows a user to create an inventory

configuration that includes all object metadata fields that are available by default and to

specify the destination bucket to store the inventory. A user with read access to objects in

the destination bucket can access all object metadata fields that are available in the

inventory report. For more information about the metadata fields that are available in S3

Inventory, see Amazon S3 Inventory list.

To restrict a user from configuring an S3 Inventory report, remove the

s3:PutInventoryConfiguration permission from the user.

Some object metadata fields in S3 Inventory report configurations are optional, meaning

that they're available by default but they can be restricted when you grant a user the

s3:PutInventoryConfiguration permission. You can control whether users can

include these optional metadata fields in their reports by using the

s3:InventoryAccessibleOptionalFields condition key. For a list of the

optional metadata fields available in S3 Inventory, see OptionalFields in the Amazon Simple Storage Service API Reference.

To grant a user permission to create an inventory configuration with specific optional

metadata fields, use the s3:InventoryAccessibleOptionalFields condition key to

refine the conditions in your bucket policy.

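The following example policy grants a user (Ana) permission to create an inventory configuration conditionally. The ForAllValues:StringEquals condition in the policy uses the s3:InventoryAccessibleOptionalFields condition key to specify the two allowed optional metadata fields, namely Size and StorageClass. So, when Ana is creating an inventory configuration, the only optional metadata fields that she can include are Size and StorageClass.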
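The conditional grant for Ana could be sketched as follows, mirroring the structure of the Deny example later in this section. The account ID, the source bucket name, and the Id and Sid values are placeholders.

```json
{
  "Id": "InventoryConfigPolicy",
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowInventoryCreationConditionally",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws-cn:iam::111122223333:user/Ana"
      },
      "Action": "s3:PutInventoryConfiguration",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-source-bucket",
      "Condition": {
        "ForAllValues:StringEquals": {
          "s3:InventoryAccessibleOptionalFields": [
            "Size",
            "StorageClass"
          ]
        }
      }
    }
  ]
}
```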

To restrict a user from configuring an S3 Inventory report that includes specific optional metadata fields, add an explicit Deny statement to the bucket policy for the source bucket. The following example bucket policy denies the user Ana from creating an inventory configuration in the source bucket amzn-s3-demo-source-bucket that includes the optional ObjectAccessControlList or ObjectOwner metadata fields. The user Ana can still create an inventory configuration with other optional metadata fields.

{
  "Id": "InventoryConfigSomeFields",
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowInventoryCreation",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws-cn:iam::111122223333:user/Ana"
      },
      "Action": "s3:PutInventoryConfiguration",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-source-bucket"
    },
    {
      "Sid": "DenyCertainInventoryFieldCreation",
      "Effect": "Deny",
      "Principal": {
        "AWS": "arn:aws-cn:iam::111122223333:user/Ana"
      },
      "Action": "s3:PutInventoryConfiguration",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-source-bucket",
      "Condition": {
        "ForAnyValue:StringEquals": {
          "s3:InventoryAccessibleOptionalFields": [
            "ObjectOwner",
            "ObjectAccessControlList"
          ]
        }
      }
    }
  ]
}

Note

The use of the s3:InventoryAccessibleOptionalFields condition key in

bucket policies doesn't affect the delivery of inventory reports based on the existing

inventory configurations.

Important

We recommend that you use ForAllValues with an Allow effect

or ForAnyValue with a Deny effect, as shown in the prior

examples.

Don't use ForAllValues with a Deny effect nor

ForAnyValue with an Allow effect, because these combinations

can be overly restrictive and block inventory configuration deletion.

To learn more about the ForAllValues and ForAnyValue

condition set operators, see Multivalued context keys in the IAM User Guide.

Requiring MFA

Amazon S3 supports MFA-protected API access, a feature that can enforce multi-factor

authentication (MFA) for access to your Amazon S3 resources. Multi-factor authentication provides

an extra level of security that you can apply to your Amazon environment. MFA is a security

feature that requires users to prove physical possession of an MFA device by providing a valid

MFA code. For more information, see Amazon Multi-Factor Authentication.

To enforce the MFA requirement, use the aws:MultiFactorAuthAge condition key

in a bucket policy. IAM users can access Amazon S3 resources by using temporary credentials

issued by the Amazon Security Token Service (Amazon STS). You provide the MFA code at the time of the Amazon STS

request.

When Amazon S3 receives a request with multi-factor authentication, the

aws:MultiFactorAuthAge condition key provides a numeric value that indicates

how long ago (in seconds) the temporary credential was created. If the temporary credential

provided in the request was not created by using an MFA device, this key value is null

(absent). In a bucket policy, you can add a condition to check this value, as shown in the

following example.

This example policy denies any Amazon S3 operation on the /taxdocuments folder in the amzn-s3-demo-bucket bucket if the request is not authenticated by using MFA.
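A minimal sketch of an MFA requirement on the taxdocuments folder, assuming the arn:aws-cn partition; the Sid is an illustrative label. The Null test is true when aws:MultiFactorAuthAge is absent from the request.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyTaxDocumentsAccessWithoutMFA",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/taxdocuments/*",
      "Condition": {
        "Null": {
          "aws:MultiFactorAuthAge": "true"
        }
      }
    }
  ]
}
```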

The Null condition in the Condition block evaluates to

true if the aws:MultiFactorAuthAge condition key value is null,

indicating that the temporary security credentials in the request were created without an MFA

device.

The following bucket policy is an extension of the preceding bucket policy. The following policy includes two policy statements. One statement allows the s3:GetObject permission on a bucket (amzn-s3-demo-bucket) to everyone. Another statement further restricts access to the amzn-s3-demo-bucket/taxdocuments folder in the bucket by requiring MFA.

You can optionally use a numeric condition to limit the duration for which the

aws:MultiFactorAuthAge key is valid. The duration that you specify with the

aws:MultiFactorAuthAge key is independent of the lifetime of the temporary

security credential that's used in authenticating the request.

For example, the following bucket policy, in addition to requiring MFA authentication,

also checks how long ago the temporary session was created. The policy denies any operation if

the aws:MultiFactorAuthAge key value indicates that the temporary session was

created more than an hour ago (3,600 seconds).
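The age check can be layered on top of the MFA requirement, as in this sketch. It applies both conditions to the taxdocuments folder used in the earlier MFA example; the Sid values are illustrative labels. The second statement denies requests whose MFA-authenticated session is older than 3,600 seconds.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyAccessWithoutMFA",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/taxdocuments/*",
      "Condition": {
        "Null": {
          "aws:MultiFactorAuthAge": "true"
        }
      }
    },
    {
      "Sid": "DenyAccessWhenMFAOlderThanOneHour",
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:*",
      "Resource": "arn:aws-cn:s3:::amzn-s3-demo-bucket/taxdocuments/*",
      "Condition": {
        "NumericGreaterThan": {
          "aws:MultiFactorAuthAge": 3600
        }
      }
    }
  ]
}
```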

Preventing users from deleting objects

By default, users have no permissions. But as you create policies, you might grant users permissions that you didn't intend to grant. To avoid such permission loopholes, you can write a stricter access policy by adding an explicit deny.

To explicitly block users or accounts from deleting objects, you must deny the following actions in a bucket policy: s3:DeleteObject, s3:DeleteObjectVersion, and s3:PutLifecycleConfiguration. All three actions are required because you can delete objects either by explicitly calling the DELETE Object API operations or by configuring their lifecycle (see Managing the lifecycle of objects) so that Amazon S3 can remove the objects when their lifetime expires.

In the following policy example, you explicitly deny DELETE Object permissions to the user MaryMajor. An explicit Deny statement always supersedes any other permission granted.
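An explicit deny covering the three delete-related actions might be sketched as follows. The account ID 111122223333 and the Sid are placeholders; the resource list includes both the bucket (for the lifecycle action) and its objects (for the delete actions).

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyObjectDeletion",
      "Effect": "Deny",
      "Principal": {
        "AWS": "arn:aws-cn:iam::111122223333:user/MaryMajor"
      },
      "Action": [
        "s3:DeleteObject",
        "s3:DeleteObjectVersion",
        "s3:PutLifecycleConfiguration"
      ],
      "Resource": [
        "arn:aws-cn:s3:::amzn-s3-demo-bucket",
        "arn:aws-cn:s3:::amzn-s3-demo-bucket/*"
      ]
    }
  ]
}
```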