Use the awslogs log driver

By default, Amazon Batch enables the awslogs log driver to send log information to CloudWatch Logs. You can use this feature to view different logs from your containers in one convenient location and prevent your container logs from taking up disk space on your container instances. This topic helps you configure the awslogs log driver in your job definitions.

Note

In the Amazon Batch console, you can configure the awslogs log driver in the Logging configuration section when you create a job definition.

Note

The type of information that's logged by the containers in your job depends mostly on their ENTRYPOINT command. By default, the captured logs show the command output that you would normally see in an interactive terminal if you ran the container locally, which are the STDOUT and STDERR I/O streams. The awslogs log driver simply passes these logs from Docker to CloudWatch Logs. For more information about how Docker logs are processed, including alternative ways to capture different file data or streams, see View logs for a container or service in the Docker documentation.

To send system logs from your container instances to CloudWatch Logs, see Using CloudWatch Logs with Amazon Batch. For more information about CloudWatch Logs, see Monitoring Log Files and CloudWatch Logs quotas in the Amazon CloudWatch Logs User Guide.

awslogs log driver options in the Amazon Batch JobDefinition data type

The awslogs log driver supports the following options in Amazon Batch job definitions. For more information, see CloudWatch Logs logging driver in the Docker documentation.

awslogs-region

Required: No

Specify the Region where the awslogs log driver should send your Docker logs. By default, the Region that's used is the same Region as the one for the job. You can choose to send all of your logs from jobs in different Regions to a single Region in CloudWatch Logs so that they're all visible from one location. Alternatively, you can separate them by Region for a more granular approach. However, when you choose this option, make sure that the specified log group exists in the Region that you specified.

awslogs-group

Required: No

With the awslogs-group option, you can specify the log group that the awslogs log driver sends its log streams to. If this isn't specified, aws/batch/job is used.

awslogs-stream-prefix

Required: No

With the awslogs-stream-prefix option, you can associate a log stream with the specified prefix and the Amazon ECS task ID of the Amazon Batch job that the container belongs to. If you specify a prefix with this option, then the log stream takes the following format: prefix-name/default/ecs-task-id

awslogs-datetime-format

Required: No

This option defines a multiline start pattern in Python strftime format. A log message consists of a line that matches the pattern and any following lines that don't match the pattern. Thus, the matched line is the delimiter between log messages.

One example of a use case for this format is parsing output such as a stack dump, which might otherwise be logged in multiple entries. The correct pattern allows it to be captured in a single entry.

For more information, see awslogs-datetime-format in the Docker documentation. This option always takes precedence if both awslogs-datetime-format and awslogs-multiline-pattern are configured.

Note

Multiline logging performs regular expression parsing and matching of all log messages. This might have a negative impact on logging performance.
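To make the start-pattern idea concrete, the following is a minimal sketch of how a strftime-style format could be turned into a regular expression that marks the first line of each log message. It is not the driver's actual translation table: the `STRFTIME_TO_REGEX` mapping, the function names, and the sample log lines are all assumptions made for illustration, and only a handful of directives are covered.

```python
import re

# Hypothetical, simplified mapping from a few strftime directives to regex
# fragments. The real driver recognizes more directives than are listed here.
STRFTIME_TO_REGEX = {
    "%Y": r"\d{4}",
    "%m": r"\d{2}",
    "%d": r"\d{2}",
    "%H": r"\d{2}",
    "%M": r"\d{2}",
    "%S": r"\d{2}",
}


def datetime_format_to_regex(fmt):
    """Translate a strftime-style format into a compiled regex.

    Directives that are not in STRFTIME_TO_REGEX are treated as literal
    text in this sketch.
    """
    parts = []
    i = 0
    while i < len(fmt):
        token = fmt[i:i + 2]
        if token in STRFTIME_TO_REGEX:
            parts.append(STRFTIME_TO_REGEX[token])
            i += 2
        else:
            parts.append(re.escape(fmt[i]))
            i += 1
    return re.compile("".join(parts))


def message_start_lines(lines, fmt):
    """Return the indexes of the lines that would start a new log message."""
    pattern = datetime_format_to_regex(fmt)
    return [index for index, line in enumerate(lines) if pattern.search(line)]


sample = [
    "2024-05-01 10:00:00 ERROR job failed",
    "Traceback (most recent call last):",
    '  File "app.py", line 3, in <module>',
    "2024-05-01 10:00:01 INFO retrying",
]
starts = message_start_lines(sample, "%Y-%m-%d %H:%M:%S")
```

In this sample, only the two timestamped lines match, so the traceback lines would be attached to the first message rather than logged as separate entries.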

awslogs-multiline-pattern

Required: No

This option defines a multiline start pattern using a regular expression. A log message consists of a line that matches the pattern and any following lines that don't match the pattern. Thus, the matched line is the delimiter between log messages.

For more information, see awslogs-multiline-pattern in the Docker documentation. This option is ignored if awslogs-datetime-format is also configured.

Note

Multiline logging performs regular expression parsing and matching of all log messages. This might have a negative impact on logging performance.
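The grouping rule described for awslogs-multiline-pattern can be sketched in a few lines of Python. This is only an illustration of the rule as stated in this topic, not the driver's implementation; the function name `group_multiline`, the sample pattern, and the sample lines are hypothetical, and the handling of lines that appear before the first match is an assumption.

```python
import re


def group_multiline(lines, start_pattern):
    """Group raw lines into log messages.

    A line that matches start_pattern begins a new message. Lines that
    don't match are appended to the current message. Assumption: lines
    seen before the first match form a message of their own.
    """
    start = re.compile(start_pattern)
    messages = []
    for line in lines:
        if start.search(line) or not messages:
            messages.append([line])
        else:
            messages[-1].append(line)
    return ["\n".join(message) for message in messages]


raw_lines = [
    "ERROR job failed",
    "  at step_one()",
    "  at main()",
    "INFO retrying",
]
grouped = group_multiline(raw_lines, r"^(INFO|ERROR)")
```

With this input, the three lines of the error and its stack trace become one message, and the INFO line becomes a second message.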

awslogs-create-group

Required: No

Specify whether you want the log group automatically created. If this option isn't specified, it defaults to false.

Warning

This option isn't recommended. We recommend that you create the log group in advance using the CloudWatch Logs CreateLogGroup API action. Otherwise, each job tries to create the log group, which increases the chance that the job fails.

Note

The IAM policy for your execution role must include the logs:CreateLogGroup permission before you attempt to use awslogs-create-group.
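As an alternative to awslogs-create-group, the log group can be created once before any job runs. The following is a minimal sketch of that approach, assuming a boto3 CloudWatch Logs client is passed in. The helper name `ensure_log_group` is hypothetical, and the Region and log group name in the usage comment are placeholders.

```python
def ensure_log_group(logs_client, group_name):
    """Create the log group ahead of time.

    Returns True if the group was created and False if it already existed.
    logs_client is expected to behave like boto3.client("logs").
    """
    try:
        logs_client.create_log_group(logGroupName=group_name)
        return True
    except logs_client.exceptions.ResourceAlreadyExistsException:
        # The group is already there, so jobs can log to it as is.
        return False


# Example usage (requires boto3 and AWS credentials; values are placeholders):
#   import boto3
#   logs = boto3.client("logs", region_name="us-east-1")
#   ensure_log_group(logs, "awslogs-wordpress")
```

Running this once during setup means that individual jobs never race to create the group, and the execution role doesn't need the logs:CreateLogGroup permission for that purpose.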

Specify a log configuration in your job definition

By default, Amazon Batch enables the awslogs log driver. This section describes how to customize the awslogs log configuration for a job. For more information, see Create a single-node job definition.

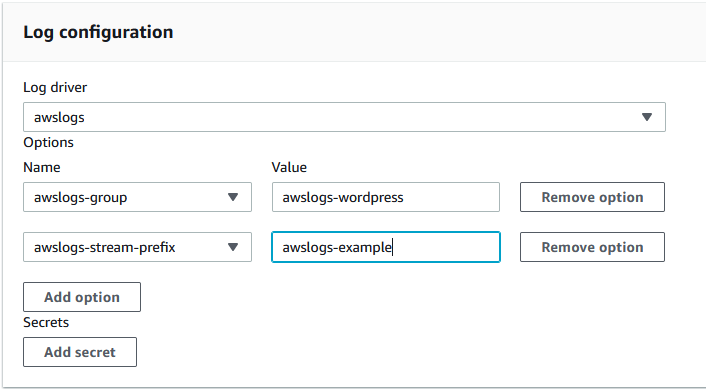

The following log configuration JSON snippets have a logConfiguration object specified for each job. One is for a WordPress job that sends logs to a log group called awslogs-wordpress, and another is for a MySQL container that sends logs to a log group called awslogs-mysql. Both containers use the awslogs-example log stream prefix.

"logConfiguration": {
    "logDriver": "awslogs",
    "options": {
        "awslogs-group": "awslogs-wordpress",
        "awslogs-stream-prefix": "awslogs-example"
    }
}

"logConfiguration": {
    "logDriver": "awslogs",
    "options": {
        "awslogs-group": "awslogs-mysql",
        "awslogs-stream-prefix": "awslogs-example"
    }
}

In the Amazon Batch console, the log configuration for the wordpress job definition is specified as shown in the following image.
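The two logConfiguration objects above differ only in their log group, so they can be generated from one helper. The sketch below also shows how the shared prefix would appear in a log stream name, following the prefix-name/default/ecs-task-id format given earlier in this topic. The helper names and the task ID value are hypothetical.

```python
import json


def awslogs_configuration(group, stream_prefix):
    """Build a logConfiguration object for the awslogs log driver."""
    return {
        "logDriver": "awslogs",
        "options": {
            "awslogs-group": group,
            "awslogs-stream-prefix": stream_prefix,
        },
    }


def expected_stream_name(stream_prefix, ecs_task_id):
    """Format documented in this topic: prefix-name/default/ecs-task-id."""
    return f"{stream_prefix}/default/{ecs_task_id}"


wordpress_logs = awslogs_configuration("awslogs-wordpress", "awslogs-example")
mysql_logs = awslogs_configuration("awslogs-mysql", "awslogs-example")

# Render the object as JSON, ready to paste into a job definition.
wordpress_json = json.dumps({"logConfiguration": wordpress_logs}, indent=4)

# "0123456789abcdef" is a placeholder, not a real Amazon ECS task ID.
stream_name = expected_stream_name("awslogs-example", "0123456789abcdef")
```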

After you register a job definition that uses the awslogs log driver in its log configuration, you can submit a job with that job definition to start sending logs to CloudWatch Logs. For more information, see Tutorial: submit a job.
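The register-then-submit flow can also be scripted. The following is a minimal sketch that assumes a boto3 Batch client is passed in. The function name, the job and job definition names, the container image, and the resource values are all placeholders chosen for illustration, and the job queue must already exist.

```python
def register_and_submit(batch_client, job_queue):
    """Register a job definition that uses awslogs, then submit one job.

    batch_client is expected to behave like boto3.client("batch").
    Returns the ID of the submitted job.
    """
    container_properties = {
        "image": "wordpress",  # placeholder image name
        "resourceRequirements": [
            {"type": "VCPU", "value": "1"},
            {"type": "MEMORY", "value": "2048"},
        ],
        "logConfiguration": {
            "logDriver": "awslogs",
            "options": {
                "awslogs-group": "awslogs-wordpress",
                "awslogs-stream-prefix": "awslogs-example",
            },
        },
    }
    registered = batch_client.register_job_definition(
        jobDefinitionName="wordpress-awslogs",  # placeholder name
        type="container",
        containerProperties=container_properties,
    )
    submitted = batch_client.submit_job(
        jobName="wordpress-awslogs-test",  # placeholder name
        jobQueue=job_queue,
        jobDefinition=registered["jobDefinitionArn"],
    )
    return submitted["jobId"]


# Example usage (requires boto3, AWS credentials, and an existing job queue):
#   import boto3
#   job_id = register_and_submit(boto3.client("batch"), "my-job-queue")
```

After the job starts, its output should appear in the awslogs-wordpress log group under a stream whose name begins with the awslogs-example prefix.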