Kinesis Video Streams API and producer libraries support

Kinesis Video Streams provides APIs for you to create and manage streams and to read media data from, or write media data to, a stream. In addition to administration functionality, the Kinesis Video Streams console supports live and video-on-demand playback. Kinesis Video Streams also provides a set of producer libraries that you can use in your application code to extract data from your media sources and upload it to your Kinesis video stream.

Kinesis Video Streams API

Kinesis Video Streams provides APIs for creating and managing Kinesis video streams. It also provides APIs for reading media data from and writing media data to a stream, as follows:

- Producer API – Kinesis Video Streams provides a PutMedia API to write media data to a Kinesis video stream. In a PutMedia request, the producer sends a stream of media fragments. A fragment is a self-contained sequence of frames: the frames in a fragment should have no dependency on any frames in other fragments. For more information, see PutMedia.

  As fragments arrive, Kinesis Video Streams assigns each one a unique fragment number, in increasing order. It also stores producer-side and server-side timestamps for each fragment as Kinesis Video Streams-specific metadata.

- Consumer APIs – Consumers can use the following APIs to get data from a stream:

  - GetMedia – When using this API, consumers must identify the starting fragment. The API then returns fragments in the order in which they were added to the stream (in increasing order by fragment number). The media data in the fragments is packed into a structured format such as Matroska (MKV). For more information, see GetMedia.

    Note: GetMedia knows where the fragments are (archived in the data store or available in real time). For example, if GetMedia determines that the starting fragment is archived, it starts returning fragments from the data store. When it must return newer fragments that aren't archived yet, GetMedia switches to reading fragments from an in-memory stream buffer. This is an example of a continuous consumer, which processes fragments in the order that they are ingested by the stream.

    GetMedia enables video-processing applications to fail or fall behind, and then catch up with no additional effort. Using GetMedia, applications can process data that's archived in the data store, and as the application catches up, GetMedia continues to feed media data in real time as it arrives.

  - GetMediaForFragmentList (and ListFragments) – Batch processing applications are considered offline consumers. Offline consumers might choose to explicitly fetch particular media fragments or ranges of video by combining the ListFragments and GetMediaForFragmentList APIs. ListFragments and GetMediaForFragmentList enable an application to identify segments of video for a particular time range or fragment range, and then fetch those fragments either sequentially or in parallel for processing. This approach is suitable for MapReduce application suites, which must quickly process large amounts of data in parallel.

    For example, suppose that a consumer wants to process one day's worth of video fragments. The consumer would do the following:

    - Get a list of fragments by calling the ListFragments API and specifying a time range to select the desired collection of fragments. The API returns metadata from all the fragments in the specified time range. The metadata provides information such as fragment number, producer-side and server-side timestamps, and so on.

    - Take the fragment metadata list and retrieve fragments, in any order. For example, to process all the fragments for the day, the consumer might choose to split the list into sublists and have workers (for example, multiple Amazon EC2 instances) fetch the fragments in parallel using GetMediaForFragmentList, and process them in parallel.
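The offline-consumer workflow above can be sketched in Python. This is a minimal illustration under stated assumptions, not an official client: `one_day_selector` builds a dictionary in the shape that the boto3 `kinesis-video-archived-media` client's `list_fragments` method accepts for its `FragmentSelector` parameter, while `split_into_sublists`, `fetch_fragments`, and `process_day` are hypothetical helpers. `fetch_fragments` only stands in for a worker's `get_media_for_fragment_list` call so that the sketch runs without AWS credentials, and local threads play the role of the separate workers (such as EC2 instances) described above.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime, timedelta, timezone


def one_day_selector(day_start):
    """Build a ListFragments FragmentSelector covering 24 hours of server timestamps."""
    return {
        "FragmentSelectorType": "SERVER_TIMESTAMP",
        "TimestampRange": {
            "StartTimestamp": day_start,
            "EndTimestamp": day_start + timedelta(days=1),
        },
    }


def split_into_sublists(fragment_numbers, num_workers):
    """Divide the fragment list into at most num_workers contiguous sublists."""
    size = max(1, -(-len(fragment_numbers) // num_workers))  # ceiling division
    return [fragment_numbers[i:i + size] for i in range(0, len(fragment_numbers), size)]


def fetch_fragments(fragment_numbers):
    """Hypothetical stand-in for a GetMediaForFragmentList worker.

    A real worker might call, for example,
    archived_media.get_media_for_fragment_list(StreamName=..., Fragments=fragment_numbers)
    and parse the MKV payload; this stub only reports what it would fetch.
    """
    return {"fragments": fragment_numbers, "count": len(fragment_numbers)}


def process_day(fragment_metadata, num_workers=4):
    # ListFragments returns metadata entries whose FragmentNumber is a string.
    numbers = [f["FragmentNumber"] for f in fragment_metadata]
    sublists = split_into_sublists(numbers, num_workers)
    # Sublists are fetched in parallel; pool.map returns results in input order.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return list(pool.map(fetch_fragments, sublists))


if __name__ == "__main__":
    selector = one_day_selector(datetime(2024, 1, 1, tzinfo=timezone.utc))
    # Pretend these entries came back from list_fragments(..., FragmentSelector=selector).
    metadata = [{"FragmentNumber": str(n)} for n in range(10)]
    for result in process_day(metadata, num_workers=4):
        print(result["count"], result["fragments"])
```

Because the consumer may retrieve fragments in any order, the sketch splits the list into contiguous sublists purely for convenience. Before choosing a sublist size, check the GetMediaForFragmentList API reference for the per-request limit on the number of fragments.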

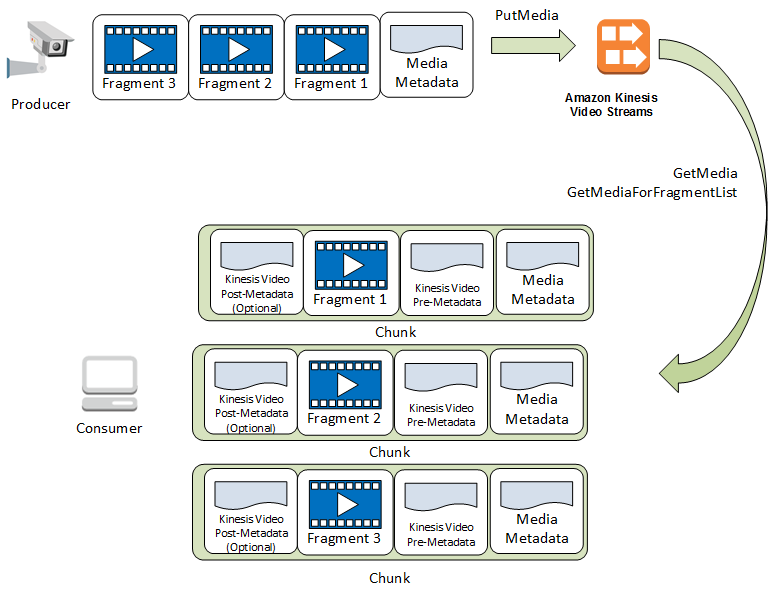

The following diagram shows the data flow for fragments and chunks during these API calls.

When a producer sends a PutMedia request, it sends media metadata in the payload, and then sends a sequence of media data fragments. Upon receiving the data, Kinesis Video Streams stores incoming media data as Kinesis Video Streams chunks. Each chunk consists of the following:

- A copy of the media metadata
- A fragment
- Kinesis Video Streams-specific metadata; for example, the fragment number and server-side and producer-side timestamps

When a consumer requests media data, Kinesis Video Streams returns a stream of chunks, starting with the fragment number that you specify in the request.

If you enable data persistence for the stream, after receiving a fragment on the stream, Kinesis Video Streams also saves a copy of the fragment to the data store.
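To make the chunk layout and the GetMedia behavior described earlier concrete, here is a toy Python model. It is an illustrative assumption, not the service's real storage or wire format: `Chunk` and `ToyStream` are hypothetical names, fragment numbers are simplified to a counter starting at 1, a chunk is served from the data store only after it has aged out of a fixed-size in-memory buffer, and the service's archiving lag is not modeled.

```python
import time
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    media_metadata: str      # copy of the media metadata sent in the PutMedia payload
    fragment: bytes          # one self-contained fragment
    fragment_number: int     # simplification: real fragment numbers are opaque strings
    producer_timestamp: float
    server_timestamp: float


class ToyStream:
    """Toy model of chunk storage; NOT the service's real storage layout."""

    def __init__(self, persistence_enabled, buffer_size=3, clock=time.time):
        self.persistence_enabled = persistence_enabled
        self.buffer = deque(maxlen=buffer_size)  # in-memory stream buffer (recent chunks)
        self.data_store = []                     # archived copies (persistence only)
        self.clock = clock
        self._next_number = 1

    def put_media(self, media_metadata, fragments):
        """Wrap each (producer_timestamp, fragment) pair in a chunk."""
        for producer_ts, fragment in fragments:
            chunk = Chunk(media_metadata, fragment, self._next_number,
                          producer_ts, self.clock())
            self._next_number += 1     # fragment numbers only increase
            self.buffer.append(chunk)  # oldest chunk drops out when the buffer is full
            if self.persistence_enabled:
                self.data_store.append(chunk)

    def get_media(self, start_fragment_number):
        """Yield (source, chunk) pairs in fragment-number order from the start fragment."""
        oldest_buffered = self.buffer[0].fragment_number if self.buffer else self._next_number
        # Chunks older than the buffer's contents come from the data store...
        for chunk in self.data_store:
            if start_fragment_number <= chunk.fragment_number < oldest_buffered:
                yield "data_store", chunk
        # ...then reading switches to the in-memory buffer.
        for chunk in self.buffer:
            if chunk.fragment_number >= start_fragment_number:
                yield "buffer", chunk


if __name__ == "__main__":
    stream = ToyStream(persistence_enabled=True, buffer_size=3)
    stream.put_media("codec=h264", [(float(i), bytes([i])) for i in range(5)])
    for source, chunk in stream.get_media(2):
        print(chunk.fragment_number, source)
```

In this model, turning persistence off means a chunk that has left the buffer cannot be returned at all, so a consumer that starts from an old fragment number receives only what the buffer still holds.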

Endpoint discovery pattern

Control Plane REST APIs

To access the Kinesis Video Streams Control Plane REST APIs, use the Kinesis Video Streams service endpoints.

Data Plane REST APIs

Kinesis Video Streams is built on a cellular architecture to improve scaling and traffic isolation. Because each stream is mapped to a specific cell in a Region, your application must use the cell-specific endpoints for the cell that your stream is mapped to. When accessing the Data Plane REST APIs, you must discover and manage the correct endpoints yourself. This process, the endpoint discovery pattern, works as follows:

- The endpoint discovery pattern starts with a call to one of the endpoint-retrieval actions, GetDataEndpoint or GetSignalingChannelEndpoint. These actions belong to the Control Plane.
  - If you are retrieving the endpoints for the Amazon Kinesis Video Streams Media or Amazon Kinesis Video Streams Archived Media services, use GetDataEndpoint.
  - If you are retrieving the endpoints for Amazon Kinesis Video Signaling Channels, Amazon Kinesis Video WebRTC Storage, or Kinesis Video Signaling, use GetSignalingChannelEndpoint.
- Cache and reuse the endpoint.
- If the cached endpoint no longer works, call the same endpoint-retrieval action again to refresh the endpoint.
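The cache-and-refresh steps can be sketched as a small Python class. This is a hedged sketch, not an AWS SDK feature: `EndpointCache` and `EndpointError` are hypothetical names, and the `discover` callable stands in for the Control Plane call. With boto3, that call could be `get_data_endpoint(StreamName=..., APIName=...)` on the `kinesisvideo` client, which returns the endpoint in its `DataEndpoint` field; deciding which errors mean a cached endpoint "no longer works" is left to the caller.

```python
class EndpointError(Exception):
    """Hypothetical signal that a cached endpoint no longer works."""


class EndpointCache:
    """Cache data-plane endpoints per (stream, API) and refresh them on failure."""

    def __init__(self, discover):
        # discover(stream_name, api_name) -> endpoint URL. In real code this could
        # wrap the Control Plane call, for example with boto3:
        #   boto3.client("kinesisvideo").get_data_endpoint(
        #       StreamName=stream_name, APIName=api_name)["DataEndpoint"]
        self._discover = discover
        self._cache = {}

    def endpoint(self, stream_name, api_name, refresh=False):
        """Return the cached endpoint, calling discover only on a miss or refresh."""
        key = (stream_name, api_name)
        if refresh or key not in self._cache:
            self._cache[key] = self._discover(stream_name, api_name)
        return self._cache[key]

    def call(self, stream_name, api_name, request):
        """Invoke request(endpoint); on EndpointError, rediscover once and retry."""
        try:
            return request(self.endpoint(stream_name, api_name))
        except EndpointError:
            fresh = self.endpoint(stream_name, api_name, refresh=True)
            return request(fresh)


if __name__ == "__main__":
    cache = EndpointCache(lambda stream, api: f"https://{api.lower()}.example")
    print(cache.call("my-stream", "GET_MEDIA", lambda ep: "request sent to " + ep))
```

The cache is keyed by both stream and API name because GetDataEndpoint returns an endpoint for one specific API, such as GET_MEDIA or PUT_MEDIA. A production version would also bound the number of retries and apply backoff.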

Producer libraries

After you create a Kinesis video stream, you can start sending data to it. In your application code, you can use the producer libraries to extract data from your media sources and upload it to your Kinesis video stream. For more information about the available producer libraries, see Upload to Kinesis Video Streams.