Visualize Amazon SageMaker Debugger output tensors in TensorBoard

Important

This page is deprecated in favor of Amazon SageMaker AI with TensorBoard, which provides a comprehensive TensorBoard experience integrated with SageMaker Training and the access control functionalities of SageMaker AI domain. To learn more, see TensorBoard in Amazon SageMaker AI.

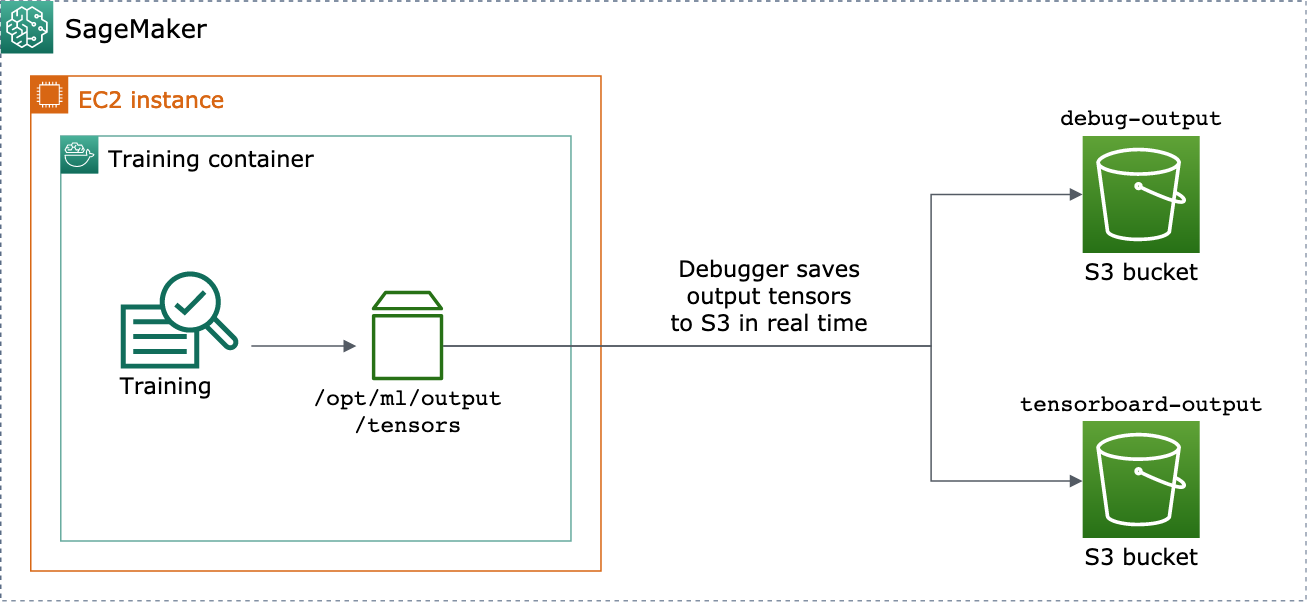

Use SageMaker Debugger to create output tensor files that are compatible with TensorBoard. You can then load the files into TensorBoard to visualize and analyze your SageMaker training jobs. Debugger generates these TensorBoard-compatible files automatically. For any hook configuration that you customize for saving output tensors, Debugger can create scalar summaries, distributions, and histograms that you can import into TensorBoard.

You can enable this by passing `DebuggerHookConfig` and `TensorBoardOutputConfig` objects to an estimator.

The following procedure explains how to save scalars, weights, and biases as full tensors, histograms, and distributions that you can visualize with TensorBoard. Debugger saves them to the training container's local path (the default path is `/opt/ml/output/tensors`) and syncs them to the Amazon S3 locations that you pass through the Debugger output configuration objects.
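The save intervals in the procedure that follows determine which global steps end up in TensorBoard. The following minimal sketch models that schedule in plain Python. It assumes that a step is saved when all three of these conditions hold:

- The step is at or after `start_step`.
- The step is before `end_step`, which is treated as exclusive to match "until the global step reaches 500".
- The step is a multiple of `save_interval`, counted from `start_step`.

The `saved_steps` function and the 2,000-step training length are hypothetical. The sketch illustrates the schedule and is not Debugger's own code.

```python
from typing import List, Optional


def saved_steps(
    total_steps: int,
    save_interval: int,
    start_step: int = 0,
    end_step: Optional[int] = None,
) -> List[int]:
    """Return the global steps below total_steps at which a collection is saved.

    Assumed rule (a model of the schedule described in the procedure, not
    Debugger code): save step s if start_step <= s < end_step and
    (s - start_step) is a multiple of save_interval. end_step=None means
    "no upper limit".
    """
    if save_interval <= 0:
        raise ValueError("save_interval must be a positive integer")
    stop = total_steps if end_step is None else min(total_steps, end_step)
    # range() yields start_step, start_step + save_interval, ... while below stop.
    return list(range(start_step, stop, save_interval))


# Hypothetical 2,000-step training run.
biases = saved_steps(total_steps=2000, save_interval=10, end_step=500)  # biases collection
weights = saved_steps(total_steps=2000, save_interval=500)  # default interval of 500

print(len(biases), biases[:3], biases[-1])  # 50 [0, 10, 20] 490
print(weights)  # [0, 500, 1000, 1500]
```

Under these assumptions, the `biases` collection stops being written after step 490, and the `weights` collection lands on every 500th step.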

To save TensorBoard-compatible output tensor files using Debugger

1. Set up a `tensorboard_output_config` configuration object to save TensorBoard output using the Debugger `TensorBoardOutputConfig` class. For the `s3_output_path` parameter, specify the default S3 bucket of the current SageMaker AI session or a preferred S3 bucket. This example does not set the `container_local_output_path` parameter, so the default local path `/opt/ml/output/tensors` is used.

   ```python
   import sagemaker
   from sagemaker.debugger import TensorBoardOutputConfig

   # Use the default S3 bucket of the current SageMaker AI session.
   bucket = sagemaker.Session().default_bucket()
   tensorboard_output_config = TensorBoardOutputConfig(
       s3_output_path='s3://{}'.format(bucket)
   )
   ```

   For additional information, see the Debugger `TensorBoardOutputConfig` API in the Amazon SageMaker Python SDK.

2. Configure the Debugger hook and customize the hook parameter values. For example, the following code configures a Debugger hook to save the following outputs:
   - All scalar outputs every 100 steps in training phases and every 10 steps in validation phases.
   - The `weights` parameters every 500 steps. The default `save_interval` value for saving tensor collections is 500.
   - The `biases` parameters every 10 global steps until the global step reaches 500.

   ```python
   from sagemaker.debugger import CollectionConfig, DebuggerHookConfig

   hook_config = DebuggerHookConfig(
       # Save intervals for the training and validation (eval) phases.
       hook_parameters={
           "train.save_interval": "100",
           "eval.save_interval": "10"
       },
       collection_configs=[
           # Weights collection with default settings.
           CollectionConfig("weights"),
           # Biases every 10 steps until step 500, also writing histograms.
           CollectionConfig(
               name="biases",
               parameters={
                   "save_interval": "10",
                   "end_step": "500",
                   "save_histogram": "True"
               }
           ),
       ]
   )
   ```

   For more information about the Debugger configuration APIs, see the Debugger `CollectionConfig` and `DebuggerHookConfig` APIs in the Amazon SageMaker Python SDK.

3. Construct a SageMaker AI estimator and pass it the Debugger configuration objects. The following example template shows how to create a generic SageMaker AI estimator. You can replace `estimator` and `Estimator` with the estimator parent classes and estimator classes of other SageMaker AI frameworks. The SageMaker AI framework estimators available for this functionality are `TensorFlow`, `PyTorch`, and `MXNet`.

   ```python
   from sagemaker.estimator import Estimator

   estimator = Estimator(
       ...,
       # Debugger parameters
       debugger_hook_config=hook_config,
       tensorboard_output_config=tensorboard_output_config
   )
   estimator.fit()
   ```

   The `estimator.fit()` method starts a training job. Debugger writes the output tensor files in real time to the Debugger S3 output path and to the TensorBoard S3 output path. To retrieve the output paths, use the following estimator methods:

   - For the Debugger S3 output path, use `estimator.latest_job_debugger_artifacts_path()`.
   - For the TensorBoard S3 output path, use `estimator.latest_job_tensorboard_artifacts_path()`.

4. After the training has completed, check the names of the saved output tensors:

   ```python
   from smdebug.trials import create_trial

   # Load the Debugger output of the latest training job as a trial.
   trial = create_trial(estimator.latest_job_debugger_artifacts_path())
   trial.tensor_names()
   ```

5. Check the TensorBoard output data in Amazon S3:

   ```
   tensorboard_output_path = estimator.latest_job_tensorboard_artifacts_path()
   print(tensorboard_output_path)
   !aws s3 ls {tensorboard_output_path}/
   ```

6. Download the TensorBoard output data to your notebook instance. For example, the following AWS CLI command downloads the TensorBoard files to `./logs/fit` under the current working directory of your notebook instance.

   ```
   !aws s3 cp --recursive {tensorboard_output_path} ./logs/fit
   ```

7. Compress the file directory to a TAR file to download to your local machine.

   ```
   !tar -cf logs.tar logs
   ```

8. Download the TensorBoard TAR file to your device and extract it to a directory. Then launch a Jupyter notebook server, open a new notebook, and run the TensorBoard app.

   ```
   !tar -xf logs.tar
   %load_ext tensorboard
   %tensorboard --logdir logs/fit
   ```

The following animated screenshot illustrates steps 5 through 8. It demonstrates how to download the Debugger TensorBoard TAR file and load the file in a Jupyter notebook on your local device.
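You might prefer to stay in Python instead of calling `tar` through a shell escape. The following sketch does the packing from step 7 and the extraction from step 8 with Python's standard `tarfile` module. The `pack_logs` and `unpack_logs` helpers are hypothetical names used only for this example. On Python versions that provide extraction filters, the sketch passes the `"data"` filter so that archive members cannot be written outside the destination directory.

```python
import tarfile
import tempfile
from pathlib import Path


def pack_logs(log_dir: str, archive_path: str) -> None:
    """Write log_dir into an uncompressed TAR file, like `tar -cf logs.tar logs`."""
    with tarfile.open(archive_path, "w") as tar:
        # Store entries under the directory's own name (for example, logs/fit/...).
        tar.add(log_dir, arcname=Path(log_dir).name)


def unpack_logs(archive_path: str, dest_dir: str) -> None:
    """Extract a TAR file into dest_dir, like `tar -xf logs.tar`."""
    with tarfile.open(archive_path, "r") as tar:
        if hasattr(tarfile, "data_filter"):
            # Python versions with extraction filters: reject unsafe members.
            tar.extractall(path=dest_dir, filter="data")
        else:
            tar.extractall(path=dest_dir)


# Round trip on a throwaway directory that mimics logs/fit.
with tempfile.TemporaryDirectory() as tmp:
    fit_dir = Path(tmp, "logs", "fit")
    fit_dir.mkdir(parents=True)
    (fit_dir / "events.out.tfevents.example").write_bytes(b"placeholder")

    archive = Path(tmp, "logs.tar")
    pack_logs(str(Path(tmp, "logs")), str(archive))

    out_dir = Path(tmp, "extracted")
    out_dir.mkdir()
    unpack_logs(str(archive), str(out_dir))
    print((out_dir / "logs" / "fit" / "events.out.tfevents.example").exists())  # True
```

With the real data, you would call `pack_logs("logs", "logs.tar")` on the notebook instance and `unpack_logs("logs.tar", ".")` on your device.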
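Before you run `%tensorboard --logdir logs/fit` in step 8, you can check that the download actually produced event files. The following sketch searches a log directory for files whose names contain `tfevents`. It assumes TensorBoard's usual `events.out.tfevents.*` naming. The `find_event_files` helper is hypothetical, and so is the name pattern, so adjust the pattern if your Debugger output uses different file names.

```python
from pathlib import Path
from typing import List


def find_event_files(log_dir: str) -> List[Path]:
    """Return files under log_dir whose names contain "tfevents", sorted by path.

    Assumption: event files follow the usual events.out.tfevents.* naming.
    This only checks that matching files exist; it does not parse them.
    """
    root = Path(log_dir)
    if not root.is_dir():
        return []
    return sorted(p for p in root.rglob("*tfevents*") if p.is_file())


# Check the directory that step 8 passes to --logdir.
event_files = find_event_files("logs/fit")
if not event_files:
    print("No event files found under logs/fit")
for path in event_files:
    print(path)
```

If the function returns an empty list, recheck the S3 path printed in step 5 and the download in step 6 before you start TensorBoard.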