Using an Oracle database as a source for Amazon DMS

You can migrate data from one or many Oracle databases using Amazon DMS. With an Oracle database as a source, you can migrate data to any of the targets supported by Amazon DMS.

Amazon DMS supports the following Oracle database editions:

-

Oracle Enterprise Edition

-

Oracle Standard Edition

-

Oracle Express Edition

-

Oracle Personal Edition

For information about versions of Oracle databases that Amazon DMS supports as a source, see Sources for Amazon DMS.

You can use Secure Sockets Layer (SSL) to encrypt connections between your Oracle endpoint and your replication instance. For more information on using SSL with an Oracle endpoint, see SSL support for an Oracle endpoint.

Amazon DMS supports the use of Oracle transparent data encryption (TDE) to encrypt data at rest in the source database. For more information on using Oracle TDE with an Oracle source endpoint, see Supported encryption methods for using Oracle as a source for Amazon DMS.

Amazon DMS supports the use of TLS version 1.2 and later with Oracle endpoints (and all other endpoint types), and recommends using TLS version 1.3 or later.

Follow these steps to configure an Oracle database as an Amazon DMS source endpoint:

-

Create an Oracle user with the appropriate permissions for Amazon DMS to access your Oracle source database.

-

Create an Oracle source endpoint that conforms with your chosen Oracle database configuration. To create a full-load-only task, no further configuration is needed.

-

To create a task that handles change data capture (a CDC-only or full-load and CDC task), choose Oracle LogMiner or Amazon DMS Binary Reader to capture data changes. Choosing LogMiner or Binary Reader determines some of the later permissions and configuration options. For a comparison of LogMiner and Binary Reader, see the following section.

Note

For more information on full-load tasks, CDC-only tasks, and full-load and CDC tasks, see Creating a task.

For additional details on working with Oracle source databases and Amazon DMS, see the following sections.

Topics

Working with a self-managed Oracle database as a source for Amazon DMS

Working with an Amazon-managed Oracle database as a source for Amazon DMS

Supported encryption methods for using Oracle as a source for Amazon DMS

Supported compression methods for using Oracle as a source for Amazon DMS

Replicating nested tables using Oracle as a source for Amazon DMS

Storing REDO on Oracle ASM when using Oracle as a source for Amazon DMS

Endpoint settings when using Oracle as a source for Amazon DMS

Using Oracle LogMiner or Amazon DMS Binary Reader for CDC

In Amazon DMS, there are two methods for reading the redo logs when doing change data capture (CDC) for Oracle as a source: Oracle LogMiner and Amazon DMS Binary Reader. LogMiner is an Oracle API to read the online redo logs and archived redo log files. Binary Reader is an Amazon DMS method that reads and parses the raw redo log files directly. These methods have the following features.

| Feature | LogMiner | Binary Reader |
|---|---|---|
| Easy to configure | Yes | No |
| Lower impact on source system I/O and CPU | No | Yes |
| Better CDC performance | No | Yes |
| Supports Oracle table clusters | Yes | No |
| Supports all types of Oracle Hybrid Columnar Compression (HCC) | Yes | Partially. Binary Reader does not support QUERY LOW for tasks with CDC. All other HCC types are fully supported. |
| LOB column support in Oracle 12c only | No (LOB support is not available with LogMiner in Oracle 12c.) | Yes |
| Supports UPDATE statements that affect only LOB columns | No | Yes |
| Supports Oracle transparent data encryption (TDE) | Partially. When using Oracle LogMiner, Amazon DMS does not support TDE encryption on column level for Amazon RDS for Oracle. | Partially. Binary Reader supports TDE only for self-managed Oracle databases. |
| Supports all Oracle compression methods | Yes | No |
| Supports XA transactions | No | Yes |
| RAC | Yes. Not recommended, due to performance reasons and some internal DMS limitations. | Yes. Highly recommended. |

Note

By default, Amazon DMS uses Oracle LogMiner for change data capture (CDC).

Amazon DMS supports transparent data encryption (TDE) methods when working with an Oracle source database. If the TDE credentials you specify are incorrect, the Amazon DMS migration task does not fail, which can impact ongoing replication of encrypted tables. For more information about specifying TDE credentials, see Supported encryption methods for using Oracle as a source for Amazon DMS.

The main advantages of using LogMiner with Amazon DMS include the following:

-

LogMiner supports most Oracle options, such as encryption options and compression options. Binary Reader does not support all Oracle options, particularly compression and most options for encryption.

-

LogMiner offers a simpler configuration, especially compared to Binary Reader direct-access setup or when the redo logs are managed using Oracle Automatic Storage Management (ASM).

-

LogMiner supports table clusters for use by Amazon DMS. Binary Reader does not.

The main advantages of using Binary Reader with Amazon DMS include the following:

-

For migrations with a high volume of changes, LogMiner might have some I/O or CPU impact on the computer hosting the Oracle source database. Binary Reader has less chance of having I/O or CPU impact because logs are mined directly rather than making multiple database queries.

-

For migrations with a high volume of changes, CDC performance is usually much better when using Binary Reader compared with using Oracle LogMiner.

-

Binary Reader supports CDC for LOBs in Oracle version 12c. LogMiner does not.

In general, use Oracle LogMiner for migrating your Oracle database unless you have one of the following situations:

-

You need to run several migration tasks on the source Oracle database.

-

The volume of changes or the redo log volume on the source Oracle database is high, or you have changes and are also using Oracle ASM.

Note

If you change between using Oracle LogMiner and Amazon DMS Binary Reader, make sure to restart the CDC task.

Configuration for CDC on an Oracle source database

For an Oracle source endpoint to connect to the database for a change data capture (CDC) task, you might need to specify extra connection attributes. This can be true for either a full-load and CDC task or for a CDC-only task. The extra connection attributes that you specify depend on the method you use to access the redo logs: Oracle LogMiner or Amazon DMS Binary Reader.

You specify extra connection attributes when you create a source endpoint. If you have multiple connection attribute settings, separate them from each other by semicolons with no additional white space (for example, oneSetting;thenAnother).

Amazon DMS uses LogMiner by default. You don't have to specify additional extra connection attributes to use it.

To use Binary Reader to access the redo logs, add the following extra connection attributes.

useLogMinerReader=N;useBfile=Y;

Use the following format for the extra connection attributes to access a server that uses ASM with Binary Reader.

useLogMinerReader=N;useBfile=Y;asm_user=asm_username;asm_server=RAC_server_ip_address:port_number/+ASM;

Set the source endpoint Password request parameter to both the Oracle user password and the ASM password, separated by a comma as follows.

oracle_user_password,asm_user_password

Where the Oracle source uses ASM, you can work with high-performance options in Binary Reader for transaction processing at scale. These options include extra connection attributes to specify the number of parallel threads (parallelASMReadThreads) and the number of read-ahead buffers (readAheadBlocks). Setting these attributes together can significantly improve the performance of the CDC task. The following settings provide good results for most ASM configurations.

useLogMinerReader=N;useBfile=Y;asm_user=asm_username;asm_server=RAC_server_ip_address:port_number/+ASM;parallelASMReadThreads=6;readAheadBlocks=150000;

For more information on values that extra connection attributes support, see Endpoint settings when using Oracle as a source for Amazon DMS.
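As a rough illustration of the formatting rules above, the following Python sketch assembles an extra connection attribute string and the combined password value. The function names `build_extra_connection_attributes` and `combined_password` are hypothetical helpers, not part of any Amazon DMS tooling, and the ASM user, host, and port are the same placeholders used in the examples above. Note that this sketch does not append the trailing semicolon shown in the examples.

```python
def build_extra_connection_attributes(settings):
    """Join name=value pairs with semicolons and no additional white space."""
    parts = []
    for name, value in settings.items():
        name, value = str(name).strip(), str(value).strip()
        # A semicolon inside a name or value would be read as a separator.
        if ";" in name or ";" in value:
            raise ValueError(f"semicolon not allowed in setting {name!r}")
        parts.append(f"{name}={value}")
    return ";".join(parts)


def combined_password(oracle_user_password, asm_user_password):
    """Oracle user password and ASM password, separated by a comma."""
    return f"{oracle_user_password},{asm_user_password}"


# Placeholder values only; replace them with your own ASM user, host, and port.
asm_settings = {
    "useLogMinerReader": "N",
    "useBfile": "Y",
    "asm_user": "asm_username",
    "asm_server": "RAC_server_ip_address:port_number/+ASM",
    "parallelASMReadThreads": 6,
    "readAheadBlocks": 150000,
}
print(build_extra_connection_attributes(asm_settings))
```

Building the string from a dictionary keeps stray spaces out of the result, which is the formatting mistake the semicolon rule guards against.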

In addition, the performance of a CDC task with an Oracle source that uses ASM depends on other settings that you choose. These settings include your Amazon DMS extra connection attributes and the SQL settings to configure the Oracle source. For more information on extra connection attributes for an Oracle source using ASM, see Endpoint settings when using Oracle as a source for Amazon DMS.

You also need to choose an appropriate CDC start point. Typically when you do this, you want to identify the point of transaction processing that captures the earliest open transaction to begin CDC from. Otherwise, the CDC task can miss earlier open transactions. For an Oracle source database, you can choose a CDC native start point based on the Oracle system change number (SCN) to identify this earliest open transaction. For more information, see Performing replication starting from a CDC start point.

For more information on configuring CDC for a self-managed Oracle database as a source, see Account privileges required when using Oracle LogMiner to access the redo logs, Account privileges required when using Amazon DMS Binary Reader to access the redo logs, and Additional account privileges required when using Binary Reader with Oracle ASM.

For more information on configuring CDC for an Amazon-managed Oracle database as a source, see Configuring a CDC task to use Binary Reader with an RDS for Oracle source for Amazon DMS and Using an Amazon RDS Oracle Standby (read replica) as a source with Binary Reader for CDC in Amazon DMS.

Workflows for configuring a self-managed or Amazon-managed Oracle source database for Amazon DMS

Configuring an Oracle source database

To configure a self-managed source database instance, use the following workflow steps, depending on how you perform CDC.

Use the following workflow steps to configure an Amazon-managed Oracle source database instance.

| For this workflow step | If you perform CDC using LogMiner, do this | If you perform CDC using Binary Reader, do this |

|---|---|---|

| Grant Oracle account privileges. | For more information, see User account privileges required on an Amazon-managed Oracle source for Amazon DMS. | For more information, see User account privileges required on an Amazon-managed Oracle source for Amazon DMS. |

| Prepare the source database for replication using CDC. | For more information, see Configuring an Amazon-managed Oracle source for Amazon DMS. | For more information, see Configuring an Amazon-managed Oracle source for Amazon DMS. |

| Grant additional Oracle user privileges required for CDC. | No additional account privileges are required. | For more information, see Configuring a CDC task to use Binary Reader with an RDS for Oracle source for Amazon DMS. |

| If you haven't already done so, configure the task to use LogMiner or Binary Reader for CDC. | For more information, see Using Oracle LogMiner or Amazon DMS Binary Reader for CDC. | For more information, see Using Oracle LogMiner or Amazon DMS Binary Reader for CDC. |

| Configure Oracle Standby as a source for CDC. | Amazon DMS does not support Oracle Standby as a source. | For more information, see Using an Amazon RDS Oracle Standby (read replica) as a source with Binary Reader for CDC in Amazon DMS. |

Working with a self-managed Oracle database as a source for Amazon DMS

A self-managed database is a database that you configure and control, either a local on-premises database instance or a database on Amazon EC2. Following, you can find out about the privileges and configurations you need when using a self-managed Oracle database with Amazon DMS.

User account privileges required on a self-managed Oracle source for Amazon DMS

To use an Oracle database as a source in Amazon DMS, grant the following privileges to the Oracle user specified in the Oracle endpoint connection settings.

Note

When granting privileges, use the actual name of objects, not the synonym for each object. For example, use V_$OBJECT including the underscore, not V$OBJECT without the underscore.

GRANT CREATE SESSION TO dms_user;
GRANT SELECT ANY TRANSACTION TO dms_user;
GRANT SELECT ON V_$ARCHIVED_LOG TO dms_user;
GRANT SELECT ON V_$LOG TO dms_user;
GRANT SELECT ON V_$LOGFILE TO dms_user;
GRANT SELECT ON V_$LOGMNR_LOGS TO dms_user;
GRANT SELECT ON V_$LOGMNR_CONTENTS TO dms_user;
GRANT SELECT ON V_$DATABASE TO dms_user;
GRANT SELECT ON V_$THREAD TO dms_user;
GRANT SELECT ON V_$PARAMETER TO dms_user;
GRANT SELECT ON V_$NLS_PARAMETERS TO dms_user;
GRANT SELECT ON V_$TIMEZONE_NAMES TO dms_user;
GRANT SELECT ON V_$TRANSACTION TO dms_user;
GRANT SELECT ON V_$CONTAINERS TO dms_user;
GRANT SELECT ON ALL_INDEXES TO dms_user;
GRANT SELECT ON ALL_OBJECTS TO dms_user;
GRANT SELECT ON ALL_TABLES TO dms_user;
GRANT SELECT ON ALL_USERS TO dms_user;
GRANT SELECT ON ALL_CATALOG TO dms_user;
GRANT SELECT ON ALL_CONSTRAINTS TO dms_user;
GRANT SELECT ON ALL_CONS_COLUMNS TO dms_user;
GRANT SELECT ON ALL_TAB_COLS TO dms_user;
GRANT SELECT ON ALL_IND_COLUMNS TO dms_user;
GRANT SELECT ON ALL_ENCRYPTED_COLUMNS TO dms_user;
GRANT SELECT ON ALL_LOG_GROUPS TO dms_user;
GRANT SELECT ON ALL_TAB_PARTITIONS TO dms_user;
GRANT SELECT ON SYS.DBA_REGISTRY TO dms_user;
GRANT SELECT ON SYS.OBJ$ TO dms_user;
GRANT SELECT ON DBA_TABLESPACES TO dms_user;
GRANT SELECT ON DBA_OBJECTS TO dms_user; -- Required if the Oracle version is earlier than 11.2.0.3.
GRANT SELECT ON SYS.ENC$ TO dms_user; -- Required if transparent data encryption (TDE) is enabled. For more information on using Oracle TDE with Amazon DMS, see Supported encryption methods for using Oracle as a source for Amazon DMS.
GRANT SELECT ON GV_$TRANSACTION TO dms_user; -- Required if the source database is Oracle RAC in Amazon DMS versions 3.4.6 and higher.
GRANT SELECT ON V_$DATAGUARD_STATS TO dms_user; -- Required if the source database is Oracle Data Guard and Oracle Standby is used in the latest release of DMS version 3.4.6, version 3.4.7, and higher.
GRANT SELECT ON V_$DATABASE_INCARNATION TO dms_user;

Grant the additional following privilege for each replicated table when you are using a specific table list.

GRANT SELECT ON any-replicated-table TO dms_user;
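When the table list is long, the per-table grants can be generated rather than typed by hand. The following Python sketch shows one way to do that; the helper name `grant_select_statements` and the `HR.*` table names are hypothetical and used only for illustration.

```python
def grant_select_statements(tables, user="dms_user"):
    """Return one GRANT SELECT statement per replicated table."""
    return [f"GRANT SELECT ON {table} TO {user};" for table in tables]


# Hypothetical table list; substitute the tables selected by your task.
for statement in grant_select_statements(["HR.EMPLOYEES", "HR.DEPARTMENTS"]):
    print(statement)
```

Review the generated statements before running them, because the sketch does not validate or quote the table names.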

Grant the following additional privilege to use the validation feature.

GRANT EXECUTE ON SYS.DBMS_CRYPTO TO dms_user;

Grant the following additional privilege if you use Binary Reader instead of LogMiner.

GRANT SELECT ON SYS.DBA_DIRECTORIES TO dms_user;

Grant the additional following privilege to expose views.

GRANT SELECT ON ALL_VIEWS TO dms_user;

To expose views, you must also add the exposeViews=true extra connection attribute to your source endpoint.

Grant the following additional privileges when using serverless replications.

GRANT SELECT ON dba_segments TO dms_user;
GRANT SELECT ON v_$tablespace TO dms_user;
GRANT SELECT ON dba_tab_subpartitions TO dms_user;
GRANT SELECT ON dba_extents TO dms_user;

For information about serverless replications, see Working with Amazon DMS Serverless.

Grant the additional following privileges when using Oracle-specific premigration assessments.

GRANT SELECT ON gv_$parameter TO dms_user;
GRANT SELECT ON v_$instance TO dms_user;
GRANT SELECT ON v_$version TO dms_user;
GRANT SELECT ON gv_$ASM_DISKGROUP TO dms_user;
GRANT SELECT ON gv_$database TO dms_user;
GRANT SELECT ON dba_db_links TO dms_user;
GRANT SELECT ON gv_$log_History TO dms_user;
GRANT SELECT ON gv_$log TO dms_user;
GRANT SELECT ON DBA_TYPES TO dms_user;
GRANT SELECT ON DBA_USERS TO dms_user;
GRANT SELECT ON DBA_DIRECTORIES TO dms_user;
GRANT EXECUTE ON SYS.DBMS_XMLGEN TO dms_user;

For information about Oracle-specific premigration assessments, see Oracle assessments.

Prerequisites for handling open transactions for Oracle Standby

When using Amazon DMS versions 3.4.6 and higher, perform the following steps to handle open transactions for Oracle Standby.

-

Create a database link named AWSDMS_DBLINK on the primary database that connects as DMS_USER.

CREATE PUBLIC DATABASE LINK AWSDMS_DBLINK CONNECT TO DMS_USER IDENTIFIED BY DMS_USER_PASSWORD USING '(DESCRIPTION= (ADDRESS=(PROTOCOL=TCP)(HOST=PRIMARY_HOST_NAME_OR_IP)(PORT=PORT)) (CONNECT_DATA=(SERVICE_NAME=SID)) )';

-

Verify the connection to the database link using DMS_USER.

select 1 from dual@AWSDMS_DBLINK

Preparing an Oracle self-managed source database for CDC using Amazon DMS

Prepare your self-managed Oracle database as a source to run a CDC task by doing the following:

Verifying that Amazon DMS supports the source database version

Run a query like the following to verify that the current version of the Oracle source database is supported by Amazon DMS.

SELECT name, value, description FROM v$parameter WHERE name = 'compatible';

Here, name, value, and description are columns of the v$parameter view, and the query returns the row whose name is 'compatible'. If this query runs without error, Amazon DMS supports the current version of the database and you can continue with the migration. If the query raises an error, Amazon DMS does not support the current version of the database. To proceed with migration, first convert the Oracle database to a version supported by Amazon DMS.

Making sure that ARCHIVELOG mode is on

You can run Oracle in two different modes: the ARCHIVELOG mode and the NOARCHIVELOG mode. To run a CDC task, run the database in ARCHIVELOG mode. To find out whether the database is in ARCHIVELOG mode, run the following query.

SQL> SELECT log_mode FROM v$database;

If NOARCHIVELOG mode is returned, set the database to ARCHIVELOG per Oracle instructions.

Setting up supplemental logging

To capture ongoing changes, Amazon DMS requires that you enable minimal supplemental logging on your Oracle source database. In addition, you need to enable supplemental logging on each replicated table in the database.

By default, Amazon DMS adds PRIMARY KEY supplemental logging on all replicated tables. To allow Amazon DMS to add PRIMARY KEY supplemental logging, grant the following privilege for each replicated table.

ALTER on any-replicated-table;

You can disable the default PRIMARY KEY supplemental logging added by Amazon DMS using the extra connection attribute addSupplementalLogging. For more information, see Endpoint settings when using Oracle as a source for Amazon DMS.

Make sure to turn on supplemental logging if your replication task updates a table using a WHERE clause that does not reference a primary key column.

To manually set up supplemental logging

-

Run the following query to verify if supplemental logging is already enabled for the database.

SELECT supplemental_log_data_min FROM v$database;

If the result returned is YES or IMPLICIT, supplemental logging is enabled for the database.

If not, enable supplemental logging for the database by running the following command.

ALTER DATABASE ADD SUPPLEMENTAL LOG DATA;

-

Make sure that the required supplemental logging is added for each replicated table.

Consider the following:

-

If ALL COLUMNS supplemental logging is added to the table, you don't need to add more logging.

-

If a primary key exists, add supplemental logging for the primary key. You can do this either by adding supplemental logging on the table's primary key, or by adding primary key supplemental logging at the database level.

ALTER TABLE Tablename ADD SUPPLEMENTAL LOG DATA (PRIMARY KEY) COLUMNS;
ALTER DATABASE ADD SUPPLEMENTAL LOG DATA (PRIMARY KEY) COLUMNS;

-

If no primary key exists and the table has a single unique index, add all of the unique index's columns to the supplemental log.

ALTER TABLE TableName ADD SUPPLEMENTAL LOG GROUP LogGroupName (UniqueIndexColumn1[, UniqueIndexColumn2] ...) ALWAYS;

Using SUPPLEMENTAL LOG DATA (UNIQUE INDEX) COLUMNS does not add the unique index columns to the log.

-

If no primary key exists and the table has multiple unique indexes, Amazon DMS selects the first unique index in an alphabetically ordered ascending list. You need to add supplemental logging on the selected index's columns as in the previous item.

Using SUPPLEMENTAL LOG DATA (UNIQUE INDEX) COLUMNS does not add the unique index columns to the log.

-

If no primary key exists and there is no unique index, add supplemental logging on all columns.

ALTER TABLE TableName ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS;

In some cases, the target table primary key or unique index is different than the source table primary key or unique index. In such cases, add supplemental logging manually on the source table columns that make up the target table primary key or unique index.

Also, if you change the target table primary key, add supplemental logging on the target unique index's columns instead of the columns of the source primary key or unique index.

-

If a filter or transformation is defined for a table, you might need to enable additional logging.

Consider the following:

-

If ALL COLUMNS supplemental logging is added to the table, you don't need to add more logging.

-

If the table has a unique index or a primary key, add supplemental logging on each column that is involved in a filter or transformation. However, do so only if those columns are different from the primary key or unique index columns.

-

If a transformation includes only one column, don't add this column to a supplemental logging group. For example, for a transformation A+B, add supplemental logging on both columns A and B. However, for a transformation substring(A,10) don't add supplemental logging on column A.

-

To set up supplemental logging on primary key or unique index columns and other columns that are filtered or transformed, you can set up USER_LOG_GROUP supplemental logging. Add this logging on both the primary key or unique index columns and any other specific columns that are filtered or transformed.

For example, to replicate a table named TEST.LOGGING with primary key ID and a filter by the column NAME, you can run a command similar to the following to create the log group supplemental logging.

ALTER TABLE TEST.LOGGING ADD SUPPLEMENTAL LOG GROUP TEST_LOG_GROUP (ID, NAME) ALWAYS;
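The per-table rules above (primary key first, then the alphabetically first unique index, then all columns) can be summarized as a small decision function. The following Python sketch is an illustration only: the function name `supplemental_logging_ddl`, the `_LG` log group suffix, and the input shapes are assumptions, and Python's `sorted` may order index names differently from the ordering Amazon DMS applies. It does not cover the extra columns needed for filters or transformations.

```python
def supplemental_logging_ddl(table, primary_key=None, unique_indexes=None):
    """Pick a table-level supplemental logging statement for one table.

    primary_key: list of primary key column names, or None if there is none.
    unique_indexes: dict mapping unique index name to its list of columns.
    """
    unique_indexes = unique_indexes or {}
    if primary_key:
        return f"ALTER TABLE {table} ADD SUPPLEMENTAL LOG DATA (PRIMARY KEY) COLUMNS;"
    if unique_indexes:
        # With several unique indexes, use the first name in ascending order.
        index_name = sorted(unique_indexes)[0]
        columns = ", ".join(unique_indexes[index_name])
        # The log group name below is a hypothetical naming scheme.
        return (
            f"ALTER TABLE {table} ADD SUPPLEMENTAL LOG GROUP "
            f"{index_name}_LG ({columns}) ALWAYS;"
        )
    # No primary key and no unique index: log all columns.
    return f"ALTER TABLE {table} ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS;"


print(supplemental_logging_ddl("TEST.ORDERS", primary_key=["ID"]))
print(supplemental_logging_ddl("TEST.EVENTS", unique_indexes={"UX_B": ["B"], "UX_A": ["A1", "A2"]}))
print(supplemental_logging_ddl("TEST.NOTES"))
```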

Account privileges required when using Oracle LogMiner to access the redo logs

To access the redo logs using the Oracle LogMiner, grant the following privileges to the Oracle user specified in the Oracle endpoint connection settings.

GRANT EXECUTE on DBMS_LOGMNR to dms_user;
GRANT SELECT on V_$LOGMNR_LOGS to dms_user;
GRANT SELECT on V_$LOGMNR_CONTENTS to dms_user;
GRANT LOGMINING to dms_user; -- Required only if the Oracle version is 12c or higher.

Account privileges required when using Amazon DMS Binary Reader to access the redo logs

To access the redo logs using the Amazon DMS Binary Reader, grant the following privileges to the Oracle user specified in the Oracle endpoint connection settings.

GRANT SELECT on v_$transportable_platform to dms_user; -- Grant this privilege if the redo logs are stored in Oracle Automatic Storage Management (ASM) and AWS DMS accesses them from ASM.
GRANT CREATE ANY DIRECTORY to dms_user; -- Grant this privilege to allow AWS DMS to use Oracle BFILE read file access in certain cases. This access is required when the replication instance does not have file-level access to the redo logs and the redo logs are on non-ASM storage.
GRANT EXECUTE on DBMS_FILE_TRANSFER to dms_user; -- Grant this privilege to copy the redo log files to a temporary folder using the CopyToTempFolder method.
GRANT EXECUTE on DBMS_FILE_GROUP to dms_user;

Binary Reader works with Oracle file features that include Oracle directories. Each Oracle directory object includes the name of the folder containing the redo log files to process. These Oracle directories are not represented at the file system level. Instead, they are logical directories that are created at the Oracle database level. You can view them in the Oracle ALL_DIRECTORIES view.

If you want Amazon DMS to create these Oracle directories, grant the CREATE ANY DIRECTORY privilege described earlier. Amazon DMS creates the directory names with the DMS_ prefix. If you don't grant the CREATE ANY DIRECTORY privilege, create the corresponding directories manually. In some cases when you create the Oracle directories manually, the Oracle user specified in the Oracle source endpoint isn't the user that created these directories. In these cases, also grant the READ on DIRECTORY privilege.

Note

Amazon DMS CDC does not support an Active Data Guard Standby that is not configured to use the automatic redo transport service.

In some cases, you might use Oracle Managed Files (OMF) for storing the logs. Or your source endpoint is in Active Data Guard (ADG), so you can't grant the CREATE ANY DIRECTORY privilege. In these cases, manually create the directories with all the possible log locations before starting the Amazon DMS replication task. If Amazon DMS does not find a precreated directory that it expects, the task stops. Also, Amazon DMS does not delete the entries it has created in the ALL_DIRECTORIES view, so delete them manually.

Additional account privileges required when using Binary Reader with Oracle ASM

To access the redo logs in Automatic Storage Management (ASM) using Binary Reader, grant the following privileges to the Oracle user specified in the Oracle endpoint connection settings.

SELECT ON v_$transportable_platform
SYSASM -- To access the ASM account with Oracle 11g Release 2 (version 11.2.0.2) and higher, grant the Oracle endpoint user the SYSASM privilege. For older supported Oracle versions, it's typically sufficient to grant the Oracle endpoint user the SYSDBA privilege.

You can validate ASM account access by opening a command prompt and invoking one of the following statements, depending on your Oracle version as specified preceding.

If you need the SYSDBA privilege, use the following.

sqlplus asmuser/asmpassword@+asmserver as sysdba

If you need the SYSASM privilege, use the following.

sqlplus asmuser/asmpassword@+asmserver as sysasm

Using a self-managed Oracle Standby as a source with Binary Reader for CDC in Amazon DMS

To configure an Oracle Standby instance as a source when using Binary Reader for CDC, start with the following prerequisites:

-

Amazon DMS currently supports only Oracle Active Data Guard Standby.

-

Make sure that the Oracle Data Guard configuration uses:

-

Redo transport services for automated transfers of redo data.

-

Apply services to automatically apply redo to the standby database.

-

To confirm those requirements are met, execute the following query.

SQL> select open_mode, database_role from v$database;

From the output of that query, confirm that the standby database is opened in READ ONLY mode and redo is being applied automatically. For example:

OPEN_MODE DATABASE_ROLE -------------------- ---------------- READ ONLY WITH APPLY PHYSICAL STANDBY

To configure an Oracle Standby instance as a source when using Binary Reader for CDC

-

Grant additional privileges required to access standby log files.

GRANT SELECT ON v_$standby_log TO dms_user;

-

Create a source endpoint for the Oracle Standby by using the Amazon Web Services Management Console or Amazon CLI. When creating the endpoint, specify the following extra connection attributes.

useLogminerReader=N;useBfile=Y;

Note

In Amazon DMS, you can use extra connection attributes to specify if you want to migrate from the archive logs instead of the redo logs. For more information, see Endpoint settings when using Oracle as a source for Amazon DMS.

-

Configure archived log destination.

DMS Binary Reader for an Oracle source without ASM uses Oracle directories to access archived redo logs. If your database is configured to use the Fast Recovery Area (FRA) as an archive log destination, the location of the archived redo files isn't constant. Each day that archived redo logs are generated results in a new directory being created in the FRA, using the directory name format YYYY_MM_DD. For example:

DB_RECOVERY_FILE_DEST/SID/archivelog/YYYY_MM_DD

When DMS needs access to archived redo files in the newly created FRA directory and the primary read-write database is being used as a source, DMS creates a new Oracle directory or replaces an existing one, as follows.

CREATE OR REPLACE DIRECTORY dmsrep_taskid AS 'DB_RECOVERY_FILE_DEST/SID/archivelog/YYYY_MM_DD';

When the standby database is being used as a source, DMS is unable to create or replace the Oracle directory because the database is in read-only mode. But you can choose to perform one of these additional steps:

-

Modify log_archive_dest_1 to use an actual path instead of the FRA, in a configuration where Oracle won't create daily subdirectories:

ALTER SYSTEM SET log_archive_dest_1='LOCATION=full directory path';

Then, create an Oracle directory object to be used by DMS:

CREATE OR REPLACE DIRECTORY dms_archived_logs AS 'full directory path';

-

Create an additional archive log destination and an Oracle directory object pointing to that destination. For example:

ALTER SYSTEM SET log_archive_dest_3='LOCATION=full directory path';
CREATE DIRECTORY dms_archived_log AS 'full directory path';

Then add an extra connection attribute to the task source endpoint:

archivedLogDestId=3

-

Manually pre-create Oracle directory objects to be used by DMS.

CREATE DIRECTORY dms_archived_log_20210301 AS 'DB_RECOVERY_FILE_DEST/SID/archivelog/2021_03_01';
CREATE DIRECTORY dms_archived_log_20210302 AS 'DB_RECOVERY_FILE_DEST/SID/archivelog/2021_03_02';
...

-

Create an Oracle scheduler job that runs daily and creates the required directory.

-

Configure online log destination.

Create an Oracle directory that points to the OS directory with the standby redo logs:

CREATE OR REPLACE DIRECTORY STANDBY_REDO_DIR AS '<full directory path>';
GRANT READ ON DIRECTORY STANDBY_REDO_DIR TO <dms_user>;
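For the option of pre-creating one Oracle directory object per FRA day, the statements follow a predictable pattern and can be generated ahead of time. The following Python sketch prints them for a range of dates. The helper name `fra_directory_statements` is hypothetical, and the `dms_archived_log_YYYYMMDD` naming simply mirrors the example statements shown earlier in this procedure; it is not a name that Amazon DMS requires.

```python
from datetime import date, timedelta


def fra_directory_statements(base_path, start, days):
    """Return CREATE DIRECTORY statements for `days` consecutive FRA folders."""
    statements = []
    for offset in range(days):
        day = start + timedelta(days=offset)
        name = f"dms_archived_log_{day:%Y%m%d}"   # for example, dms_archived_log_20210301
        path = f"{base_path}/{day:%Y_%m_%d}"      # FRA subdirectory format YYYY_MM_DD
        statements.append(f"CREATE DIRECTORY {name} AS '{path}';")
    return statements


# Placeholder base path, taken from the example above.
base = "DB_RECOVERY_FILE_DEST/SID/archivelog"
for statement in fra_directory_statements(base, date(2021, 3, 1), 2):
    print(statement)
```

A daily scheduler job, as mentioned in the last option, would need to issue the same kind of statement for the current date.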

Using a user-managed database on Oracle Cloud Infrastructure (OCI) as a source for CDC in Amazon DMS

A user-managed database is a database that you configure and control, such as an Oracle database created on a virtual machine (VM), bare metal, or Exadata server, or a database that runs on dedicated infrastructure such as Oracle Cloud Infrastructure (OCI). The following information describes the privileges and configurations you need when using an Oracle user-managed database on OCI as a source for change data capture (CDC) in Amazon DMS.

To configure an OCI hosted user-managed Oracle database as a source for change data capture

-

Grant required user account privileges for a user-managed Oracle source database on OCI. For more information, see Account privileges for a self-managed Oracle source endpoint.

-

Grant account privileges required when using Binary Reader to access the redo logs. For more information, see Account privileges required when using Binary Reader.

-

Add account privileges that are required when using Binary Reader with Oracle Automatic Storage Management (ASM). For more information, see Additional account privileges required when using Binary Reader with Oracle ASM.

-

Set up supplemental logging. For more information, see Setting up supplemental logging.

-

Set up TDE encryption. For more information, see Encryption methods when using an Oracle database as a source endpoint.

The following limitations apply when replicating data from an Oracle source database on Oracle Cloud Infrastructure (OCI).

Limitations

-

DMS does not support using Oracle LogMiner to access the redo logs.

-

DMS does not support Autonomous DB.

Working with an Amazon-managed Oracle database as a source for Amazon DMS

An Amazon-managed database is a database that is on an Amazon service such as Amazon RDS, Amazon Aurora, or Amazon S3. Following, you can find the privileges and configurations that you need to set up when using an Amazon-managed Oracle database with Amazon DMS.

User account privileges required on an Amazon-managed Oracle source for Amazon DMS

Grant the following privileges to the Oracle user account specified in the Oracle source endpoint definition.

Important

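For all parameter values such as dms_user and any-replicated-table, Oracle assumes the value is all uppercase unless you specify the value with a case-sensitive identifier. For example, suppose that you create a dms_user value without using quotation marks, as in CREATE USER myuser or CREATE USER MYUSER. In this case, Oracle identifies and stores the value as all uppercase (MYUSER). If you use double quotation marks, as in CREATE USER "MyUser", Oracle identifies and stores the case-sensitive value that you specify (MyUser).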

GRANT CREATE SESSION TO dms_user;
GRANT SELECT ANY TRANSACTION TO dms_user;
GRANT SELECT ON DBA_TABLESPACES TO dms_user;
GRANT SELECT ON any-replicated-table TO dms_user;
GRANT EXECUTE ON rdsadmin.rdsadmin_util TO dms_user;
-- Required only if the Oracle version is 12c or higher:
GRANT LOGMINING TO dms_user;

In addition, grant SELECT and EXECUTE permissions on SYS objects using the Amazon RDS procedure rdsadmin.rdsadmin_util.grant_sys_object as shown. For more information, see Granting SELECT or EXECUTE privileges to SYS objects.

exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_VIEWS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_TAB_PARTITIONS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_INDEXES', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_OBJECTS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_TABLES', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_USERS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_CATALOG', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_CONSTRAINTS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_CONS_COLUMNS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_TAB_COLS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_IND_COLUMNS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_LOG_GROUPS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$ARCHIVED_LOG', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$LOG', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$LOGFILE', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$DATABASE', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$THREAD', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$PARAMETER', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$NLS_PARAMETERS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$TIMEZONE_NAMES', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$TRANSACTION', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$CONTAINERS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('DBA_REGISTRY', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('OBJ$', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('ALL_ENCRYPTED_COLUMNS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$LOGMNR_LOGS', 'dms_user', 'SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$LOGMNR_CONTENTS','dms_user','SELECT');
exec rdsadmin.rdsadmin_util.grant_sys_object('DBMS_LOGMNR', 'dms_user', 'EXECUTE');
-- (as of Oracle versions 12.1 and higher)
exec rdsadmin.rdsadmin_util.grant_sys_object('REGISTRY$SQLPATCH', 'dms_user', 'SELECT');
-- (for Amazon RDS Active Dataguard Standby (ADG))
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$STANDBY_LOG', 'dms_user', 'SELECT');
-- (for transparent data encryption (TDE))
exec rdsadmin.rdsadmin_util.grant_sys_object('ENC$', 'dms_user', 'SELECT');
-- (for validation with LOB columns)
exec rdsadmin.rdsadmin_util.grant_sys_object('DBMS_CRYPTO', 'dms_user', 'EXECUTE');
-- (for binary reader)
exec rdsadmin.rdsadmin_util.grant_sys_object('DBA_DIRECTORIES','dms_user','SELECT');
-- Required when the source database is Oracle Data guard, and Oracle Standby is used in the latest release of DMS version 3.4.6, version 3.4.7, and higher.
exec rdsadmin.rdsadmin_util.grant_sys_object('V_$DATAGUARD_STATS', 'dms_user', 'SELECT');
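Because the list of SYS object grants is long and repetitive, it can help to generate the statements for a given user name rather than edit them by hand. The following is a minimal Python sketch under that assumption. The function name grant_sys_object_statements and the SYS_OBJECT_GRANTS list are hypothetical, and the list is only an illustrative subset of the objects shown above. The sketch only builds statement text and does not connect to a database.

```python
# Sketch only: builds the same exec statements shown above for one user.
# SYS_OBJECT_GRANTS is an illustrative subset, not the full required list.
SYS_OBJECT_GRANTS = [
    ("ALL_VIEWS", "SELECT"),
    ("V_$ARCHIVED_LOG", "SELECT"),
    ("V_$LOGMNR_CONTENTS", "SELECT"),
    ("DBMS_LOGMNR", "EXECUTE"),
]


def grant_sys_object_statements(user, grants=SYS_OBJECT_GRANTS):
    """Return one exec statement per (object, privilege) pair."""
    return [
        f"exec rdsadmin.rdsadmin_util.grant_sys_object('{obj}', '{user}', '{priv}');"
        for obj, priv in grants
    ]


if __name__ == "__main__":
    # Print the statements so they can be reviewed before being run.
    for statement in grant_sys_object_statements("dms_user"):
        print(statement)
```

Review the generated statements before running them, and extend the list to cover every object your configuration needs.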

For more information on using Amazon RDS Active Dataguard Standby (ADG) with Amazon DMS, see Using an Amazon RDS Oracle Standby (read replica) as a source with Binary Reader for CDC in Amazon DMS.

For more information on using Oracle TDE with Amazon DMS, see Supported encryption methods for using Oracle as a source for Amazon DMS.

Prerequisites for handling open transactions for Oracle Standby

When using Amazon DMS versions 3.4.6 and higher, perform the following steps to handle open transactions for Oracle Standby.

-

Create a database link named AWSDMS_DBLINK on the primary database. DMS_USER uses this database link to connect to the primary database.

CREATE PUBLIC DATABASE LINK AWSDMS_DBLINK
CONNECT TO DMS_USER IDENTIFIED BY DMS_USER_PASSWORD
USING '(DESCRIPTION=
(ADDRESS=(PROTOCOL=TCP)(HOST=PRIMARY_HOST_NAME_OR_IP)(PORT=PORT))
(CONNECT_DATA=(SERVICE_NAME=SID))
)';

-

Verify the connection to the database link using DMS_USER.

select 1 from dual@AWSDMS_DBLINK

Configuring an Amazon-managed Oracle source for Amazon DMS

Before using an Amazon-managed Oracle database as a source for Amazon DMS, perform the following tasks for the Oracle database:

-

Enable automatic backups. For more information about enabling automatic backups, see Enabling automated backups in the Amazon RDS User Guide.

-

Set up supplemental logging.

-

Set up archiving. Archiving the redo logs for your Amazon RDS for Oracle DB instance allows Amazon DMS to retrieve the log information using Oracle LogMiner or Binary Reader.

To set up archiving

-

Run the rdsadmin.rdsadmin_util.set_configuration command to set up archiving.

For example, to retain the archived redo logs for 24 hours, run the following command.

exec rdsadmin.rdsadmin_util.set_configuration('archivelog retention hours',24);
commit;

Note

The commit is required for a change to take effect.

-

Make sure that your storage has enough space for the archived redo logs during the specified retention period. For example, if your retention period is 24 hours, calculate the total size of the archived redo logs that accumulate over a typical hour of transaction processing and multiply that total by 24. Compare this calculated 24-hour total with your available storage space and decide whether you have enough storage space to handle a full 24 hours of transaction processing.
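The sizing rule above is simple arithmetic: hourly archived redo volume multiplied by the retention period, compared with free storage. The following Python sketch illustrates it. The function names and the sample figures (2 GiB of redo per hour, 60 GiB free) are assumptions chosen for illustration and are not measured values.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def required_archive_storage_bytes(redo_bytes_per_hour, retention_hours):
    """Estimate space needed to keep archived redo logs for the retention period."""
    return redo_bytes_per_hour * retention_hours


def has_enough_storage(redo_bytes_per_hour, retention_hours, free_bytes):
    """Return True when free storage covers the estimated archived redo volume."""
    return free_bytes >= required_archive_storage_bytes(redo_bytes_per_hour, retention_hours)


if __name__ == "__main__":
    # Assumed example: 2 GiB of archived redo per typical hour, 24-hour retention.
    needed = required_archive_storage_bytes(2 * GIB, 24)
    print(f"Estimated archived redo volume: {needed / GIB:.0f} GiB")
    print("Enough space:", has_enough_storage(2 * GIB, 24, 60 * GIB))
```

A typical hour can understate peak load, so consider leaving headroom above the computed total.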

To set up supplemental logging

-

Run the following command to enable supplemental logging at the database level.

exec rdsadmin.rdsadmin_util.alter_supplemental_logging('ADD');

-

Run the following command to enable primary key supplemental logging.

exec rdsadmin.rdsadmin_util.alter_supplemental_logging('ADD','PRIMARY KEY');

-

(Optional) Enable key-level supplemental logging at the table level.

Your source database incurs a small bit of overhead when key-level supplemental logging is enabled. Therefore, if you are migrating only a subset of your tables, you might want to enable key-level supplemental logging at the table level. To enable key-level supplemental logging at the table level, run the following command.

alter table table_name add supplemental log data (PRIMARY KEY) columns;
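When you migrate only a subset of tables, you can generate the table-level statement for each table instead of typing it repeatedly. The following Python sketch shows one way to do that. The function name and the sample table names are hypothetical, and the sketch only produces statement text for you to review and run yourself.

```python
def table_level_pk_logging_statements(table_names):
    """Build one table-level primary key supplemental logging statement per table."""
    return [
        f"alter table {name} add supplemental log data (PRIMARY KEY) columns;"
        for name in table_names
    ]


if __name__ == "__main__":
    # Hypothetical table names used only for illustration.
    for statement in table_level_pk_logging_statements(["HR.EMPLOYEES", "HR.DEPARTMENTS"]):
        print(statement)
```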

Configuring a CDC task to use Binary Reader with an RDS for Oracle source for Amazon DMS

You can configure Amazon DMS to access the source Amazon RDS for Oracle instance redo logs using Binary Reader for CDC.

Note

To use Oracle LogMiner, the minimum required user account privileges are sufficient. For more information, see User account privileges required on an Amazon-managed Oracle source for Amazon DMS.

To use Amazon DMS Binary Reader, specify additional settings and extra connection attributes for the Oracle source endpoint, depending on your Amazon DMS version.

Binary Reader support is available in the following versions of Amazon RDS for Oracle:

-

Oracle 11.2 – Versions 11.2.0.4V11 and higher

-

Oracle 12.1 – Versions 12.1.0.2.V7 and higher

-

Oracle 12.2 – All versions

-

Oracle 18.0 – All versions

-

Oracle 19.0 – All versions

To configure CDC using Binary Reader

-

Log in to your Amazon RDS for Oracle source database as the master user and run the following stored procedures to create the server-level directories.

exec rdsadmin.rdsadmin_master_util.create_archivelog_dir;
exec rdsadmin.rdsadmin_master_util.create_onlinelog_dir;

-

Grant the following privileges to the Oracle user account that is used to access the Oracle source endpoint.

GRANT READ ON DIRECTORY ONLINELOG_DIR TO dms_user;
GRANT READ ON DIRECTORY ARCHIVELOG_DIR TO dms_user;

-

Set the following extra connection attributes on the Amazon RDS Oracle source endpoint:

-

For RDS Oracle versions 11.2 and 12.1, set the following.

useLogminerReader=N;useBfile=Y;accessAlternateDirectly=false;useAlternateFolderForOnline=true;oraclePathPrefix=/rdsdbdata/db/{$DATABASE_NAME}_A/;usePathPrefix=/rdsdbdata/log/;replacePathPrefix=true;

-

For RDS Oracle versions 12.2, 18.0, and 19.0, set the following.

useLogminerReader=N;useBfile=Y;

-

Note

Make sure there's no white space following the semicolon separator (;) for multiple attribute settings, for example oneSetting;thenAnother.

For more information on configuring a CDC task, see Configuration for CDC on an Oracle source database.
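Because white space after a semicolon is easy to introduce when you assemble the attribute string by hand, one option is to build it from key-value pairs. The following Python sketch does that. The function name build_extra_connection_attributes is hypothetical. The trailing semicolon mirrors the examples in this section and is an assumption about preferred style, not a documented requirement.

```python
def build_extra_connection_attributes(settings):
    """Join settings as name=value pairs separated by ';' with no white space."""
    parts = []
    for name, value in settings.items():
        if isinstance(value, bool):
            # Attribute values in this section use lowercase true/false.
            value = "true" if value else "false"
        parts.append(f"{str(name).strip()}={str(value).strip()}")
    # Trailing semicolon matches the examples shown in this section.
    return ";".join(parts) + ";"


if __name__ == "__main__":
    # Settings shown above for RDS Oracle versions 12.2, 18.0, and 19.0.
    print(build_extra_connection_attributes({"useLogminerReader": "N", "useBfile": "Y"}))
```

Stripping each name and value guards against stray spaces copied from other sources.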

Using an Amazon RDS Oracle Standby (read replica) as a source with Binary Reader for CDC in Amazon DMS

Verify the following prerequisites for using Amazon RDS for Oracle Standby as a source when using Binary Reader for CDC in Amazon DMS:

-

Use the Oracle master user to set up Binary Reader.

-

Make sure that the standby is an Oracle Active Data Guard Standby. Amazon DMS currently supports only this standby type.

After you do so, use the following procedure to use RDS for Oracle Standby as a source when using Binary Reader for CDC.

To configure an RDS for Oracle Standby as a source when using Binary Reader for CDC

-

Sign in to RDS for Oracle primary instance as the master user.

-

Run the following stored procedures as documented in the Amazon RDS User Guide to create the server level directories.

exec rdsadmin.rdsadmin_master_util.create_archivelog_dir;
exec rdsadmin.rdsadmin_master_util.create_onlinelog_dir;

-

Identify the directories created in step 2.

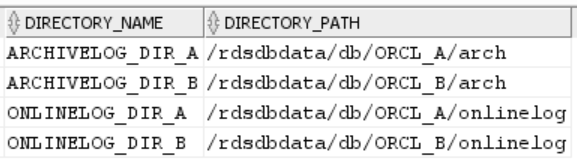

SELECT directory_name, directory_path FROM all_directories WHERE directory_name LIKE ( 'ARCHIVELOG_DIR_%' ) OR directory_name LIKE ( 'ONLINELOG_DIR_%' )For example, the preceding code displays a list of directories like the following.

-

Grant the Read privilege on the preceding directories to the Oracle user account that is used to access the Oracle Standby.

GRANT READ ON DIRECTORY ARCHIVELOG_DIR_A TO dms_user;
GRANT READ ON DIRECTORY ARCHIVELOG_DIR_B TO dms_user;
GRANT READ ON DIRECTORY ONLINELOG_DIR_A TO dms_user;
GRANT READ ON DIRECTORY ONLINELOG_DIR_B TO dms_user;

-

Perform an archive log switch on the primary instance. Doing this makes sure that the changes to ALL_DIRECTORIES are also ported to the Oracle Standby.

-

Run an ALL_DIRECTORIES query on the Oracle Standby to confirm that the changes were applied.

-

Create a source endpoint for the Oracle Standby by using the Amazon DMS Management Console or Amazon Command Line Interface (Amazon CLI). While creating the endpoint, specify the following extra connection attributes.

useLogminerReader=N;useBfile=Y;archivedLogDestId=1;additionalArchivedLogDestId=2

-

After creating the endpoint, use Test endpoint connection on the Create endpoint page of the console or the Amazon CLI test-connection command to verify that connectivity is established.

Limitations on using Oracle as a source for Amazon DMS

The following limitations apply when using an Oracle database as a source for Amazon DMS:

-

Amazon DMS supports Oracle Extended data types in Amazon DMS version 3.5.0 and higher.

-

Amazon DMS does not support long object names (over 30 bytes).

-

Amazon DMS does not support function-based indexes.

-

If you manage supplemental logging and carry out transformations on any of the columns, make sure that supplemental logging is activated for all fields and columns. For more information on setting up supplemental logging, see the following topics:

-

For a self-managed Oracle source database, see Setting up supplemental logging.

-

For an Amazon-managed Oracle source database, see Configuring an Amazon-managed Oracle source for Amazon DMS.

-

-

Amazon DMS does not support the multi-tenant container root database (CDB$ROOT). It does support a PDB using the Binary Reader.

-

Amazon DMS does not support deferred constraints.

-

In Amazon DMS version 3.5.1 and higher, secure LOBs are supported only by performing a LOB lookup.

-

Amazon DMS supports the rename table table-name to new-table-name syntax for all supported Oracle versions 11 and higher. This syntax isn't supported for any Oracle version 10 source databases.

-

Amazon DMS does not replicate results of the DDL statement ALTER TABLE ADD column data_type DEFAULT default_value. Instead of replicating default_value to the target, it sets the new column to NULL.

-

When using Amazon DMS version 3.4.7 or higher, to replicate changes that result from partition or subpartition operations, do the following before starting a DMS task.

-

Manually create the partitioned table structure (DDL).

-

Make sure the DDL is the same on both Oracle source and Oracle target.

-

Set the extra connection attribute enableHomogenousPartitionOps=true.

For more information about enableHomogenousPartitionOps, see Endpoint settings when using Oracle as a source for Amazon DMS. Also, note that on FULL+CDC tasks, DMS does not replicate data changes captured as part of the cached changes. In that use case, recreate the table structure on the Oracle target and reload the tables in question.

Prior to Amazon DMS version 3.4.7:

DMS does not replicate data changes that result from partition or subpartition operations (ADD, DROP, EXCHANGE, and TRUNCATE). Such updates might cause the following errors during replication:

-

For ADD operations, updates and deletes on the added data might raise a "0 rows affected" warning.

-

For DROP and TRUNCATE operations, new inserts might raise "duplicates" errors.

-

EXCHANGE operations might raise both a "0 rows affected" warning and "duplicates" errors.

To replicate changes that result from partition or subpartition operations, reload the tables in question. After adding a new empty partition, operations on the newly added partition are replicated to the target as normal.

-

-

Amazon DMS versions prior to 3.4 don't support data changes on the target that result from running the CREATE TABLE AS statement on the source. However, the new table is created on the target.

-

Amazon DMS does not capture changes made by the Oracle DBMS_REDEFINITION package, for example the table metadata and the OBJECT_ID field.

-

When Limited-size LOB mode is enabled, empty BLOB/CLOB columns on the Oracle source are replicated as NULL values. When Full LOB mode is enabled, they are replicated as an empty string (' ').

-

When capturing changes with Oracle 11 LogMiner, an update on a CLOB column with a string length greater than 1982 is lost, and the target is not updated.

-

During change data capture (CDC), Amazon DMS does not support batch updates to numeric columns defined as a primary key.

-

Amazon DMS does not support certain UPDATE commands. The following example is an unsupported UPDATE command.

UPDATE TEST_TABLE SET KEY=KEY+1;

Here, TEST_TABLE is the table name and KEY is a numeric column defined as a primary key.

-

Amazon DMS does not support full LOB mode for loading LONG and LONG RAW columns. Instead, you can use limited LOB mode for migrating these data types to an Oracle target. In limited LOB mode, Amazon DMS truncates to 64 KB any data in LONG or LONG RAW columns that is longer than 64 KB.

-

Amazon DMS does not support full LOB mode for loading XMLTYPE columns. Instead, you can use limited LOB mode for migrating XMLTYPE columns to an Oracle target. In limited LOB mode, DMS truncates any data larger than the user defined 'Maximum LOB size' variable. The maximum recommended value for 'Maximum LOB size' is 100MB.

-

Amazon DMS does not replicate tables whose names contain apostrophes.

-

Amazon DMS supports CDC from materialized views. But DMS does not support CDC from any other views.

-

Amazon DMS does not support CDC for index-organized tables with an overflow segment.

-

Amazon DMS does not support the Drop Partition operation for tables partitioned by reference with enableHomogenousPartitionOps set to true.

-

When you use Oracle LogMiner to access the redo logs, Amazon DMS has the following limitations:

-

For Oracle 12 only, Amazon DMS does not replicate any changes to LOB columns.

-

Amazon DMS does not support XA transactions in replication while using Oracle LogMiner.

-

Oracle LogMiner does not support connections to a pluggable database (PDB). To connect to a PDB, access the redo logs using Binary Reader.

-

SHRINK SPACE operations aren’t supported.

-

-

When you use Binary Reader, Amazon DMS has these limitations:

-

It does not support table clusters.

-

It supports only table-level SHRINK SPACE operations. This level includes the full table, partitions, and sub-partitions.

-

It does not support changes to index-organized tables with key compression.

-

It does not support implementing online redo logs on raw devices.

-

Binary Reader supports TDE only for self-managed Oracle databases since RDS for Oracle does not support wallet password retrieval for TDE encryption keys.

-

-

Amazon DMS does not support connections to an Amazon RDS Oracle source using an Oracle Automatic Storage Management (ASM) proxy.

-

Amazon DMS does not support virtual columns.

-

Amazon DMS does not support the ROWID data type or materialized views based on a ROWID column.

Amazon DMS has partial support for Oracle Materialized Views. For full-loads, DMS can do a full-load copy of an Oracle Materialized View. DMS copies the Materialized View as a base table to the target system and ignores any ROWID columns in the Materialized View. For ongoing replication (CDC), DMS tries to replicate changes to the Materialized View data but the results might not be ideal. Specifically, if the Materialized View is completely refreshed, DMS replicates individual deletes for all the rows, followed by individual inserts for all the rows. That is a very resource intensive exercise and might perform poorly for materialized views with large numbers of rows. For ongoing replication where the materialized views do a fast refresh, DMS tries to process and replicate the fast refresh data changes. In either case, DMS skips any ROWID columns in the materialized view.

-

Amazon DMS does not load or capture global temporary tables.

-

For S3 targets using replication, enable supplemental logging on every column so source row updates can capture every column value. An example follows:

alter table yourtablename add supplemental log data (all) columns;

-

An update for a row with a composite unique key that contains null can't be replicated at the target.

-

Amazon DMS does not support use of multiple Oracle TDE encryption keys on the same source endpoint. Each endpoint can have only one attribute for TDE encryption Key Name "securityDbEncryptionName", and one TDE password for this key.

-

When replicating from Amazon RDS for Oracle, TDE is supported only with encrypted tablespace and using Oracle LogMiner.

-

Amazon DMS does not support multiple table rename operations in quick succession.

-

When using Oracle 19.0 as source, Amazon DMS does not support the following features:

-

Data-guard DML redirect

-

Partitioned hybrid tables

-

Schema-only Oracle accounts

-

-

Amazon DMS does not support migration of tables or views of type BIN$ or DR$.

-

Beginning with Oracle 18.x, Amazon DMS does not support change data capture (CDC) from Oracle Express Edition (Oracle Database XE).

-

When migrating data from a CHAR column, DMS truncates any trailing spaces.

-

Amazon DMS does not support replication from application containers.

-

Amazon DMS does not support performing Oracle Flashback Database and restore points, as these operations affect the consistency of Oracle Redo Log files.

-

Prior to Amazon DMS version 3.5.3, the direct-load INSERT procedure with the parallel execution option is not supported in the following cases:

-

Uncompressed tables with more than 255 columns

-

Row size exceeds 8K

-

Exadata HCC tables

-

Database running on Big Endian platform

-

-

A source table with neither a primary key nor a unique key requires ALL COLUMN supplemental logging to be enabled. Doing so generates more redo log activity and might increase DMS CDC latency.

-

Amazon DMS does not migrate data from invisible columns in your source database. To include these columns in your migration scope, use the ALTER TABLE statement to make these columns visible.

-

For all Oracle versions, Amazon DMS does not replicate the result of UPDATE operations on XMLTYPE and LOB columns.

-

Amazon DMS does not support replication from tables with temporal validity constraints.

-

If the Oracle source becomes unavailable during a full load task, Amazon DMS might mark the task as completed after multiple reconnection attempts, even though the data migration remains incomplete. In this scenario, the target tables contain only the records migrated before the connection loss, potentially creating data inconsistencies between the source and target systems. To ensure data completeness, you must either restart the full load task entirely or reload the specific tables affected by the connection interruption.

SSL support for an Oracle endpoint

Amazon DMS Oracle endpoints support SSL V3 for the none and verify-ca SSL modes. To use SSL with an Oracle endpoint, upload the Oracle wallet for the endpoint instead of .pem certificate files.

Using an existing certificate for Oracle SSL

To use an existing Oracle client installation to create the Oracle wallet file from the CA certificate file, do the following steps.

To use an existing oracle client installation for Oracle SSL with Amazon DMS

-

Set the ORACLE_HOME system variable to the location of your dbhome_1 directory by running the following command.

prompt>export ORACLE_HOME=/home/user/app/user/product/12.1.0/dbhome_1

-

Append $ORACLE_HOME/lib to the LD_LIBRARY_PATH system variable.

prompt>export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$ORACLE_HOME/lib

-

Create a directory for the Oracle wallet at $ORACLE_HOME/ssl_wallet.

prompt>mkdir $ORACLE_HOME/ssl_wallet

-

Put the CA certificate .pem file in the ssl_wallet directory. If you use Amazon RDS, you can download the rds-ca-2015-root.pem root CA certificate file hosted by Amazon RDS. For more information about downloading this file, see Using SSL/TLS to encrypt a connection to a DB instance in the Amazon RDS User Guide.

-

If your CA certificate file contains more than one PEM certificate (like the Amazon RDS global or regional bundle), you must split it into separate certificates and add them to the Oracle wallet using the following bash script. This script requires two parameter inputs: the path to the CA certificate and the path to the folder of the previously created Oracle wallet.

#!/usr/bin/env bash
certnum=$(grep -c BEGIN <(cat $1))
cnt=0
temp_cert=""
while read line
do
    if [ -n "$temp_cert" -a "$line" == "-----BEGIN CERTIFICATE-----" ]
    then
        cnt=$(expr $cnt + 1)
        printf "\rImporting certificate # $cnt of $certnum"
        orapki wallet add -wallet "$2" -trusted_cert -cert <(echo -n "${temp_cert}") -auto_login_only 1>/dev/null 2>/dev/null
        temp_cert=""
    fi
    temp_cert+="$line"$'\n'
done < <(cat $1)
cnt=$(expr $cnt + 1)
printf "\rImporting certificate # $cnt of $certnum"
orapki wallet add -wallet "$2" -trusted_cert -cert <(echo -n "${temp_cert}") -auto_login_only 1>/dev/null 2>/dev/null
echo ""
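The splitting step in the script above can also be checked on its own before anything is imported into the wallet. The following Python sketch covers only that step: it separates a PEM bundle into individual certificate blocks so that you can count and inspect them. The function name split_pem_bundle is hypothetical. Unlike the bash script, which cuts at each BEGIN line, this variation matches complete BEGIN/END blocks. It does not call orapki.

```python
import re
import sys

# Matches one complete PEM certificate block, including its BEGIN and END lines.
PEM_BLOCK = re.compile(
    r"-----BEGIN CERTIFICATE-----.*?-----END CERTIFICATE-----",
    re.DOTALL,
)


def split_pem_bundle(bundle_text):
    """Return each certificate in the bundle as its own PEM string."""
    return [match.group(0) + "\n" for match in PEM_BLOCK.finditer(bundle_text)]


if __name__ == "__main__":
    # Usage: python split_bundle.py path/to/bundle.pem
    with open(sys.argv[1], encoding="ascii") as handle:
        certificates = split_pem_bundle(handle.read())
    print(f"Found {len(certificates)} certificate(s) in the bundle")
```

Comparing the reported count with the number of certificates that you expect in the bundle is a quick sanity check before running the import.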

When you have completed the previous steps, you can import the wallet file with the ImportCertificate API call by specifying the certificate-wallet parameter. You can then use the imported wallet certificate when you select verify-ca as the SSL mode when creating or modifying your Oracle endpoint.

Note

Oracle wallets are binary files. Amazon DMS accepts these files as-is.

Using a self-signed certificate for Oracle SSL

To use a self-signed certificate for Oracle SSL, do the following steps, assuming an Oracle wallet password of oracle123.

To use a self-signed certificate for Oracle SSL with Amazon DMS

-

Create a directory you will use to work with the self-signed certificate.

mkdir -p /u01/app/oracle/self_signed_cert

-

Change into the directory you created in the previous step.

cd /u01/app/oracle/self_signed_cert

-

Create a root key.

openssl genrsa -out self-rootCA.key 2048

-

Self-sign a root certificate using the root key you created in the previous step.

openssl req -x509 -new -nodes -key self-rootCA.key -sha256 -days 3650 -out self-rootCA.pem

Use input parameters like the following.

-

Country Name (2 letter code) [XX], for example: AU

-

State or Province Name (full name) [], for example: NSW

-

Locality Name (e.g., city) [Default City], for example: Sydney

-

Organization Name (e.g., company) [Default Company Ltd], for example: AmazonWebService

-

Organizational Unit Name (e.g., section) [], for example: DBeng

-

Common Name (e.g., your name or your server's hostname) [], for example: aws

-

Email Address [], for example: abcd.efgh@amazonwebservice.com

-

-

Create an Oracle wallet directory for the Oracle database.

mkdir -p /u01/app/oracle/wallet

-

Create a new Oracle wallet.

orapki wallet create -wallet "/u01/app/oracle/wallet" -pwd oracle123 -auto_login_local

-

Add the root certificate to the Oracle wallet.

orapki wallet add -wallet "/u01/app/oracle/wallet" -pwd oracle123 -trusted_cert -cert /u01/app/oracle/self_signed_cert/self-rootCA.pem

-

List the contents of the Oracle wallet. The list should include the root certificate.

orapki wallet display -wallet /u01/app/oracle/wallet -pwd oracle123

For example, this might display similar to the following.

Requested Certificates:
User Certificates:
Trusted Certificates:
Subject: CN=aws,OU=DBeng,O=AmazonWebService,L=Sydney,ST=NSW,C=AU

-

Generate the Certificate Signing Request (CSR) using the ORAPKI utility.

orapki wallet add -wallet "/u01/app/oracle/wallet" -pwd oracle123 -dn "CN=aws" -keysize 2048 -sign_alg sha256

-

Run the following command.

openssl pkcs12 -in /u01/app/oracle/wallet/ewallet.p12 -nodes -out /u01/app/oracle/wallet/nonoracle_wallet.pem

This has output like the following.

Enter Import Password:
MAC verified OK
Warning unsupported bag type: secretBag

-

Put 'dms' as the common name.

openssl req -new -key /u01/app/oracle/wallet/nonoracle_wallet.pem -out certdms.csr

Use input parameters like the following.

-

Country Name (2 letter code) [XX], for example: AU

-

State or Province Name (full name) [], for example: NSW

-

Locality Name (e.g., city) [Default City], for example: Sydney

-

Organization Name (e.g., company) [Default Company Ltd], for example: AmazonWebService

-

Organizational Unit Name (e.g., section) [], for example: aws

-

Common Name (e.g., your name or your server's hostname) [], for example: aws

-

Email Address [], for example: abcd.efgh@amazonwebservice.com

Make sure this is not the same as in step 4. You can do this, for example, by changing Organizational Unit Name to a different name as shown.

Enter the following additional attributes to be sent with your certificate request.

-

A challenge password [], for example: oracle123

-

An optional company name [], for example: aws

-

-

Get the certificate signature.

openssl req -noout -text -in certdms.csr | grep -i signature

The signature algorithm in this example is sha256WithRSAEncryption.

-

Run the following command to generate the certificate (.crt) file.

openssl x509 -req -in certdms.csr -CA self-rootCA.pem -CAkey self-rootCA.key -CAcreateserial -out certdms.crt -days 365 -sha256

This displays output like the following.

Signature ok
subject=/C=AU/ST=NSW/L=Sydney/O=awsweb/OU=DBeng/CN=aws
Getting CA Private Key

-

Add the certificate to the wallet.

orapki wallet add -wallet /u01/app/oracle/wallet -pwd oracle123 -user_cert -cert certdms.crt

-

View the wallet. It should have two entries. See the following code.

orapki wallet display -wallet /u01/app/oracle/wallet -pwd oracle123

-

Configure the sqlnet.ora file ($ORACLE_HOME/network/admin/sqlnet.ora).

WALLET_LOCATION =
  (SOURCE =
    (METHOD = FILE)
    (METHOD_DATA =
      (DIRECTORY = /u01/app/oracle/wallet/)
    )
  )

SQLNET.AUTHENTICATION_SERVICES = (NONE)
SSL_VERSION = 1.0
SSL_CLIENT_AUTHENTICATION = FALSE
SSL_CIPHER_SUITES = (SSL_RSA_WITH_AES_256_CBC_SHA)

-

Stop the Oracle listener.

lsnrctl stop

-

Add entries for SSL in the listener.ora file ($ORACLE_HOME/network/admin/listener.ora).

SSL_CLIENT_AUTHENTICATION = FALSE

WALLET_LOCATION =
  (SOURCE =
    (METHOD = FILE)
    (METHOD_DATA =
      (DIRECTORY = /u01/app/oracle/wallet/)
    )
  )

SID_LIST_LISTENER =
  (SID_LIST =
    (SID_DESC =
      (GLOBAL_DBNAME = SID)
      (ORACLE_HOME = ORACLE_HOME)
      (SID_NAME = SID)
    )
  )

LISTENER =
  (DESCRIPTION_LIST =
    (DESCRIPTION =
      (ADDRESS = (PROTOCOL = TCP)(HOST = localhost.localdomain)(PORT = 1521))
      (ADDRESS = (PROTOCOL = TCPS)(HOST = localhost.localdomain)(PORT = 1522))
      (ADDRESS = (PROTOCOL = IPC)(KEY = EXTPROC1521))
    )
  )

-

Configure the tnsnames.ora file ($ORACLE_HOME/network/admin/tnsnames.ora).

<SID>=
(DESCRIPTION=
  (ADDRESS_LIST =
    (ADDRESS=(PROTOCOL = TCP)(HOST = localhost.localdomain)(PORT = 1521))
  )
  (CONNECT_DATA =
    (SERVER = DEDICATED)
    (SERVICE_NAME = <SID>)
  )
)

<SID>_ssl=
(DESCRIPTION=
  (ADDRESS_LIST =
    (ADDRESS=(PROTOCOL = TCPS)(HOST = localhost.localdomain)(PORT = 1522))
  )
  (CONNECT_DATA =
    (SERVER = DEDICATED)
    (SERVICE_NAME = <SID>)
  )
)

-

Restart the Oracle listener.

lsnrctl start

-

Show the Oracle listener status.

lsnrctl status

-

Test the SSL connection to the database from localhost using sqlplus and the SSL tnsnames entry.

sqlplus -L ORACLE_USER@SID_ssl

-

Verify that you successfully connected using SSL.

SELECT SYS_CONTEXT('USERENV', 'network_protocol') FROM DUAL;

SYS_CONTEXT('USERENV','NETWORK_PROTOCOL')
--------------------------------------------------------------------------------
tcps

-

Change directory to the directory with the self-signed certificate.

cd /u01/app/oracle/self_signed_cert

-

Create a new client Oracle wallet for Amazon DMS to use.

orapki wallet create -wallet ./ -auto_login_only

-

Add the self-signed root certificate to the Oracle wallet.

orapki wallet add -wallet ./ -trusted_cert -cert self-rootCA.pem -auto_login_only

-

List the contents of the Oracle wallet for Amazon DMS to use. The list should include the self-signed root certificate.

orapki wallet display -wallet ./

This has output like the following.

Trusted Certificates:
Subject: CN=aws,OU=DBeng,O=AmazonWebService,L=Sydney,ST=NSW,C=AU

-

Upload the Oracle wallet that you just created to Amazon DMS.

Supported encryption methods for using Oracle as a source for Amazon DMS

In the following table, you can find the transparent data encryption (TDE) methods that Amazon DMS supports when working with an Oracle source database.

| Redo logs access method | TDE tablespace | TDE column |

|---|---|---|

| Oracle LogMiner | Yes | Yes |

| Binary Reader | Yes | Yes |

Amazon DMS supports Oracle TDE when using Binary Reader, on both the column level and the tablespace level. To use TDE encryption with Amazon DMS, first identify the Oracle wallet location where the TDE encryption key and TDE password are stored. Then identify the correct TDE encryption key and password for your Oracle source endpoint.

To identify and specify encryption key and password for TDE encryption

-

Run the following query to find the Oracle encryption wallet on the Oracle database host.

SQL> SELECT WRL_PARAMETER FROM V$ENCRYPTION_WALLET;

WRL_PARAMETER
--------------------------------------------------------------------------------
/u01/oracle/product/12.2.0/dbhome_1/data/wallet/

Here, /u01/oracle/product/12.2.0/dbhome_1/data/wallet/ is the wallet location.

-

Get the master key ID for either Non-CDB or CDB source as follows:

-

For a non-CDB source, run the following query to retrieve the master encryption key ID:

SQL> select rownum, key_id, activation_time from v$encryption_keys;

ROWNUM KEY_ID ACTIVATION_TIME
------ ------------------------------------------------------ ---------------
1 AeKask0XZU+NvysflCYBEVwAAAAAAAAAAAAAAAAAAAAAAAAAAAAA 04-SEP-24 10.20.56.605200 PM +00:00
2 AV7WU9uhoU8rv8daE/HNnSwAAAAAAAAAAAAAAAAAAAAAAAAAAAAA 10-AUG-21 07.52.03.966362 PM +00:00
3 AckpoJ/f+k8xvzJ+gSuoVH4AAAAAAAAAAAAAAAAAAAAAAAAAAAAA 14-SEP-20 09.26.29.048870 PM +00:00

Activation time is useful if you plan to start CDC from some point in the past. For example, using the above results, you can start CDC from some point between 10-AUG-21 07.52.03 PM and 14-SEP-20 09.26.29 PM using the master key ID in ROWNUM 2. When the task reaches the redo generated on or after 14-SEP-20 09.26.29 PM, it fails. You must then modify the source endpoint, provide the master key ID in ROWNUM 3, and resume the task.

-

For a CDB source, DMS requires the CDB$ROOT master encryption key. Connect to CDB$ROOT and run the following query:

SQL> select rownum, key_id, activation_time from v$encryption_keys where con_id = 1;

ROWNUM KEY_ID ACTIVATION_TIME
------ ---------------------------------------------------- -----------------------------------
1 Aa2E/Vwb5U+zv5hCncS5ErMAAAAAAAAAAAAAAAAAAAAAAAAAAAAA 29-AUG-24 12.51.19.699060 AM +00:00

-

-

From the command line, list the encryption wallet entries on the source Oracle database host.

$ mkstore -wrl /u01/oracle/product/12.2.0/dbhome_1/data/wallet/ -list

Oracle Secret Store entries:
ORACLE.SECURITY.DB.ENCRYPTION.AWGDC9glSk8Xv+3bVveiVSgAAAAAAAAAAAAAAAAAAAAAAAAAAAAA
ORACLE.SECURITY.DB.ENCRYPTION.AY1mRA8OXU9Qvzo3idU4OH4AAAAAAAAAAAAAAAAAAAAAAAAAAAAA
ORACLE.SECURITY.DB.ENCRYPTION.MASTERKEY
ORACLE.SECURITY.ID.ENCRYPTION.
ORACLE.SECURITY.KB.ENCRYPTION.
ORACLE.SECURITY.KM.ENCRYPTION.AY1mRA8OXU9Qvzo3idU4OH4AAAAAAAAAAAAAAAAAAAAAAAAAAAAA

Find the entry containing the master key ID that you found in step 2 (AWGDC9glSk8Xv+3bVveiVSg). This entry is the TDE encryption key name.

-

View the details of the entry that you found in the previous step.

$ mkstore -wrl /u01/oracle/product/12.2.0/dbhome_1/data/wallet/ -viewEntry ORACLE.SECURITY.DB.ENCRYPTION.AWGDC9glSk8Xv+3bVveiVSgAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

Oracle Secret Store Tool : Version 12.2.0.1.0
Copyright (c) 2004, 2016, Oracle and/or its affiliates. All rights reserved.

Enter wallet password:
ORACLE.SECURITY.DB.ENCRYPTION.AWGDC9glSk8Xv+3bVveiVSgAAAAAAAAAAAAAAAAAAAAAAAAAAAAA = AEMAASAASGYs0phWHfNt9J5mEMkkegGFiD4LLfQszDojgDzbfoYDEACv0x3pJC+UGD/PdtE2jLIcBQcAeHgJChQGLA==

Enter the wallet password to see the result.

Here, the value to the right of '=' is the TDE password.

-

Specify the TDE encryption key name for the Oracle source endpoint by setting the securityDbEncryptionName extra connection attribute.

securityDbEncryptionName=ORACLE.SECURITY.DB.ENCRYPTION.AWGDC9glSk8Xv+3bVveiVSgAAAAAAAAAAAAAAAAAAAAAAAAAAAAA

-

Provide the associated TDE password for this key on the console as part of the Oracle source's Password value. Use the following order to format the comma-separated password values, ended by the TDE password value.

Oracle_db_password,ASM_Password,AEMAASAASGYs0phWHfNt9J5mEMkkegGFiD4LLfQszDojgDzbfoYDEACv0x3pJC+UGD/PdtE2jLIcBQcAeHgJChQGLA==

Specify the password values in this order regardless of your Oracle database configuration. For example, if you're using TDE but your Oracle database isn't using ASM, specify password values in the following comma-separated order.

Oracle_db_password,,AEMAASAASGYs0phWHfNt9J5mEMkkegGFiD4LLfQszDojgDzbfoYDEACv0x3pJC+UGD/PdtE2jLIcBQcAeHgJChQGLA==
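The ordering rule above can be sketched in a few lines of Python. This is an illustrative helper, not part of Amazon DMS: the function names are hypothetical, and the comma check is an assumption of this sketch (the source does not describe any escaping rule for commas inside a password).

```python
def build_dms_oracle_password(db_password: str, tde_password: str,
                              asm_password: str = "") -> str:
    """Join the values in the fixed order: database, ASM, TDE.

    An unused ASM slot is left empty, so the result still has two commas.
    """
    for label, value in (("db_password", db_password),
                         ("asm_password", asm_password),
                         ("tde_password", tde_password)):
        # Assumption: a comma inside a value would be ambiguous, so reject it.
        if "," in value:
            raise ValueError(f"{label} must not contain a comma")
    return ",".join([db_password, asm_password, tde_password])


def build_tde_attribute(entry_name: str) -> str:
    """Format the securityDbEncryptionName extra connection attribute."""
    return f"securityDbEncryptionName={entry_name}"


if __name__ == "__main__":
    # Placeholder values only; never hard-code real credentials.
    print(build_dms_oracle_password("Oracle_db_password", "TDE_password"))
    print(build_tde_attribute("ORACLE.SECURITY.DB.ENCRYPTION.example"))
```

Keeping the ASM slot as an explicit empty string makes it harder to drop the second comma by accident when ASM isn't in use.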

If the TDE credentials you specify are incorrect, the Amazon DMS migration task does not fail. However, the task also does not read or apply ongoing replication changes to the target database. After starting the task, monitor Table statistics on the console migration task page to make sure changes are replicated.

If a DBA changes the TDE credential values for the Oracle database while the task is running, the task fails. The error message contains the new TDE encryption key name. To specify new values and restart the task, use the preceding procedure.
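When you repeat the procedure, matching the master key ID from step 2 against the wallet listing from step 3 is easy to get wrong by eye. The following Python sketch shows one way to do that lookup on captured `mkstore -list` output. The function name and the exact matching rule (the key ID must directly follow the `ORACLE.SECURITY.DB.ENCRYPTION.` prefix) are assumptions of this example, not documented Amazon DMS or Oracle behavior.

```python
PREFIX = "ORACLE.SECURITY.DB.ENCRYPTION."


def find_tde_entry(mkstore_list_output: str, master_key_id: str) -> str:
    """Return the single wallet entry whose name carries the master key ID."""
    if not master_key_id:
        raise ValueError("master_key_id must not be empty")
    matches = [
        token
        for token in mkstore_list_output.split()  # entries contain no spaces
        if token.startswith(PREFIX)
        and token[len(PREFIX):].startswith(master_key_id)
    ]
    if len(matches) != 1:
        raise LookupError(
            f"expected exactly one entry for {master_key_id!r}, "
            f"found {len(matches)}"
        )
    return matches[0]
```

The returned string is the value to use for securityDbEncryptionName. Raising an error when zero or several entries match avoids silently picking the wrong key.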

Important

You can’t manipulate a TDE wallet created in an Oracle Automatic Storage Management (ASM) location because OS level commands like cp, mv, orapki, and mkstore corrupt the wallet files stored in an ASM location. This restriction applies only to TDE wallet files stored in an ASM location, not to TDE wallet files stored in a local OS directory.

To manipulate a TDE wallet stored in ASM with OS level commands, create a local keystore and merge the ASM keystore into the local keystore as follows:

-

Create a local keystore.

ADMINISTER KEY MANAGEMENT create keystore file system wallet location identified by wallet password;

-

Merge the ASM keystore into the local keystore.

ADMINISTER KEY MANAGEMENT merge keystore ASM wallet location identified by wallet password into existing keystore file system wallet location identified by wallet password with backup;

Then, to list the encryption wallet entries and TDE password, run steps 3 and 4 against the local keystore.

Supported compression methods for using Oracle as a source for Amazon DMS

In the following table, you can find which compression methods Amazon DMS supports when working with an Oracle source database. As the table shows, compression support depends both on your Oracle database version and whether DMS is configured to use Oracle LogMiner to access the redo logs.

| Version | Basic | OLTP | HCC (from Oracle 11g R2 or newer) | Others |

|---|---|---|---|---|

| Oracle 10 | No | N/A | N/A | No |

| Oracle 11 or newer – Oracle LogMiner | Yes | Yes | Yes | Yes – Any compression method supported by Oracle LogMiner. |

| Oracle 11 or newer – Binary Reader | Yes | Yes | Yes – For more information, see the following note. | Yes |

Note

When the Oracle source endpoint is configured to use Binary Reader, the Query Low level of the HCC compression method is supported for full-load tasks only.

Replicating nested tables using Oracle as a source for Amazon DMS

Amazon DMS supports the replication of Oracle tables containing columns that are nested tables or defined types. To enable this functionality, add the following extra connection attribute setting to the Oracle source endpoint.

allowSelectNestedTables=true;

Amazon DMS creates the target tables from Oracle nested tables as regular parent and child tables on the target without a unique constraint. To access the correct data on the target, join the parent and child tables. To do this, first manually create a nonunique index on the NESTED_TABLE_ID column in the target child table. You can then use the NESTED_TABLE_ID column in the join ON clause together with the parent column that corresponds to the child table name. In addition, creating such an index improves performance when the target child table data is updated or deleted by Amazon DMS. For an example, see Example join for parent and child tables on the target.

We recommend that you configure the task to stop after a full load completes. Then, create these nonunique indexes for all the replicated child tables on the target and resume the task.

If a captured nested table is added to an existing parent table (captured or not captured), Amazon DMS handles it correctly. However, the nonunique index for the corresponding target table isn't created. In this case, if the target child table becomes extremely large, performance might be affected. In such a case, we recommend that you stop the task, create the index, then resume the task.

After the nested tables are replicated to the target, have the DBA run a join on the parent and corresponding child tables to flatten the data.

Prerequisites for replicating Oracle nested tables as a source

Ensure that you replicate parent tables for all the replicated nested tables. Include both the parent tables (the tables containing the nested table column) and the child (that is, nested) tables in the Amazon DMS table mappings.

Supported Oracle nested table types as a source

Amazon DMS supports the following Oracle nested table types as a source:

-

Data type

-

User defined object

Limitations of Amazon DMS support for Oracle nested tables as a source

Amazon DMS has the following limitations in its support of Oracle nested tables as a source:

-

Amazon DMS supports only one level of table nesting.

-

Amazon DMS table mapping does not check that both the parent and child table or tables are selected for replication. That is, it's possible to select a parent table without a child or a child table without a parent.

How Amazon DMS replicates Oracle nested tables as a source

Amazon DMS replicates parent and nested tables to the target as follows:

-

Amazon DMS creates the parent table identical to the source. It then defines the nested column in the parent as RAW(16) and includes a reference to the parent's nested tables in its NESTED_TABLE_ID column.

-

Amazon DMS creates the child table identical to the nested source, but with an additional column named NESTED_TABLE_ID. This column has the same type and value as the corresponding parent nested column and has the same meaning.

Example join for parent and child tables on the target

To flatten the parent table, run a join between the parent and child tables, as shown in the following example:

-

Create the Type table.

CREATE OR REPLACE TYPE NESTED_TEST_T AS TABLE OF VARCHAR(50);

-

Create the parent table with a column of type NESTED_TEST_T as defined preceding.

CREATE TABLE NESTED_PARENT_TEST (ID NUMBER(10,0) PRIMARY KEY, NAME NESTED_TEST_T) NESTED TABLE NAME STORE AS NAME_KEY;

-

Flatten the table NESTED_PARENT_TEST using a join with the NAME_KEY child table where CHILD.NESTED_TABLE_ID matches PARENT.NAME.

SELECT … FROM NESTED_PARENT_TEST PARENT, NAME_KEY CHILD WHERE CHILD.NESTED_TABLE_ID = PARENT.NAME;
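To make the shape of the flattened result concrete, the following Python sketch reproduces the parent and child layout in an in-memory SQLite database and runs the same join. SQLite stands in for the real target only for illustration. The COLUMN_VALUE column name, the index name, and the sample rows are assumptions of this example, and the 16-byte values merely imitate the RAW(16) references that Amazon DMS writes.

```python
import sqlite3


def flatten_demo():
    """Build a toy parent/child pair and flatten it with a join."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE NESTED_PARENT_TEST (ID INTEGER PRIMARY KEY, NAME BLOB);
        CREATE TABLE NAME_KEY (NESTED_TABLE_ID BLOB, COLUMN_VALUE TEXT);
        -- Nonunique index on the join column, created manually on the target.
        CREATE INDEX NAME_KEY_NTID_IDX ON NAME_KEY (NESTED_TABLE_ID);
        """
    )
    ref_1 = bytes(15) + b"\x01"  # stand-in for a RAW(16) reference
    ref_2 = bytes(15) + b"\x02"
    conn.executemany(
        "INSERT INTO NESTED_PARENT_TEST (ID, NAME) VALUES (?, ?)",
        [(1, ref_1), (2, ref_2)],
    )
    conn.executemany(
        "INSERT INTO NAME_KEY (NESTED_TABLE_ID, COLUMN_VALUE) VALUES (?, ?)",
        [(ref_1, "alice"), (ref_1, "bob"), (ref_2, "carol")],
    )
    rows = conn.execute(
        """
        SELECT PARENT.ID, CHILD.COLUMN_VALUE
        FROM NESTED_PARENT_TEST PARENT
        JOIN NAME_KEY CHILD ON CHILD.NESTED_TABLE_ID = PARENT.NAME
        ORDER BY PARENT.ID, CHILD.COLUMN_VALUE
        """
    ).fetchall()
    conn.close()
    return rows


if __name__ == "__main__":
    for row in flatten_demo():
        print(row)
```

Each parent row appears once per matching child row, which is the flattened view the join is meant to produce.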

Storing REDO on Oracle ASM when using Oracle as a source for Amazon DMS

For Oracle sources with high REDO generation, storing REDO on Oracle ASM can benefit performance, especially in a RAC configuration since you can configure DMS to distribute ASM REDO reads across all ASM nodes.

To use this configuration, set the asmServer connection attribute. For example, the following connection string distributes DMS REDO reads across 3 ASM nodes:

asmServer=(DESCRIPTION=(CONNECT_TIMEOUT=8)(ENABLE=BROKEN)(LOAD_BALANCE=ON)(FAILOVER=ON) (ADDRESS_LIST= (ADDRESS=(PROTOCOL=tcp)(HOST=asm_node1_ip_address)(PORT=asm_node1_port_number)) (ADDRESS=(PROTOCOL=tcp)(HOST=asm_node2_ip_address)(PORT=asm_node2_port_number)) (ADDRESS=(PROTOCOL=tcp)(HOST=asm_node3_ip_address)(PORT=asm_node3_port_number))) (CONNECT_DATA=(SERVICE_NAME=+ASM)))
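Because the descriptor is long and parenthesis-heavy, it can help to generate it instead of typing it by hand. The following Python sketch assembles a string with the same structure as the example above from a list of host and port pairs. The function name and its parameters are hypothetical, and the hosts and ports in the usage lines are placeholders.

```python
def build_asm_server(nodes, service_name="+ASM", connect_timeout=8):
    """Assemble an asmServer value that lists every ASM node as an ADDRESS.

    nodes is a sequence of (host, port) pairs.
    """
    if not nodes:
        raise ValueError("at least one ASM node is required")
    addresses = "".join(
        f"(ADDRESS=(PROTOCOL=tcp)(HOST={host})(PORT={port}))"
        for host, port in nodes
    )
    return (
        "asmServer=(DESCRIPTION="
        f"(CONNECT_TIMEOUT={connect_timeout})(ENABLE=BROKEN)"
        "(LOAD_BALANCE=ON)(FAILOVER=ON)"
        f"(ADDRESS_LIST={addresses})"
        f"(CONNECT_DATA=(SERVICE_NAME={service_name})))"
    )


if __name__ == "__main__":
    # Placeholder addresses; substitute your own ASM node hosts and ports.
    value = build_asm_server(
        [("10.0.0.1", 1521), ("10.0.0.2", 1521), ("10.0.0.3", 1521)]
    )
    assert value.count("(") == value.count(")")  # quick balance check
    print(value)
```

Generating the value keeps the parentheses balanced when nodes are added or removed.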

When using NFS to store Oracle REDO, make sure that applicable DNFS (direct NFS) client patches are applied, specifically any patch addressing Oracle bug 25224242. For additional information, review the Oracle publication on Direct NFS client patches, Recommended Patches for Direct NFS Client.

Additionally, to improve NFS read performance, we recommend that you increase the value of rsize and wsize in fstab for the NFS volume, as shown in the following example.

NAS_name_here:/ora_DATA1_archive /u09/oradata/DATA1 nfs rw,bg,hard,nointr,tcp,nfsvers=3,_netdev,timeo=600,rsize=262144,wsize=262144
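A quick way to confirm that an fstab entry carries the intended sizes is to parse its options field. The following Python sketch does that for a single line. The function name is hypothetical, and it assumes the usual whitespace-separated fstab layout with the mount options in the fourth field and no spaces inside the option list.

```python
def nfs_transfer_sizes(fstab_line: str) -> dict:
    """Return the rsize and wsize values found in one fstab entry."""
    fields = fstab_line.split()
    if len(fields) < 4:
        raise ValueError("expected at least four whitespace-separated fields")
    sizes = {}
    for option in fields[3].split(","):
        key, sep, value = option.partition("=")
        if sep and key in ("rsize", "wsize"):
            sizes[key] = int(value)
    return sizes


if __name__ == "__main__":
    line = (
        "NAS_name_here:/ora_DATA1_archive /u09/oradata/DATA1 nfs "
        "rw,bg,hard,nointr,tcp,nfsvers=3,_netdev,timeo=600,"
        "rsize=262144,wsize=262144"
    )
    print(nfs_transfer_sizes(line))
```

A missing key in the returned dictionary means the entry doesn't set that option at all.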

Also, adjust the tcp-max-xfer-size value as follows:

vserver nfs modify -vserver vserver -tcp-max-xfer-size 262144