本文属于机器翻译版本。若本译文内容与英语原文存在差异,则一律以英文原文为准。

Amazon HyperPod 基本命令指南

Amazon Amazon SageMaker HyperPod 为管理培训工作流程提供了广泛的命令行功能。本指南涵盖了从连接到集群到监控任务进度等常见操作的基本命令。

先决条件

在使用这些命令之前,请确保已完成以下设置:

-

HyperPod 已创建 RIG 的集群(通常在 us-east-1 中)

-

为训练项目创建的输出 Amazon S3 存储桶

-

配置了相应权限的 IAM 角色

-

以正确的 JSONL 格式上传训练数据

-

FSx Lustre 同步已完成(第一次作业时在集群日志中进行验证)

安装配方 CLI

在运行安装命令之前,请导航到配方存储库的根目录。

如果使用非 Forge 自定义技术,请使用 SageMaker HyperPodrecipes 存储库;对于基于 Forge 的自定义,请参阅特定于 Forge 的配方存储库。

运行以下命令来安装 HyperPod CLI:

注意

确保你不在活跃的 conda/anaconda /miniconda 环境或其他虚拟环境中

如果你是,请使用以下命令退出环境:

-

conda deactivate适用于 conda/anaconda /miniconda 环境 -

deactivate适用于 python 虚拟环境

如果您使用的是 Non Forge 自定义技术,请按 sagemaker-hyperpod-recipes如下所示下载:

git clone -b release_v2 https://github.com/aws/sagemaker-hyperpod-cli.git cd sagemaker-hyperpod-cli pip install -e . cd .. root_dir=$(pwd) export PYTHONPATH=${root_dir}/sagemaker-hyperpod-cli/src/hyperpod_cli/sagemaker_hyperpod_recipes/launcher/nemo/nemo_framework_launcher/launcher_scripts:$PYTHONPATH curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 chmod 700 get_helm.sh ./get_helm.sh rm -f ./get_helm.sh

如果你是 Forge 订阅者,你应该使用下面提到的流程下载食谱。

mkdir NovaForgeHyperpodCLI cd NovaForgeHyperpodCLI aws s3 cp s3://nova-forge-c7363-206080352451-us-east-1/v1/ ./ --recursive pip install -e . curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 chmod 700 get_helm.sh ./get_helm.sh rm -f ./get_helm.sh

提示

要在运行之前使用新的虚拟环境pip install -e .,请运行:

-

python -m venv nova_forge -

source nova_forge/bin/activate -

你的命令行现在将在提示符的开头显示 (nova_forge)

-

这样可以确保在使用 CLI 时没有相互竞争的依赖关系

目的:我们为什么要这样做pip install -e .?

此命令以可编辑模式安装 HyperPod CLI,允许您使用更新的配方,而无需每次都重新安装。它还使您能够添加新配方,CLI 可以自动获取这些食谱。

连接到集群

在运行任何作业之前,请将 HyperPod CLI 连接到您的集群:

export AWS_REGION=us-east-1 && hyperpod connect-cluster --cluster-name <your-cluster-name> --region us-east-1

重要

此命令创建后续命令所需的上下文文件 (/tmp/hyperpod_context.json)。如果您看到有关未找到此文件的错误,请重新运行 connect 命令。

专业提示:您可以进一步将集群配置为始终使用kubeflow命名空间,方法是在命令中添加--namespace

kubeflow参数,如下所示:

export AWS_REGION=us-east-1 && \ hyperpod connect-cluster \ --cluster-name <your-cluster-name> \ --region us-east-1 \ --namespace kubeflow

这样可以省去在与作业交互时-n kubeflow在每个命令中添加的精力。

开始训练作业

注意

如果正在运行 PPO/RFT 作业,请确保向中添加标签选择器设置,src/hyperpod_cli/sagemaker_hyperpod_recipes/recipes_collection/cluster/k8s.yaml以便所有 Pod 都调度在同一个节点上。

label_selector: required: sagemaker.amazonaws.com/instance-group-name: - <rig_group>

使用带有可选参数覆盖的配方启动训练作业:

hyperpod start-job -n kubeflow \ --recipe fine-tuning/nova/nova_1_0/nova_micro/SFT/nova_micro_1_0_p5_p4d_gpu_lora_sft \ --override-parameters '{ "instance_type": "ml.p5.48xlarge", "container": "708977205387.dkr.ecr.us-east-1.amazonaws.com/nova-fine-tune-repo:SM-HP-SFT-latest" }'

预期输出:

Final command: python3 <path_to_your_installation>/NovaForgeHyperpodCLI/src/hyperpod_cli/sagemaker_hyperpod_recipes/main.py recipes=fine-tuning/nova/nova_micro_p5_gpu_sft cluster_type=k8s cluster=k8s base_results_dir=/local/home/<username>/results cluster.pullPolicy="IfNotPresent" cluster.restartPolicy="OnFailure" cluster.namespace="kubeflow" container="708977205387.dkr.ecr.us-east-1.amazonaws.com/nova-fine-tune-repo:HP-SFT-DATAMIX-latest" Prepared output directory at /local/home/<username>/results/<job-name>/k8s_templates Found credentials in shared credentials file: ~/.aws/credentials Helm script created at /local/home/<username>/results/<job-name>/<job-name>_launch.sh Running Helm script: /local/home/<username>/results/<job-name>/<job-name>_launch.sh NAME: <job-name> LAST DEPLOYED: Mon Sep 15 20:56:50 2025 NAMESPACE: kubeflow STATUS: deployed REVISION: 1 TEST SUITE: None Launcher successfully generated: <path_to_your_installation>/NovaForgeHyperpodCLI/src/hyperpod_cli/sagemaker_hyperpod_recipes/launcher/nova/k8s_templates/SFT { "Console URL": "https://us-east-1.console.aws.amazon.com/sagemaker/home?region=us-east-1#/cluster-management/<your-cluster-name>" }

检查作业状态

使用 kubectl 监控你正在运行的作业:

kubectl get pods -o wide -w -n kubeflow | (head -n1 ; grep <your-job-name>)

了解 Pod 状态

下表说明了常见的 pod 状态:

Status |

说明 |

|---|---|

|

Pod 已接受但尚未调度到节点上,或者正在等待拉取容器镜像 |

|

Pod 绑定到至少有一个容器正在运行或正在启动的节点 |

|

所有容器都成功完成且无法重启 |

|

所有容器都已终止,至少有一个容器以失败告终 |

|

无法确定 Pod 状态(通常是由于节点通信问题所致) |

|

容器反复失败;Kubernetes 从重启尝试中退缩 |

|

无法从注册表中提取容器镜像 |

|

容器因超出内存限制而终止 |

|

Job 或 Pod 成功完成(批处理作业完成) |

提示

使用该-w标志实时观看 pod 状态更新。按下Ctrl+C可停止观看。

监控作业日志

您可以通过以下三种方式之一查看日志:

创建 MLflow应用程序

Amazon CLI命令示例

aws sagemaker-mlflow create-mlflow-app \ --name <app-name> \ --artifact-store-uri <s3-bucket-name> \ --role-arn <role-arn> \ --region <region-name>

输出示例

{ "Arn": "arn:aws:sagemaker:us-east-1:111122223333:mlflow-app/app-LGZEOZ2UY4NZ" }

生成预签名 URL

Amazon CLI命令示例

aws sagemaker-mlflow create-presigned-mlflow-app-url \ --arn <app-arn> \ --region <region-name> \ --output text

输出示例

https://app-LGZEOZ2UY4NZ.mlflow.sagemaker.us-east-1.app.aws/auth?authToken=eyJhbGciOiJIUzI1NiJ9.eyJhdXRoVG9rZW5JZCI6IkxETVBPUyIsImZhc0NyZWRlbnRpYWxzIjoiQWdWNGhDM1VvZ0VYSUVsT2lZOVlLNmxjRHVxWm1BMnNhZ3JDWEd3aFpOSmdXbzBBWHdBQkFCVmhkM010WTNKNWNIUnZMWEIxWW14cFl5MXJaWGtBUkVFd09IQmtVbU5IUzJJMU1VTnVaVEl3UVhkUE5uVm9Ra2xHTkZsNVRqTTNXRVJuTTNsalowNHhRVFZvZERneVdrMWlkRlZXVWpGTWMyWlRUV1JQWmpSS2R6MDlBQUVBQjJGM2N5MXJiWE1BUzJGeWJqcGhkM002YTIxek9uVnpMV1ZoYzNRdE1Ub3pNVFF4TkRZek1EWTBPREk2YTJWNUx6Y3dOMkpoTmpjeExUUXpZamd0TkRFeU5DMWhaVFUzTFRrMFlqTXdZbUptT1RJNU13QzRBUUlCQUhnQjRVMDBTK3ErVE51d1gydlFlaGtxQnVneWQ3YnNrb0pWdWQ2NmZjVENVd0ZzRTV4VHRGVllHUXdxUWZoeXE2RkJBQUFBZmpCOEJna3Foa2lHOXcwQkJ3YWdiekJ0QWdFQU1HZ0dDU3FHU0liM0RRRUhBVEFlQmdsZ2hrZ0JaUU1FQVM0d0VRUU1yOEh4MXhwczFBbmEzL1JKQWdFUWdEdTI0K1M5c2VOUUNFV0hJRXJwdmYxa25MZTJteitlT29pTEZYNTJaeHZsY3AyZHFQL09tY3RJajFqTWFuRjMxZkJyY004MmpTWFVmUHRhTWdJQUFCQUE3L1pGT05DRi8rWnVPOVlCVnhoaVppSEFSLy8zR1I0TmR3QWVxcDdneHNkd2lwTDJsVWdhU3ZGNVRCbW9uMUJnLy8vLy93QUFBQUVBQUFBQUFBQUFBQUFBQUFFQUFBUTdBMHN6dUhGbEs1NHdZbmZmWEFlYkhlNmN5OWpYOGV3T2x1NWhzUWhGWFllRXNVaENaQlBXdlQrVWp5WFY0ZHZRNE8xVDJmNGdTRUFOMmtGSUx0YitQa0tmM0ZUQkJxUFNUQWZ3S1oyeHN6a1lDZXdwRlNpalFVTGtxemhXbXBVcmVDakJCOHNGT3hQL2hjK0JQalY3bUhOL29qcnVOejFhUHhjNSt6bHFuak9CMHljYy8zL2JuSHA3NVFjRE8xd2NMbFJBdU5KZ2RMNUJMOWw1YVVPM0FFMlhBYVF3YWY1bkpwTmZidHowWUtGaWZHMm94SDJSNUxWSjNkbG40aGVRbVk4OTZhdXdsellQV253N2lTTDkvTWNidDAzdVZGN0JpUnRwYmZMN09JQm8wZlpYSS9wK1pUNWVUS2wzM2tQajBIU3F6NisvamliY0FXMWV4VTE4N1QwNHpicTNRcFhYMkhqcDEvQnFnMVdabkZoaEwrekZIaUV0Qjd4U1RaZkZsS2xRUUhNK0ZkTDNkOHIyRWhCMjFya2FBUElIQVBFUk5Pd1lnNmFzM2pVaFRwZWtuZVhxSDl3QzAyWU15R0djaTVzUEx6ejh3ZTExZVduanVTai9DZVJpZFQ1akNRcjdGMUdKWjBVREZFbnpNakFuL3Y3ajA5c2FMczZnemlCc2FLQXZZOWpib0JEYkdKdGZ0N2JjVjl4eUp4amptaW56TGtoVG5pV2dxV3g5MFZPUHlWNWpGZVk1QTFrMmw3bDArUjZRTFNleHg4d1FrK0FqVGJuLzFsczNHUTBndUtESmZKTWVGUVczVEVrdkp5VlpjOC9xUlpIODhybEpKOW1FSVdOd1BMU21yY1l6TmZwVTlVOGdoUDBPUWZvQ3FvcW1WaUhEYldaT294bGpmb295cS8yTDFKNGM3NTJUaVpFd1hnaG9haFBYdGFjRnA2NTVUYjY5eGxTN25FaXZjTTlzUjdTT3REMEMrVHIyd0cxNEJ3Zm9NZTdKOFhQeVRtcmQ0QmNKOEdOYnVZTHNRNU9DcFlsV3pVNCtEcStEWUI4WHk1UWFzaDF0dzJ6dGVjVVQyc0hsZmwzUVlrQ0d3Z1hWam5Ia2hKVitFRDIrR3Fpc3BkYjRSTC83RytCRzRHTWNaUE02Q3VtTFJkMnZLbnozN3dUWkxwNzdZNTdMQlJySm9Tak9idWdNUWdhOElLNnpWL2VtcFlSbXJsVjZ5VjZ6S1h5aXFKWFk3TTBXd3dSRzd5Q0xYUFRtTGt3WGE5cXF4NkcvZDY1RS83V3RWMVUrNFIxMlZIUmVUMVJmeWw2SnBmL2FXWFVCbFQ2ampUR0M5TU1uTk5OVTQwZHRCUTArZ001S1d2WGhvMmdmbnhVcU1OdnFHblRFTWdZMG5ZL1FaM0RWNFozWUNqdkFOVWVsS1NCdkxFbnY4SEx0WU9uajIrTkRValZOV1h5T1c4WFowMFFWeXU0ZU5LaUpLQ1hJbnI1N3RrWHE3WXl3b0lZV0hKeHQwWis2MFNQMjBZZktYYlhHK1luZ3F6NjFqMkhIM1RQUmt6dW5rMkxLbzFnK1ZDZnhVWFByeFFmNUVyTm9aT2RFUHhjaklKZ1FxRzJ2eWJjbFRNZ0M5ZXc1QURVcE9KL1RrNCt2dkhJMDNjM1g0UXcrT3lmZHFUUzJWb3N4Y0hJdG5iSkZmdXliZi9lRlZWRlM2L3lURkRRckhtQ1RZYlB3VXlRNWZpR20zWkRhNDBQUTY1RGJSKzZSbzl0S3c0eWFlaXdDVzYwZzFiNkNjNUhnQm5GclMyYytFbkNEUFcrVXRXTEF1azlISXZ6QnR3MytuMjdRb1cvSWZmamJucjVCSXk3MDZRTVR4SzhuMHQ3WUZuMTBGTjVEWHZiZzBvTnZuUFFVYld1TjhFbE11NUdpenZxamJmeVZRWXdBSERCcDkzTENsUUJuTUdVQ01GWkNHUGRPazJ2ZzJoUmtxcWQ3SmtDaEpiTmszSVlyanBPL0h2Z2NZQ2RjK2daM3lGRjMyTllBMVRYN1FXUkJYZ0l4QU5xU21ZTHMyeU9uekRFenBtMUtnL0tvYmNqRTJvSDJkZHcxNnFqT0hRSkhkVWRhVzlZL0NQYTRTbWxpN2pPbGdRPT0iLCJjaXBoZXJUZXh0IjoiQVFJQkFIZ0I0VTAwUytxK1ROdXdYMnZRZWhrcUJ1Z3lkN2Jza29KVnVkNjZmY1RDVXdHeDExRlBFUG5xU1ZFbE5YVUNrQnRBQUFBQW9qQ0Jud1lKS29aSWh2Y05BUWNHb0lHUk1JR09BZ0VBTUlHSUJna3Foa2lHOXcwQkJ3RXdIZ1lKWUlaSUFXVURCQUV1TUJFRURHemdQNnJFSWNEb2dWSTl1d0lCRUlCYitXekkvbVpuZkdkTnNYV0VCM3Y4NDF1SVJUNjBLcmt2OTY2Q1JCYmdsdXo1N1lMTnZUTkk4MEdkVXdpYVA5NlZwK0VhL3R6aGgxbTl5dzhjcWdCYU1pOVQrTVQxdzdmZW5xaXFpUnRRMmhvN0tlS2NkMmNmK3YvOHVnPT0iLCJzdWIiOiJhcm46YXdzOnNhZ2VtYWtlcjp1cy1lYXN0LTE6MDYwNzk1OTE1MzUzOm1sZmxvdy1hcHAvYXBwLUxHWkVPWjJVWTROWiIsImlhdCI6MTc2NDM2NDYxNSwiZXhwIjoxNzY0MzY0OTE1fQ.HNvZOfqft4m7pUS52MlDwoi1BA8Vsj3cOfa_CvlT4uw

打开预签名 URL 并查看应用程序

单击

https://app-LGZEOZ2UY4NZ.mlflow.sagemaker.us-east-1.app.aws/auth?authToken=eyJhbGciOiJIUzI1NiJ9.eyJhdXRoVG9rZW5JZCI6IkxETVBPUyIsImZhc0NyZWRlbnRpYWxzIjoiQWdWNGhDM1VvZ0VYSUVsT2lZOVlLNmxjRHVxWm1BMnNhZ3JDWEd3aFpOSmdXbzBBWHdBQkFCVmhkM010WTNKNWNIUnZMWEIxWW14cFl5MXJaWGtBUkVFd09IQmtVbU5IUzJJMU1VTnVaVEl3UVhkUE5uVm9Ra2xHTkZsNVRqTTNXRVJuTTNsalowNHhRVFZvZERneVdrMWlkRlZXVWpGTWMyWlRUV1JQWmpSS2R6MDlBQUVBQjJGM2N5MXJiWE1BUzJGeWJqcGhkM002YTIxek9uVnpMV1ZoYzNRdE1Ub3pNVFF4TkRZek1EWTBPREk2YTJWNUx6Y3dOMkpoTmpjeExUUXpZamd0TkRFeU5DMWhaVFUzTFRrMFlqTXdZbUptT1RJNU13QzRBUUlCQUhnQjRVMDBTK3ErVE51d1gydlFlaGtxQnVneWQ3YnNrb0pWdWQ2NmZjVENVd0ZzRTV4VHRGVllHUXdxUWZoeXE2RkJBQUFBZmpCOEJna3Foa2lHOXcwQkJ3YWdiekJ0QWdFQU1HZ0dDU3FHU0liM0RRRUhBVEFlQmdsZ2hrZ0JaUU1FQVM0d0VRUU1yOEh4MXhwczFBbmEzL1JKQWdFUWdEdTI0K1M5c2VOUUNFV0hJRXJwdmYxa25MZTJteitlT29pTEZYNTJaeHZsY3AyZHFQL09tY3RJajFqTWFuRjMxZkJyY004MmpTWFVmUHRhTWdJQUFCQUE3L1pGT05DRi8rWnVPOVlCVnhoaVppSEFSLy8zR1I0TmR3QWVxcDdneHNkd2lwTDJsVWdhU3ZGNVRCbW9uMUJnLy8vLy93QUFBQUVBQUFBQUFBQUFBQUFBQUFFQUFBUTdBMHN6dUhGbEs1NHdZbmZmWEFlYkhlNmN5OWpYOGV3T2x1NWhzUWhGWFllRXNVaENaQlBXdlQrVWp5WFY0ZHZRNE8xVDJmNGdTRUFOMmtGSUx0YitQa0tmM0ZUQkJxUFNUQWZ3S1oyeHN6a1lDZXdwRlNpalFVTGtxemhXbXBVcmVDakJCOHNGT3hQL2hjK0JQalY3bUhOL29qcnVOejFhUHhjNSt6bHFuak9CMHljYy8zL2JuSHA3NVFjRE8xd2NMbFJBdU5KZ2RMNUJMOWw1YVVPM0FFMlhBYVF3YWY1bkpwTmZidHowWUtGaWZHMm94SDJSNUxWSjNkbG40aGVRbVk4OTZhdXdsellQV253N2lTTDkvTWNidDAzdVZGN0JpUnRwYmZMN09JQm8wZlpYSS9wK1pUNWVUS2wzM2tQajBIU3F6NisvamliY0FXMWV4VTE4N1QwNHpicTNRcFhYMkhqcDEvQnFnMVdabkZoaEwrekZIaUV0Qjd4U1RaZkZsS2xRUUhNK0ZkTDNkOHIyRWhCMjFya2FBUElIQVBFUk5Pd1lnNmFzM2pVaFRwZWtuZVhxSDl3QzAyWU15R0djaTVzUEx6ejh3ZTExZVduanVTai9DZVJpZFQ1akNRcjdGMUdKWjBVREZFbnpNakFuL3Y3ajA5c2FMczZnemlCc2FLQXZZOWpib0JEYkdKdGZ0N2JjVjl4eUp4amptaW56TGtoVG5pV2dxV3g5MFZPUHlWNWpGZVk1QTFrMmw3bDArUjZRTFNleHg4d1FrK0FqVGJuLzFsczNHUTBndUtESmZKTWVGUVczVEVrdkp5VlpjOC9xUlpIODhybEpKOW1FSVdOd1BMU21yY1l6TmZwVTlVOGdoUDBPUWZvQ3FvcW1WaUhEYldaT294bGpmb295cS8yTDFKNGM3NTJUaVpFd1hnaG9haFBYdGFjRnA2NTVUYjY5eGxTN25FaXZjTTlzUjdTT3REMEMrVHIyd0cxNEJ3Zm9NZTdKOFhQeVRtcmQ0QmNKOEdOYnVZTHNRNU9DcFlsV3pVNCtEcStEWUI4WHk1UWFzaDF0dzJ6dGVjVVQyc0hsZmwzUVlrQ0d3Z1hWam5Ia2hKVitFRDIrR3Fpc3BkYjRSTC83RytCRzRHTWNaUE02Q3VtTFJkMnZLbnozN3dUWkxwNzdZNTdMQlJySm9Tak9idWdNUWdhOElLNnpWL2VtcFlSbXJsVjZ5VjZ6S1h5aXFKWFk3TTBXd3dSRzd5Q0xYUFRtTGt3WGE5cXF4NkcvZDY1RS83V3RWMVUrNFIxMlZIUmVUMVJmeWw2SnBmL2FXWFVCbFQ2ampUR0M5TU1uTk5OVTQwZHRCUTArZ001S1d2WGhvMmdmbnhVcU1OdnFHblRFTWdZMG5ZL1FaM0RWNFozWUNqdkFOVWVsS1NCdkxFbnY4SEx0WU9uajIrTkRValZOV1h5T1c4WFowMFFWeXU0ZU5LaUpLQ1hJbnI1N3RrWHE3WXl3b0lZV0hKeHQwWis2MFNQMjBZZktYYlhHK1luZ3F6NjFqMkhIM1RQUmt6dW5rMkxLbzFnK1ZDZnhVWFByeFFmNUVyTm9aT2RFUHhjaklKZ1FxRzJ2eWJjbFRNZ0M5ZXc1QURVcE9KL1RrNCt2dkhJMDNjM1g0UXcrT3lmZHFUUzJWb3N4Y0hJdG5iSkZmdXliZi9lRlZWRlM2L3lURkRRckhtQ1RZYlB3VXlRNWZpR20zWkRhNDBQUTY1RGJSKzZSbzl0S3c0eWFlaXdDVzYwZzFiNkNjNUhnQm5GclMyYytFbkNEUFcrVXRXTEF1azlISXZ6QnR3MytuMjdRb1cvSWZmamJucjVCSXk3MDZRTVR4SzhuMHQ3WUZuMTBGTjVEWHZiZzBvTnZuUFFVYld1TjhFbE11NUdpenZxamJmeVZRWXdBSERCcDkzTENsUUJuTUdVQ01GWkNHUGRPazJ2ZzJoUmtxcWQ3SmtDaEpiTmszSVlyanBPL0h2Z2NZQ2RjK2daM3lGRjMyTllBMVRYN1FXUkJYZ0l4QU5xU21ZTHMyeU9uekRFenBtMUtnL0tvYmNqRTJvSDJkZHcxNnFqT0hRSkhkVWRhVzlZL0NQYTRTbWxpN2pPbGdRPT0iLCJjaXBoZXJUZXh0IjoiQVFJQkFIZ0I0VTAwUytxK1ROdXdYMnZRZWhrcUJ1Z3lkN2Jza29KVnVkNjZmY1RDVXdHeDExRlBFUG5xU1ZFbE5YVUNrQnRBQUFBQW9qQ0Jud1lKS29aSWh2Y05BUWNHb0lHUk1JR09BZ0VBTUlHSUJna3Foa2lHOXcwQkJ3RXdIZ1lKWUlaSUFXVURCQUV1TUJFRURHemdQNnJFSWNEb2dWSTl1d0lCRUlCYitXekkvbVpuZkdkTnNYV0VCM3Y4NDF1SVJUNjBLcmt2OTY2Q1JCYmdsdXo1N1lMTnZUTkk4MEdkVXdpYVA5NlZwK0VhL3R6aGgxbTl5dzhjcWdCYU1pOVQrTVQxdzdmZW5xaXFpUnRRMmhvN0tlS2NkMmNmK3YvOHVnPT0iLCJzdWIiOiJhcm46YXdzOnNhZ2VtYWtlcjp1cy1lYXN0LTE6MDYwNzk1OTE1MzUzOm1sZmxvdy1hcHAvYXBwLUxHWkVPWjJVWTROWiIsImlhdCI6MTc2NDM2NDYxNSwiZXhwIjoxNzY0MzY0OTE1fQ.HNvZOfqft4m7pUS52MlDwoi1BA8Vsj3cOfa_CvlT4uw

视图

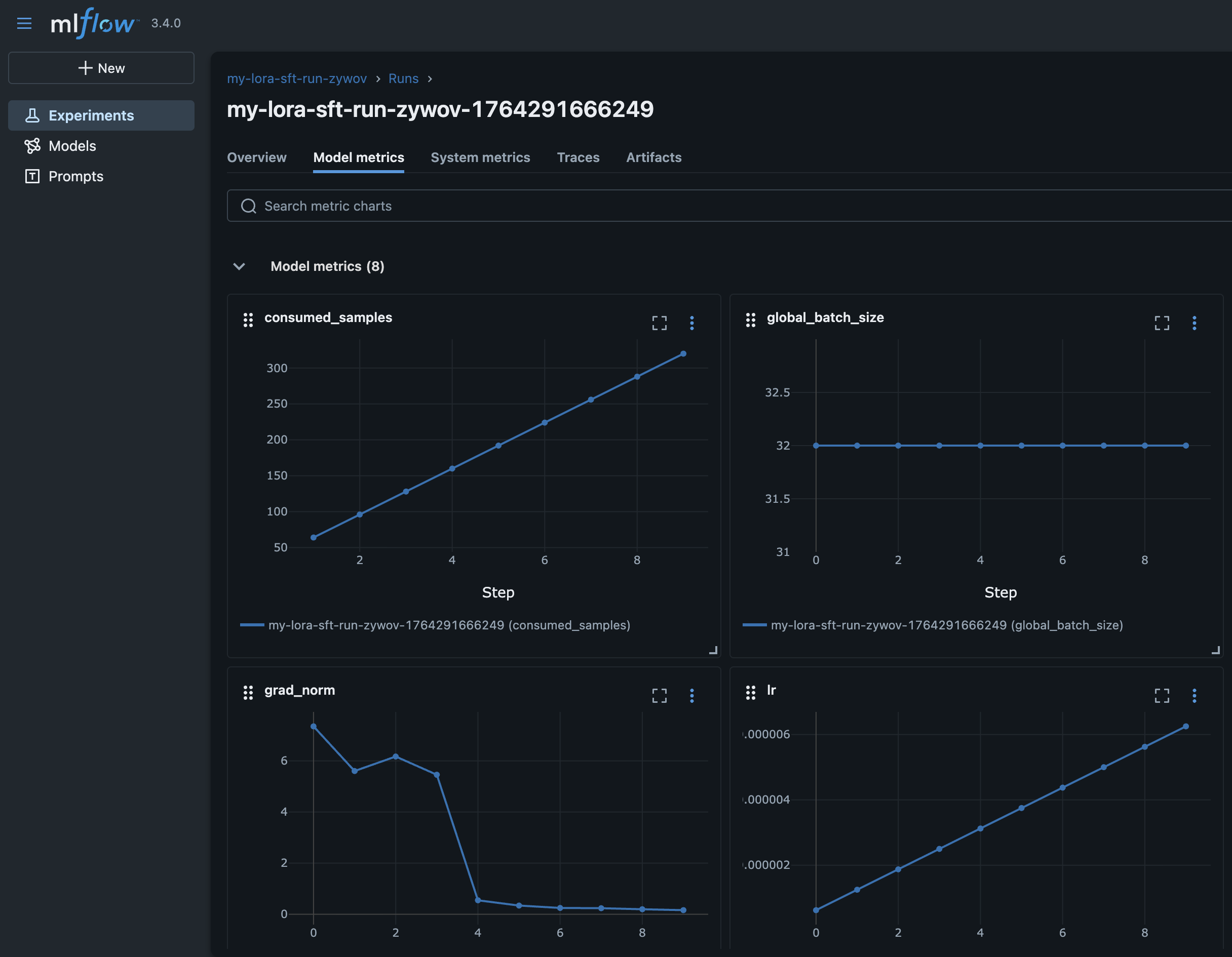

在食谱的运行块下传递到 HyperPod 食谱

指南

run mlflow_tracking_uri: arn:aws:sagemaker:us-east-1:111122223333:mlflow-app/app-LGZEOZ2UY4NZ

视图

使用 CloudWatch

您的日志可在包含其下 CloudWatch的 hyperpod 集群的Amazon账户中找到。要在浏览器中查看它们,请导航到您账户 CloudWatch 的主页并搜索您的集群名称。例如,如果您的集群被称为my-hyperpod-rig该群集,则日志组的前缀将为:

-

日志组:

/aws/sagemaker/Clusters/my-hyperpod-rig/{UUID} -

进入日志组后,您可以使用节点实例 ID 来查找您的特定日志,例如-

hyperpod-i-00b3d8a1bf25714e4。-

i-00b3d8a1bf25714e4此处表示您的训练作业正在运行的 Hyperpod 友好型计算机名称。回想一下在之前的命令kubectl get pods -o wide -w -n kubeflow | (head -n1 ; grep my-cpt-run)输出中,我们是如何捕获一个名为 NODE 的列的。 -

在本例中,“主” 节点运行是在 hyperpod 上运行的,因此我们将使用该字符串来选择要查看的日志组。

i-00b3d8a1bf25714e4选择上面写着的那个SagemakerHyperPodTrainingJob/rig-group/[NODE]

-

使用 CloudWatch 见解

如果您手边有工作名称并且不想完成上述所有步骤,则只需查询下方的所有日志/aws/sagemaker/Clusters/my-hyperpod-rig/{UUID}即可找到单个日志。

CPT:

fields @timestamp, @message, @logStream, @log | filter @message like /(?i)Starting CPT Job/ | sort @timestamp desc | limit 100

要完成任务,请替换Starting CPT Job为 CPT Job

completed

然后你可以点击结果并选择一个写着 “Epoch 0” 的结果,因为那将是你的主节点。

使用 AmazonAmazon CLI

您可以选择使用 Amazon CLI 跟踪日志。在此之前,请使用检查您的 aws cli 版本aws --version。还建议使用此实用程序脚本来帮助在终端中进行实时日志跟踪

对于 V1:

aws logs get-log-events \ --log-group-name /aws/sagemaker/YourLogGroupName \ --log-stream-name YourLogStream \ --start-from-head | jq -r '.events[].message'

对于 V2:

aws logs tail /aws/sagemaker/YourLogGroupName \ --log-stream-name YourLogStream \ --since 10m \ --follow

列出活跃职位

查看集群中运行的所有作业:

hyperpod list-jobs -n kubeflow

输出示例:

{ "jobs": [ { "Name": "test-run-nhgza", "Namespace": "kubeflow", "CreationTime": "2025-10-29T16:50:57Z", "State": "Running" } ] }

取消作业

随时停止正在运行的作业:

hyperpod cancel-job --job-name <job-name> -n kubeflow

查找你的职位名称

选项 1:根据你的食谱

任务名称在你的食谱run块中指定:

run: name: "my-test-run" # This is your job name model_type: "amazon.nova-micro-v1:0:128k" ...

选项 2:来自 list-jobs 命令

使用hyperpod list-jobs -n kubeflow并复制输出中的Name字段。

运行评估作业

使用评估方法评估经过训练的模型或基础模型。

先决条件

在运行评估任务之前,请确保您已经:

-

检查训练作业

manifest.json文件中的 Amazon S3 URI(适用于经过训练的模型) -

以正确格式上传到 Amazon S3 的评估数据集

-

输出 Amazon S3 评估结果路径

命令

运行以下命令启动评估作业:

hyperpod start-job -n kubeflow \ --recipe evaluation/nova/nova_2_0/nova_lite/nova_lite_2_0_p5_48xl_gpu_bring_your_own_dataset_eval \ --override-parameters '{ "instance_type": "p5.48xlarge", "container": "708977205387.dkr.ecr.us-east-1.amazonaws.com/nova-evaluation-repo:SM-HP-Eval-latest", "recipes.run.name": "<your-eval-job-name>", "recipes.run.model_name_or_path": "<checkpoint-s3-uri>", "recipes.run.output_s3_path": "s3://<your-bucket>/eval-results/", "recipes.run.data_s3_path": "s3://<your-bucket>/eval-data.jsonl" }'

参数描述:

-

recipes.run.name: 您的评估任务的唯一名称 -

recipes.run.model_name_or_path: Amazon S3 URI 来自manifest.json或基本模型路径(例如nova-micro/prod) -

recipes.run.output_s3_path: Amazon S3 评估结果存放地点 -

recipes.run.data_s3_path: 您的评估数据集的 Amazon S3 位置

小贴士:

-

特定型号的配方:每种型号尺寸(微型、精简版、专业版)都有自己的评估方案

-

基础模型评估:使用基础模型路径(例如

nova-micro/prod)而不是检查点 URIs 来评估基础模型

评估数据格式

输入格式 (JSONL):

{ "metadata": "{key:4, category:'apple'}", "system": "arithmetic-patterns, please answer the following with no other words: ", "query": "What is the next number in this series? 1, 2, 4, 8, 16, ?", "response": "32" }

输出格式:

{ "prompt": "[{'role': 'system', 'content': 'arithmetic-patterns, please answer the following with no other words: '}, {'role': 'user', 'content': 'What is the next number in this series? 1, 2, 4, 8, 16, ?'}]", "inference": "['32']", "gold": "32", "metadata": "{key:4, category:'apple'}" }

字段描述:

-

prompt: 发送给模型的格式化输入 -

inference: 模型生成的响应 -

gold: 来自输入数据集的预期正确答案 -

metadata: 从输入传递的可选元数据

常见问题

-

ModuleNotFoundError: No module named 'nemo_launcher',你可能需要根据安装位置nemo_launcher添加到你的 python 路hyperpod_cli径中。命令示例:export PYTHONPATH=<path_to_hyperpod_cli>/sagemaker-hyperpod-cli/src/hyperpod_cli/sagemaker_hyperpod_recipes/launcher/nemo/nemo_framework_launcher/launcher_scripts:$PYTHONPATH -

FileNotFoundError: [Errno 2] No such file or directory: '/tmp/hyperpod_current_context.json'表示你错过了运行 hyperpod 连接集群命令的机会。 -

如果您没有看到已安排的作业,请仔细检查您的 HyperPod CLI 输出中是否包含此部分,其中包含作业名称和其他元数据。如果没有,请运行以下命令重新安装 helm chart:

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 chmod 700 get_helm.sh ./get_helm.sh rm -f ./get_helm.sh