Managing user pool token expiration and caching

Your app must successfully complete one of the following requests each time you want to get a new JSON Web Token (JWT).

- Request a client credentials or authorization code grant from the Token endpoint.
- Request an implicit grant from your managed login pages.
- Authenticate a local user in an Amazon Cognito API request like InitiateAuth.

You can configure your user pool to set tokens to expire in minutes, hours, or days. To ensure the performance and availability of your app, use Amazon Cognito tokens for about 75% of the token lifetime, and only then retrieve new tokens. A cache solution that you build for your app keeps tokens available, and prevents the rejection of requests by Amazon Cognito when your request rate is too high. A client-side app must store tokens in a memory cache. A server-side app can add an encrypted cache mechanism to store tokens.
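As a minimal sketch of the 75% guideline, the following Python class keeps one token in memory and requests a new one only after the cached token has aged past a configurable fraction of its lifetime. The names `TokenCache`, `CachedToken`, and `fetch_token` are hypothetical. `fetch_token` stands in for whichever request your app uses to get tokens, and is assumed to return the token and its lifetime in seconds. The sketch isn't thread-safe and doesn't encrypt the stored token.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class CachedToken:
    value: str
    issued_at: float  # clock reading when the token was fetched
    lifetime: float   # token lifetime in seconds


class TokenCache:
    """In-memory cache that reuses a token for a fraction of its lifetime."""

    def __init__(
        self,
        fetch_token: Callable[[], Tuple[str, float]],  # hypothetical: returns (token, lifetime)
        reuse_fraction: float = 0.75,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch_token = fetch_token
        self._reuse_fraction = reuse_fraction
        self._clock = clock
        self._cached: Optional[CachedToken] = None

    def get(self) -> str:
        now = self._clock()
        cached = self._cached
        if cached is not None:
            age = now - cached.issued_at
            # Reuse the token only during the first part of its lifetime.
            if age < cached.lifetime * self._reuse_fraction:
                return cached.value
        # No token yet, or the cached token is past the reuse window.
        value, lifetime = self._fetch_token()
        self._cached = CachedToken(value, now, lifetime)
        return value
```

With a token lifetime of 3600 seconds and the default fraction, `get()` returns the cached token for the first 2700 seconds and then calls `fetch_token` again.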

When your user pool generates a high volume of user or machine-to-machine activity, you might encounter the limits that Amazon Cognito sets on the number of requests for tokens that you can make. To reduce the number of requests you make to Amazon Cognito endpoints, you can either securely store and reuse authentication data, or implement exponential backoff and retries.
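The retry option can be sketched as exponential backoff with random jitter. This is an illustration under stated assumptions, not a prescribed implementation. `ThrottledError` is a hypothetical exception, and your app is assumed to raise it when a token request is rejected for exceeding the request rate. The delay values are placeholders.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical stand-in for a rate-limited token request."""


def call_with_backoff(
    request: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,   # placeholder value, in seconds
    max_delay: float = 20.0,   # placeholder value, in seconds
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call request(), retrying throttled calls with growing, jittered delays."""
    for attempt in range(max_attempts):
        try:
            return request()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            # The delay ceiling doubles on each attempt, up to max_delay.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            # A random delay below the ceiling spreads out retries from many clients.
            sleep(random.uniform(0, ceiling))
    raise ValueError("max_attempts must be at least 1")
```

The `sleep` parameter is injectable only so that the function can be exercised without waiting.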

Authentication data comes from two classes of endpoints. Amazon Cognito OAuth 2.0 endpoints include the token endpoint, which services client credentials and managed login authorization code requests. Service endpoints answer user pools API requests like InitiateAuth and RespondToAuthChallenge. Each type of request has its own limit. For more information about limits, see Quotas in Amazon Cognito.

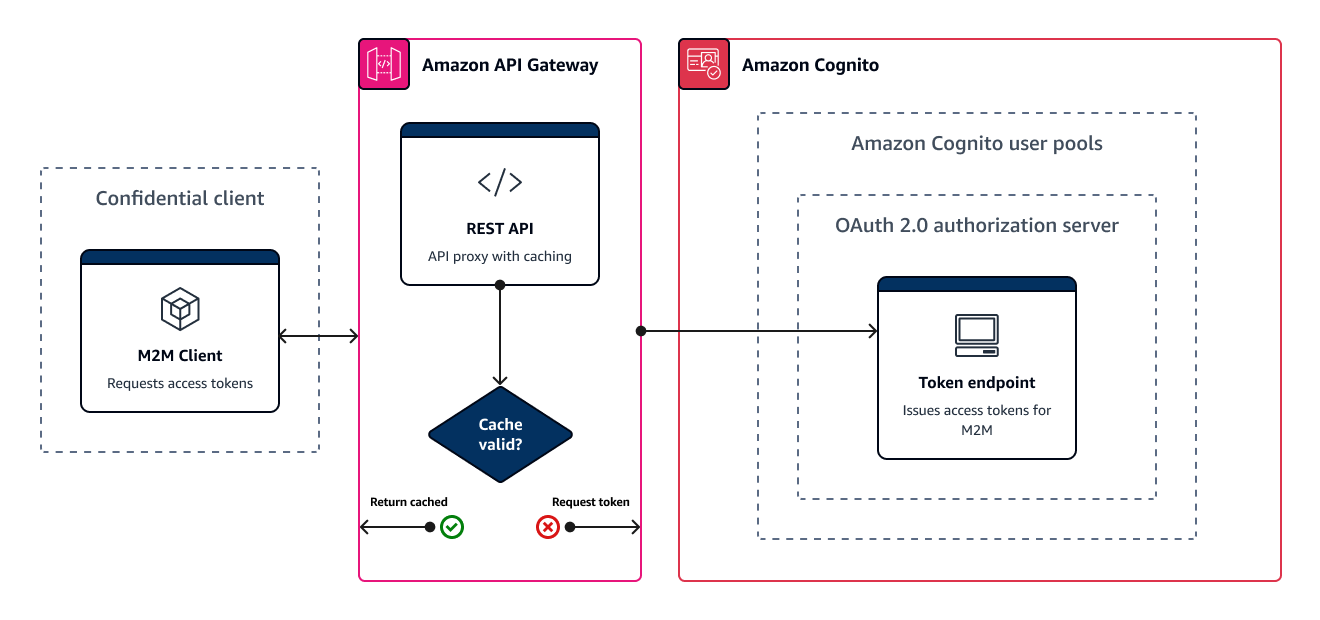

Caching machine-to-machine access tokens with Amazon API Gateway

With API Gateway token caching, your app can scale in response to events larger than the default request rate quota of Amazon Cognito OAuth endpoints.

You can cache the access tokens so that your app only requests a new access token if a cached token is expired. Otherwise, your caching endpoint returns a token from the cache. This prevents an additional call to an Amazon Cognito API endpoint. When you use Amazon API Gateway as a proxy to the Token endpoint, your API responds to the majority of requests that would otherwise contribute to your request quota, avoiding unsuccessful requests as a result of rate limiting.

The following API Gateway-based solution offers a low-latency, low-code/no-code implementation of token caching. API Gateway APIs are encrypted in transit, and optionally at rest. An API Gateway cache is ideal for the OAuth 2.0 client credentials grant.

In this solution, you define a cache in your API to store a separate access token for each combination of OAuth scopes and app client that you want to request in your app. When your app makes a request that matches the cache key, your API responds with an access token that Amazon Cognito issued to the first request that matched the cache key. When your cache key duration expires, your API forwards the request to your token endpoint and caches a new access token.

Note

Your cache key duration must be shorter than the access token duration of your app client.
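To make the caching behavior concrete, the following Python sketch models it in memory. It is not how API Gateway is implemented. `CachingTokenProxy` and `forward` are hypothetical names, and `forward` stands in for the call to your token endpoint. The model keeps one entry for each combination of scope and Authorization header, and forwards a request only when no unexpired entry exists.

```python
import time
from typing import Callable, Dict, Tuple

CacheKey = Tuple[str, str]  # (scope, Authorization header)


class CachingTokenProxy:
    """In-memory model of a proxy that caches one token per cache key."""

    def __init__(
        self,
        forward: Callable[[str, str], str],  # hypothetical call to the token endpoint
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._forward = forward
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[CacheKey, Tuple[float, str]] = {}

    def handle(self, scope: str, authorization: str) -> str:
        key = (scope, authorization)
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            # Cache hit: return the token issued to the first matching request.
            return entry[1]
        # Cache miss or expired entry: forward the request and cache the result.
        token = self._forward(scope, authorization)
        self._entries[key] = (now + self._ttl, token)
        return token
```

In this model, a `ttl_seconds` value that is shorter than the access token duration means that a cached token is always replaced before it expires.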

The cache key is a combination of the OAuth scopes that you request in the scope parameter in the request body and the Authorization header in the request. The Authorization header contains your app client ID and client secret. You don't need to implement additional logic in your app to implement this solution. You only need to update your configuration to change the path to your user pool token endpoint.
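The two parts of the cache key can be sketched in Python as follows. The Authorization value uses the HTTP Basic scheme, which is the Base64 encoding of the client ID and client secret joined by a colon. The function names are hypothetical. Sorting the scopes is an optional client-side convention, not a requirement from this guide. It assumes that the cache compares parameter values as literal strings, so that the same scopes in a different order would otherwise produce a different key.

```python
import base64
from typing import Iterable, Tuple


def basic_authorization(client_id: str, client_secret: str) -> str:
    """Build the Authorization header value for client_secret_basic."""
    credentials = f"{client_id}:{client_secret}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


def normalized_scope(scopes: Iterable[str]) -> str:
    """Join scopes in a stable order (assumption: keys are compared as strings)."""
    return " ".join(sorted(set(scopes)))


def cache_key(client_id: str, client_secret: str, scopes: Iterable[str]) -> Tuple[str, str]:
    """Return the (scope, Authorization) pair that identifies a cached token."""
    return (normalized_scope(scopes), basic_authorization(client_id, client_secret))
```

Two requests from the same app client for the same set of scopes produce the same pair. A different app client or a different set of scopes produces a different pair.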

You can also implement token caching with ElastiCache (Redis OSS). For fine-grained control with Amazon Identity and Access Management (IAM) policies, consider an Amazon DynamoDB cache.

Note

Caching in API Gateway is subject to additional cost. See pricing for more details.

To set up a caching proxy with API Gateway

- Open the API Gateway console and create a REST API.
- In Resources, create a POST method.
    - Choose the HTTP Integration type.
    - Select Use HTTP proxy integration.
    - Enter an Endpoint URL of https://<your user pool domain>/oauth2/token
- In Resources, configure the cache key.
    - Edit the Method request of your POST method.

      Note

      This method request validation is for use with client_secret_basic authorization in token requests, where the client secret is encoded in the Authorization request header. For validation of the JSON request body in client_secret_post authorization, create instead a data model that requires that client_secret be present. In this model, your Request validator should Validate body, query string parameters, and headers.
    - Configure the method Request validator to Validate query string parameters and headers. For more information about request validation, see Request validation in the Amazon API Gateway Developer Guide.
    - Set your scope parameter and Authorization header as your caching key.
        - Add a query string to URL query string parameters. Enter a query string Name of scope and select Required and Caching.
        - Add a header to HTTP request headers. Enter a request header Name of Authorization and select Required and Caching.
- In Stages, configure caching.
    - Choose the stage that you want to modify and choose Edit from Stage Details.
    - Under Additional settings, Cache settings, turn on the Provision API cache option.
    - Choose a Cache capacity. Higher cache capacity improves performance but comes at additional cost.
    - Clear the Require authorization check box. Select Continue.
    - API Gateway only applies cache policies to GET methods from the stage level. You must apply a cache policy override to your POST method.

      Expand the stage you configured and select the POST method. To create cache settings for the method, choose Create override.
    - Activate the Enable method cache option.
    - Enter a Cache time-to-live (TTL) of 3600 seconds. Choose Save.
- In Stages, note the Invoke URL.
- Update your app to POST token requests to the Invoke URL of your API instead of the /oauth2/token endpoint of your user pool.
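The last step can be sketched as a client credentials request sent to the API instead of the user pool domain. This is a sketch under assumptions, not a definitive client. It assumes that your app sends scope as a query string parameter to match the method request configuration in this procedure, and sends grant_type in the form-encoded body. The function names are hypothetical, and the invoke URL in the usage note is a placeholder.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_token_request(
    invoke_url: str, client_id: str, client_secret: str, scope: str
) -> urllib.request.Request:
    """Build a client credentials POST request for the caching API."""
    credentials = base64.b64encode(
        f"{client_id}:{client_secret}".encode("utf-8")
    ).decode("ascii")
    # Assumption: scope travels in the query string so it can act as a cache key.
    url = f"{invoke_url}?{urllib.parse.urlencode({'scope': scope})}"
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


def fetch_access_token(request: urllib.request.Request) -> str:
    """Send the request and return the access_token field of the JSON response."""
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload["access_token"]
```

For example, `fetch_access_token(build_token_request("https://example.execute-api.us-east-1.amazonaws.com/prod", client_id, client_secret, "api/read"))` sends the request to a placeholder invoke URL. Replace it with the Invoke URL that you noted in Stages.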