Managing Python dependencies in requirements.txt

This topic describes how to install and manage Python dependencies in a requirements.txt file for an Amazon Managed Workflows for Apache Airflow environment.

Testing DAGs using the Amazon MWAA CLI utility

- The command line interface (CLI) utility replicates an Amazon Managed Workflows for Apache Airflow environment locally.

- The CLI builds a Docker container image locally that’s similar to an Amazon MWAA production image. You can use this to run a local Apache Airflow environment to develop and test DAGs, custom plugins, and dependencies before deploying to Amazon MWAA.

- To run the CLI, refer to aws-mwaa-docker-images on GitHub.

Installing Python dependencies using PyPi.org Requirements File Format

The following section describes the different ways to install Python dependencies according to the PyPi.org Requirements File Format.

Option one: Python dependencies from the Python Package Index

The following section describes how to specify Python dependencies from the Python Package Index in a requirements.txt file.

Option two: Python wheels (.whl)

A Python wheel is a package format designed to ship libraries with compiled artifacts. There are several benefits to wheel packages as a method to install dependencies in Amazon MWAA:

- Faster installation – The WHL files are copied to the container as a single ZIP, and then installed locally, without having to download each one.

- Fewer conflicts – You can determine version compatibility for your packages in advance. As a result, there is no need for pip to recursively work out compatible versions.

- More resilience – With externally hosted libraries, downstream requirements can change, resulting in version incompatibility between containers on an Amazon MWAA environment. By not depending on an external source for dependencies, every container has the same libraries regardless of when each container is instantiated.

We recommend the following methods to install Python dependencies from a Python wheel archive (.whl) in your requirements.txt.

Methods

Using the plugins.zip file on an Amazon S3 bucket

The Apache Airflow scheduler, workers, and webserver (for Apache Airflow v2.2.2 and later) search for custom plugins during startup on the Amazon-managed Fargate container for your environment at /usr/local/airflow/plugins/. This process begins prior to Amazon MWAA's pip3 install -r requirements.txt for Python dependencies and Apache Airflow service startup.

A plugins.zip file can be used for any files that you don't want continuously changed during environment execution, or that you don't want users who write DAGs to be able to access. Examples include Python library wheel files, certificate PEM files, and configuration YAML files.

The following section describes how to install a wheel that's in the plugins.zip file on your Amazon S3 bucket.

- Download the necessary WHL files. You can use pip download with your existing requirements.txt on the Amazon MWAA aws-mwaa-docker-images or another Amazon Linux 2 container to resolve and download the necessary Python wheel files.

    pip3 download -r "$AIRFLOW_HOME/dags/requirements.txt" -d "$AIRFLOW_HOME/plugins"
    cd "$AIRFLOW_HOME/plugins"
    zip "$AIRFLOW_HOME/plugins.zip" *

- Specify the path in your requirements.txt. Specify the plugins directory at the top of your requirements.txt using --find-links and instruct pip not to install from other sources using --no-index, as listed in the following code:

    --find-links /usr/local/airflow/plugins
    --no-index

Example wheel in requirements.txt

The following example assumes you've uploaded the wheel in a plugins.zip file at the root of your Amazon S3 bucket. For example:

    --find-links /usr/local/airflow/plugins
    --no-index

    numpy

Amazon MWAA fetches the numpy-1.20.1-cp37-cp37m-manylinux1_x86_64.whl wheel from the plugins folder and installs it on your environment.
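
The packaging half of the download-and-zip step above can also be sketched in Python. This is a minimal illustration, assuming the wheels were already downloaded into a local directory. The function name build_plugins_zip and the constant REQUIREMENTS_HEADER are hypothetical and not part of Amazon MWAA. Unlike zip with a * glob, the sketch only adds .whl files.

```python
import zipfile
from pathlib import Path


def build_plugins_zip(wheel_dir, zip_path):
    """Zip every .whl file in wheel_dir at the archive root; return the names added."""
    wheel_dir = Path(wheel_dir)
    added = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for wheel in sorted(wheel_dir.glob("*.whl")):
            # arcname drops the directory part so each wheel sits at the top
            # level of the archive, as it does after `cd plugins; zip ... *`.
            archive.write(wheel, arcname=wheel.name)
            added.append(wheel.name)
    return added


# Lines to place at the top of requirements.txt so that pip only uses the
# wheels unpacked from plugins.zip (hypothetical helper constant).
REQUIREMENTS_HEADER = "--find-links /usr/local/airflow/plugins\n--no-index\n"
```

Keeping the wheels at the archive root matters because the --find-links path in requirements.txt points at the plugins folder itself, not at a subfolder.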

Using a WHL file hosted on a URL

The following section describes how to install a wheel that's hosted on a URL. The URL must either be publicly accessible, or accessible from within the custom Amazon VPC you specified for your Amazon MWAA environment.

- Provide a URL. Provide the URL to a wheel in your requirements.txt.

Example wheel archive on a public URL

The following example downloads a wheel from a public site.

    --find-links https://files.pythonhosted.org/packages/
    --no-index

Amazon MWAA fetches the wheel from the URL you specified and installs it on your environment.

Note

URLs are not accessible from private webservers installing requirements in Amazon MWAA v2.2.2 and later.

Creating WHL files from a DAG

If you have a private webserver using Apache Airflow v2.2.2 or later and you're unable to install requirements because your environment does not have access to external repositories, you can use the following DAG to take your existing Amazon MWAA requirements and package them on Amazon S3:

    from airflow import DAG
    from airflow.operators.bash import BashOperator
    from airflow.utils.dates import days_ago

    # Replace these with your own bucket name and object key.
    S3_BUCKET = 'my-s3-bucket'
    S3_KEY = 'backup/plugins_whl.zip'

    with DAG(dag_id="create_whl_file", schedule_interval=None, catchup=False, start_date=days_ago(1)) as dag:
        # Download the packages listed in the environment's requirements.txt,
        # zip them without directory paths (-j), and upload the archive to Amazon S3.
        cli_command = BashOperator(
            task_id="bash_command",
            bash_command=f"mkdir /tmp/whls;pip3 download -r /usr/local/airflow/requirements/requirements.txt -d /tmp/whls;zip -j /tmp/plugins.zip /tmp/whls/*;aws s3 cp /tmp/plugins.zip s3://{S3_BUCKET}/{S3_KEY}"
        )

After running the DAG, use this new file as your Amazon MWAA plugins.zip, optionally packaged with other plugins. Then, update your requirements.txt so that it begins with --find-links /usr/local/airflow/plugins and --no-index, without adding --constraint.

With this method, you can use the same libraries offline.
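
Because --no-index stops pip from falling back to an external index, it can help to confirm that every package you list has a wheel in the archive before you upload it. The following is a minimal sketch of such a check. The helper names are hypothetical, it compares project names only (not versions or platform tags), and it ignores any source archives that pip download might have fetched instead of wheels.

```python
import re
import zipfile


def normalize(name):
    """Normalize a project name as described in PEP 503."""
    return re.sub(r"[-_.]+", "-", name).lower()


def wheel_project(filename):
    """Return the normalized project name encoded in a wheel filename."""
    # Wheel filenames start with "<distribution>-<version>-..." and the
    # distribution component itself contains no hyphen.
    return normalize(filename.split("-", 1)[0])


def missing_wheels(requirement_names, zip_path):
    """List the requirement names that have no wheel inside the archive."""
    with zipfile.ZipFile(zip_path) as archive:
        available = {
            wheel_project(entry.rsplit("/", 1)[-1])
            for entry in archive.namelist()
            if entry.endswith(".whl")
        }
    return [name for name in requirement_names if normalize(name) not in available]
```

An empty result only means a wheel with a matching name exists. Whether that wheel is compatible with the environment's Python version is still decided by pip at install time.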

Option three: Python dependencies hosted on a private PyPi/PEP-503 Compliant Repo

The following section describes how to install an Apache Airflow extra that's hosted on a private URL with authentication.

- Add your user name and password as Apache Airflow configuration options. For example:

  - foo.user : YOUR_USER_NAME

  - foo.pass : YOUR_PASSWORD

- Create your requirements.txt file. Substitute the placeholders in the following example with your private URL, and the username and password you've added as Apache Airflow configuration options. For example:

    --index-url https://${AIRFLOW__FOO__USER}:${AIRFLOW__FOO__PASS}@my.privatepypi.com

- Add any additional libraries to your requirements.txt file. For example:

    --index-url https://${AIRFLOW__FOO__USER}:${AIRFLOW__FOO__PASS}@my.privatepypi.com
    my-private-package==1.2.3
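
The placeholders in the steps above follow the Apache Airflow convention of exposing a configuration option section.key as the environment variable AIRFLOW__SECTION__KEY. The sketch below shows that mapping and how the ${...} placeholders would expand. The function names and the sample credentials are hypothetical, and the expansion is done here with Python's string.Template purely for illustration rather than by pip itself.

```python
from string import Template


def option_to_env_var(option):
    """Map an Airflow configuration option such as 'foo.user' to its environment variable name."""
    section, key = option.split(".", 1)
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


def expand_index_url(line, env):
    """Substitute ${VAR} placeholders in a requirements.txt line using env."""
    # Template.substitute raises KeyError when a placeholder has no value,
    # which surfaces a missing configuration option early.
    return Template(line).substitute(env)


# Hypothetical values standing in for the foo.user and foo.pass options.
env = {
    option_to_env_var("foo.user"): "alice",
    option_to_env_var("foo.pass"): "s3cret",
}
line = "--index-url https://${AIRFLOW__FOO__USER}:${AIRFLOW__FOO__PASS}@my.privatepypi.com"
print(expand_index_url(line, env))
```

Characters such as @ or : inside a user name or password would likely need percent-encoding before they are embedded in the URL.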

Enabling logs on the Amazon MWAA console

The execution role for your Amazon MWAA environment needs permission to send logs to CloudWatch Logs. To update the permissions of an execution role, refer to Amazon MWAA execution role.

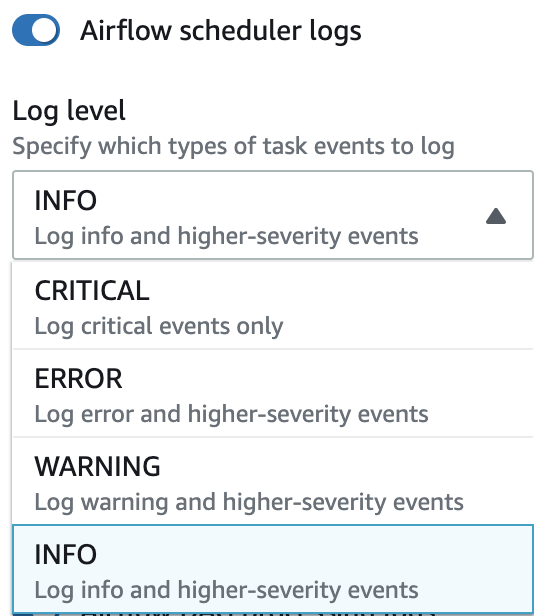

You can enable Apache Airflow logs at the INFO, WARNING, ERROR, or CRITICAL level. When you choose a log level, Amazon MWAA sends logs for that level and all higher levels of severity. For example, if you enable logs at the INFO level, Amazon MWAA sends INFO, WARNING, ERROR, and CRITICAL logs to CloudWatch Logs. We recommend enabling Apache Airflow logs at the INFO level for the scheduler so that you can access the logs received for the requirements.txt.
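
The "that level and all higher levels" rule mirrors how numeric severities work in Python's standard logging module. The following sketch is illustrative only. The function levels_sent is hypothetical and does not call any Amazon MWAA or CloudWatch API.

```python
import logging

# The four levels that can be enabled, in increasing order of severity.
LEVELS = ["INFO", "WARNING", "ERROR", "CRITICAL"]


def levels_sent(enabled_level):
    """Return the levels that are delivered when logging is enabled at enabled_level."""
    threshold = getattr(logging, enabled_level)
    # A level is included when its numeric severity is at least the threshold.
    return [name for name in LEVELS if getattr(logging, name) >= threshold]


print(levels_sent("WARNING"))
```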

Accessing logs on the CloudWatch Logs console

You can access Apache Airflow logs for the scheduler scheduling your workflows and parsing your dags folder. The following steps describe how to open the log group for the scheduler on the Amazon MWAA console, and access Apache Airflow logs on the CloudWatch Logs console.

To access logs for a requirements.txt

- Open the Environments page on the Amazon MWAA console.

- Choose an environment.

- Choose the Airflow scheduler log group on the Monitoring pane.

- Choose the requirements_install_ip log in Log streams.

- Refer to the list of packages that were installed on the environment at /usr/local/airflow/.local/bin. For example:

    Collecting appdirs==1.4.4 (from -r /usr/local/airflow/.local/bin (line 1))
    Downloading https://files.pythonhosted.org/packages/3b/00/2344469e2084fb28kjdsfiuyweb47389789vxbmnbjhsdgf5463acd6cf5e3db69324/appdirs-1.4.4-py2.py3-none-any.whl
    Collecting astroid==2.4.2 (from -r /usr/local/airflow/.local/bin (line 2))

- Review the list of packages and whether any of these encountered an error during installation. If something went wrong, you can get an error similar to the following:

    2021-03-05T14:34:42.731-07:00
    No matching distribution found for LibraryName==1.0.0 (from -r /usr/local/airflow/.local/bin (line 4))
    No matching distribution found for LibraryName==1.0.0 (from -r /usr/local/airflow/.local/bin (line 4))
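
If you copy the contents of the log stream into a text file, a short script can pull out the requirements that failed to resolve. This is a minimal sketch that assumes the error lines look like the example above. The function name failed_requirements is hypothetical.

```python
import re

# Matches pip's "No matching distribution found for <requirement>" message.
NO_MATCH = re.compile(r"No matching distribution found for (\S+)")


def failed_requirements(log_text):
    """Return the distinct requirements pip could not resolve, in first-seen order."""
    seen = []
    for match in NO_MATCH.finditer(log_text):
        requirement = match.group(1)
        if requirement not in seen:
            seen.append(requirement)
    return seen


sample = (
    "2021-03-05T14:34:42.731-07:00\n"
    "No matching distribution found for LibraryName==1.0.0 "
    "(from -r /usr/local/airflow/.local/bin (line 4))\n"
    "No matching distribution found for LibraryName==1.0.0 "
    "(from -r /usr/local/airflow/.local/bin (line 4))\n"
)
print(failed_requirements(sample))
```

Repeated messages for the same requirement are collapsed, so the result lists each failing entry once.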

Accessing errors in the Apache Airflow UI

You can also check your Apache Airflow UI to identify whether an error is related to another issue. The most common error you can encounter with Apache Airflow on Amazon MWAA is:

Broken DAG: No module named x

If you find this error in your Apache Airflow UI, you're likely missing a required dependency in your requirements.txt file.
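
One way to catch this before deploying is to check, in a local environment that has your requirements.txt installed, whether the modules your DAGs import can be found. The sketch below is an assumption about how such a check might look. The function name missing_modules is hypothetical, and it expects top-level import names, which can differ from the package names used in requirements.txt.

```python
import importlib.util


def missing_modules(module_names):
    """Return the top-level modules that cannot be found in the current environment."""
    # find_spec returns None when a top-level module is not installed.
    return [name for name in module_names if importlib.util.find_spec(name) is None]


# "json" ships with Python; the second name is deliberately made up.
print(missing_modules(["json", "a_module_that_is_not_installed_xyz"]))
```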

Log in to Apache Airflow

You need Apache Airflow UI access policy: AmazonMWAAWebServerAccess permissions for your Amazon Web Services account in Amazon Identity and Access Management (IAM) to access your Apache Airflow UI.

To access your Apache Airflow UI

- Open the Environments page on the Amazon MWAA console.

- Choose an environment.

- Choose Open Airflow UI.

Example requirements.txt scenarios

You can mix and match different formats in your requirements.txt. The following example uses a combination of the different ways to install extras.

Example Extras on PyPi.org and a public URL

You need to use the --index-url option when specifying packages from PyPi.org, in addition to packages on a public URL, such as custom PEP 503 compliant repo URLs.

    aws-batch == 0.6
    phoenix-letter >= 0.3
    --index-url http://dist.repoze.org/zope2/2.10/simple
    zopelib
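
When a requirements.txt mixes formats like this, it can be useful to see which lines are pip options and which are package specifiers. The following is a minimal sketch, not a full parser for the requirements file format. The function name split_requirements is hypothetical, and it only distinguishes lines that start with a hyphen from everything else.

```python
def split_requirements(text):
    """Separate pip option lines from package specifiers in requirements.txt content."""
    options, packages = [], []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and full-line comments
        # Option lines such as --index-url, --find-links, or --no-index start with "-".
        (options if line.startswith("-") else packages).append(line)
    return options, packages


example = """\
aws-batch == 0.6
phoenix-letter >= 0.3
--index-url http://dist.repoze.org/zope2/2.10/simple
zopelib
"""
options, packages = split_requirements(example)
print(options)
print(packages)
```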