Amazon MWAA execution role

An execution role is an Amazon Identity and Access Management (IAM) role with a permissions policy that grants Amazon Managed Workflows for Apache Airflow permission to invoke the resources of other Amazon services on your behalf. This can include resources such as your Amazon S3 bucket, Amazon-owned key, and CloudWatch Logs. Amazon MWAA environments need one execution role per environment. This topic describes how to use and configure the execution role for your environment to allow Amazon MWAA to access other Amazon resources used by your environment.

Execution role overview

Permission for Amazon MWAA to use other Amazon services used by your environment comes from the execution role. An Amazon MWAA execution role needs permission to the following Amazon services used by an environment:

- Amazon CloudWatch (CloudWatch) – to send Apache Airflow metrics and logs.
- Amazon Simple Storage Service (Amazon S3) – to parse your environment's DAG code and supporting files (such as a requirements.txt).
- Amazon Simple Queue Service (Amazon SQS) – to queue your environment's Apache Airflow tasks in an Amazon SQS queue owned by Amazon MWAA.
- Amazon Key Management Service (Amazon KMS) – for your environment's data encryption (using either an Amazon-owned key or your customer-managed key).

Note

If you have elected for Amazon MWAA to use an Amazon owned KMS key to encrypt your data, then you must define permissions in a policy attached to your Amazon MWAA execution role that grant access to arbitrary KMS keys stored outside of your account through Amazon SQS. The following two conditions are required in order for your environment's execution role to access arbitrary KMS keys:

- A KMS key in a third-party account needs to allow this cross-account access through its resource policy.
- Your DAG code needs to access an Amazon SQS queue that starts with airflow-celery- in the third-party account and uses the same KMS key for encryption.

To mitigate the risks associated with cross-account access to resources, we recommend reviewing the code placed in your DAGs to ensure that your workflows are not accessing arbitrary Amazon SQS queues outside your account. Furthermore, you can use a customer-managed KMS key stored in your own account to manage encryption on Amazon MWAA. This limits your environment's execution role to access only the KMS key in your account.

Keep in mind that after you choose an encryption option, you cannot change your selection for an existing environment.

An execution role also needs permission to the following IAM actions:

- airflow:PublishMetrics – to allow Amazon MWAA to monitor the health of an environment.
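As a minimal sketch, the airflow:PublishMetrics permission could be expressed as a single policy statement scoped to one environment. The helper name, the ARN layout, and the placeholder values (partition, Region, account ID, environment name) are assumptions for illustration, not values taken from this page.

```python
import json


def publish_metrics_statement(partition, region, account_id, environment_name):
    """Build a statement that allows airflow:PublishMetrics on one environment.

    The ARN layout is an assumption; confirm it against your environment's ARN.
    """
    return {
        "Effect": "Allow",
        "Action": "airflow:PublishMetrics",
        "Resource": (
            f"arn:{partition}:airflow:{region}:{account_id}"
            f":environment/{environment_name}"
        ),
    }


# Placeholder values only; replace them with your own.
statement = publish_metrics_statement(
    "aws-cn", "us-east-1", "111122223333", "MyAirflowEnvironment"
)
print(json.dumps(statement, indent=2))
```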

Permissions attached by default

You can use the default options on the Amazon MWAA console to create an execution role and an Amazon-owned key, then use the steps on this page to add permission policies to your execution role.

- When you choose the Create new role option on the console, Amazon MWAA attaches the minimal permissions needed by an environment to your execution role.
- In some cases, Amazon MWAA attaches the maximum permissions. For example, we recommend choosing the option on the Amazon MWAA console to create an execution role when you create an environment.

How to add permission to use other Amazon services

Amazon MWAA can't add or edit permission policies to an existing execution role after an environment is created. You must update your execution role with additional permission policies needed by your environment. For example, if your DAG requires access to Amazon Glue, Amazon MWAA can't automatically detect these permissions are required by your environment, or add the permissions to your execution role.

You can add permissions to an execution role in two ways:

- By modifying the JSON policy for your execution role inline. You can use the sample JSON policy documents on this page to either add to or replace the JSON policy of your execution role on the IAM console.
- By creating a JSON policy for an Amazon service and attaching it to your execution role. You can use the steps on this page to associate a new JSON policy document for an Amazon service to your execution role on the IAM console.

Assuming the execution role is already associated to your environment, Amazon MWAA can start using the added permission policies immediately. This also means if you remove any required permissions from an execution role, your DAGs might fail.

How to associate a new execution role

You can change the execution role for your environment at any time. If a new execution role is not already associated with your environment, use the steps on this page to create a new execution role policy, and associate the role to your environment.
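If you prefer to script the association rather than use the console, the following sketch shows one possible approach with the boto3 MWAA client's update_environment call. The function name and the example values are hypothetical, and the client is passed in as an argument so the snippet itself makes no network calls.

```python
def associate_execution_role(mwaa, environment_name, execution_role_arn):
    """Point an existing environment at a different execution role.

    `mwaa` is assumed to be a boto3 MWAA client, for example
    boto3.client("mwaa"). Returns the environment ARN from the response.
    """
    response = mwaa.update_environment(
        Name=environment_name,
        ExecutionRoleArn=execution_role_arn,
    )
    return response.get("Arn")


# Example usage (requires boto3 and credentials; values are placeholders):
#   import boto3
#   associate_execution_role(
#       boto3.client("mwaa"),
#       "MyAirflowEnvironment",
#       "arn:aws-cn:iam::111122223333:role/my-new-execution-role",
#   )
```

The role must already trust the Amazon MWAA service principals before the environment can use it.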

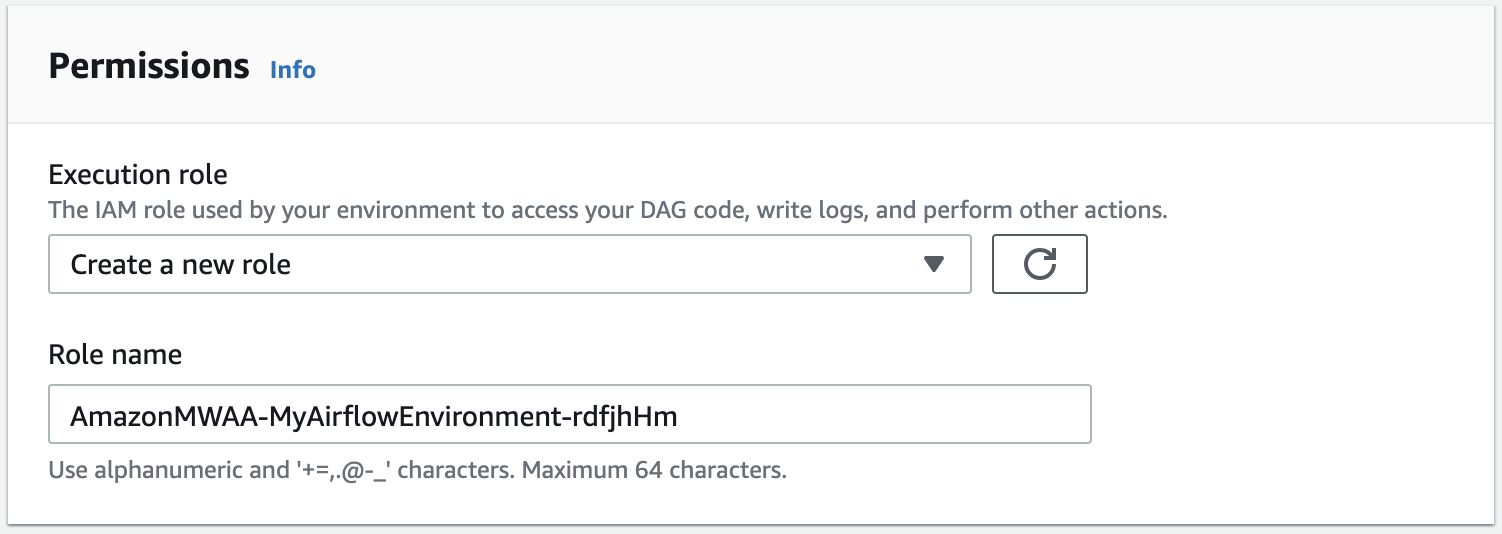

Create a new role

By default, Amazon MWAA creates an Amazon-owned key for data encryption and an execution role on your behalf. You can choose the default options on the Amazon MWAA console when you create an environment. The following image displays the default option to create an execution role for an environment.

Important

When you create a new execution role, do not reuse the name of a deleted execution role. Unique names can help prevent conflicts and ensure proper resource management.

Access and update an execution role policy

You can access the execution role for your environment on the Amazon MWAA console, and update the JSON policy for the role on the IAM console.

To update an execution role policy

- Open the Environments page on the Amazon MWAA console.
- Choose an environment.
- Choose the execution role on the Permissions pane to open the permissions page in IAM.
- Choose the execution role name to open the permissions policy.
- Choose Edit policy.
- Choose the JSON tab.
- Update your JSON policy.
- Choose Review policy.
- Choose Save changes.
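The console steps above can also be approximated in code. The sketch below assumes the role's permissions live in an inline policy (not a managed policy) and that `iam` is a boto3 IAM client; the function name is hypothetical. It reads the current document, appends one statement, and writes the document back.

```python
import json
from urllib.parse import unquote


def add_statement_to_inline_policy(iam, role_name, policy_name, statement):
    """Append one statement to an inline role policy and save the result."""
    response = iam.get_role_policy(RoleName=role_name, PolicyName=policy_name)
    document = response["PolicyDocument"]
    if isinstance(document, str):
        # Decode defensively in case the document arrives URL-encoded.
        document = json.loads(unquote(document))

    statements = document.get("Statement", [])
    if isinstance(statements, dict):
        # A policy may hold a single statement object instead of a list.
        statements = [statements]
    document["Statement"] = statements + [statement]

    iam.put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(document),
    )
    return document


# Example usage (requires boto3 and credentials; names are placeholders):
#   import boto3
#   add_statement_to_inline_policy(
#       boto3.client("iam"), "my-execution-role", "my-inline-policy",
#       {"Effect": "Allow", "Action": "cloudwatch:PutMetricData", "Resource": "*"},
#   )
```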

Attach a JSON policy to use other Amazon services

You can create a JSON policy for an Amazon service and attach it to your execution role. For example, you can attach the following JSON policy to grant read-only access to all resources in Amazon Secrets Manager.
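A read-only Secrets Manager policy of the kind described here might look like the following sketch. The action list is an assumption built from Secrets Manager's read-style actions; review it against your own requirements before use. The script prints JSON that you can paste into the IAM console.

```python
import json

# Assumed set of read-only Secrets Manager actions, applied to all secrets.
secrets_manager_read_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "secretsmanager:GetResourcePolicy",
                "secretsmanager:GetSecretValue",
                "secretsmanager:DescribeSecret",
                "secretsmanager:ListSecretVersionIds",
            ],
            "Resource": "*",
        },
        {
            "Effect": "Allow",
            "Action": "secretsmanager:ListSecrets",
            "Resource": "*",
        },
    ],
}

print(json.dumps(secrets_manager_read_policy, indent=2))
```

Narrowing "Resource" to specific secret ARNs is usually preferable when your DAGs only need a known set of secrets.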

To attach a policy to your execution role

- Open the Environments page on the Amazon MWAA console.
- Choose an environment.
- Choose your execution role on the Permissions pane.
- Choose Attach policies.
- Choose Create policy.
- Choose JSON.
- Paste the JSON policy.
- Choose Next: Tags, then Next: Review.
- Enter a descriptive name (such as SecretsManagerReadPolicy) and a description for the policy.
- Choose Create policy.
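The same procedure can be sketched with boto3, assuming `iam` is a boto3 IAM client. The function name is hypothetical; create_policy and attach_role_policy are the IAM calls that correspond to creating the policy and attaching it to the role.

```python
import json


def create_and_attach_policy(iam, role_name, policy_name, policy_document,
                             description=None):
    """Create a managed policy from a dict and attach it to a role."""
    kwargs = {
        "PolicyName": policy_name,
        "PolicyDocument": json.dumps(policy_document),
    }
    if description:
        kwargs["Description"] = description

    created = iam.create_policy(**kwargs)
    policy_arn = created["Policy"]["Arn"]
    iam.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
    return policy_arn


# Example usage (requires boto3 and credentials; names are placeholders):
#   import boto3
#   create_and_attach_policy(
#       boto3.client("iam"), "my-execution-role", "SecretsManagerReadPolicy",
#       {"Version": "2012-10-17", "Statement": [...]},
#       description="Read-only access to Secrets Manager",
#   )
```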

Grant access to Amazon S3 bucket with account-level public access block

You might want to block public access to all buckets in your account by using the PutPublicAccessBlock Amazon S3 operation.

When you block public access to all buckets in your account, your environment's execution role must include the s3:GetAccountPublicAccessBlock action in a permissions policy.

The following example demonstrates the policy you must attach to your execution role when blocking public access to all Amazon S3 buckets in your account.
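A minimal sketch of such a policy, built as a Python dict and printed as JSON, is shown here. Only the s3:GetAccountPublicAccessBlock action comes from the text above; the wildcard resource is an assumption based on the setting being account-wide.

```python
import json

# Allows the execution role to read the account-level public access block.
public_access_block_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "s3:GetAccountPublicAccessBlock",
            "Resource": "*",
        }
    ],
}

print(json.dumps(public_access_block_policy, indent=2))
```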

For more information about restricting access to your Amazon S3 buckets, refer to Blocking public access to your Amazon S3 storage in the Amazon Simple Storage Service User Guide.

Use Apache Airflow connections

You can also create an Apache Airflow connection and specify your execution role and its ARN in your Apache Airflow connection object. To learn more, refer to Managing connections to Apache Airflow.

Sample JSON policies for an execution role

You can use the two sample permission policies in this section to replace the permissions policy used for your existing execution role, or to create a new execution role and use it for your environment. These policies contain Resource ARN placeholders for Apache Airflow log groups, an Amazon S3 bucket, and an Amazon MWAA environment.

We recommend copying the example policy, replacing the sample ARNs or placeholders, then using the JSON policy to create or update an execution role.

Sample policy for a customer-managed key

The following example presents an execution role policy you can use for a customer-managed key.
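As a hedged sketch, an execution role policy for a customer-managed key could be assembled from the services listed in the overview (CloudWatch, Amazon S3, Amazon SQS, Amazon KMS, and airflow:PublishMetrics). The function name, parameters, ARN layouts, and action lists below are assumptions and may be incomplete; treat the output as a starting point to review, not as the official sample.

```python
import json


def build_execution_role_policy(partition, region, account_id, environment_name,
                                bucket_name, kms_key_arn,
                                dns_suffix="amazonaws.com"):
    """Assemble an illustrative execution role policy for a customer-managed key."""
    bucket_arn = f"arn:{partition}:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Environment health monitoring
                "Effect": "Allow",
                "Action": "airflow:PublishMetrics",
                "Resource": f"arn:{partition}:airflow:{region}:{account_id}"
                            f":environment/{environment_name}",
            },
            {   # Read DAG code and supporting files
                "Effect": "Allow",
                "Action": ["s3:GetObject*", "s3:GetBucket*", "s3:List*"],
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
            },
            {   # Apache Airflow log groups
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:CreateLogGroup",
                           "logs:PutLogEvents", "logs:GetLogEvents"],
                "Resource": f"arn:{partition}:logs:{region}:{account_id}"
                            f":log-group:airflow-{environment_name}-*",
            },
            {   # Apache Airflow metrics
                "Effect": "Allow",
                "Action": "cloudwatch:PutMetricData",
                "Resource": "*",
            },
            {   # Task queue owned by Amazon MWAA
                "Effect": "Allow",
                "Action": ["sqs:ChangeMessageVisibility", "sqs:DeleteMessage",
                           "sqs:GetQueueAttributes", "sqs:GetQueueUrl",
                           "sqs:ReceiveMessage", "sqs:SendMessage"],
                "Resource": f"arn:{partition}:sqs:{region}:*:airflow-celery-*",
            },
            {   # Customer-managed key, usable only through SQS and S3
                "Effect": "Allow",
                "Action": ["kms:Decrypt", "kms:DescribeKey",
                           "kms:GenerateDataKey*", "kms:Encrypt"],
                "Resource": kms_key_arn,
                "Condition": {"StringLike": {"kms:ViaService": [
                    f"sqs.{region}.{dns_suffix}",
                    f"s3.{region}.{dns_suffix}",
                ]}},
            },
        ],
    }


# Placeholder values only; replace every argument with your own.
policy = build_execution_role_policy(
    "aws-cn", "us-east-1", "111122223333", "MyAirflowEnvironment",
    "my-dag-bucket", "arn:aws-cn:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID",
)
print(json.dumps(policy, indent=2))
```

The `dns_suffix` parameter exists because service endpoint names differ between partitions; confirm the correct value for your Region.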

Next, you need to allow Amazon MWAA to assume this role to perform actions on your behalf. You can do this by adding the "airflow.amazonaws.com" and "airflow-env.amazonaws.com" service principals to the list of trusted entities for this execution role on the IAM console, or by placing these service principals in the assume role policy document for this execution role through the IAM create-role command.
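The assume role policy document described here can be sketched as follows. The two service principals come from the text above; the function names are hypothetical, and `iam` is assumed to be a boto3 IAM client passed in by the caller.

```python
import json


def build_trust_policy():
    """Trust policy that lets the Amazon MWAA service principals assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Service": [
                        "airflow.amazonaws.com",
                        "airflow-env.amazonaws.com",
                    ]
                },
                "Action": "sts:AssumeRole",
            }
        ],
    }


def create_execution_role(iam, role_name):
    """Create the role with the trust policy and return its ARN."""
    response = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(build_trust_policy()),
    )
    return response["Role"]["Arn"]


print(json.dumps(build_trust_policy(), indent=2))
```

The printed document can also be saved to a file and supplied to the create-role command as the assume role policy document.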

Then attach the following JSON policy to your customer-managed key. This policy uses the kms:EncryptionContext condition key prefix to permit access to your Apache Airflow log groups in CloudWatch Logs.

    {
      "Sid": "Allow logs access",
      "Effect": "Allow",
      "Principal": {
        "Service": "logs.us-east-1.amazonaws.com"
      },
      "Action": [
        "kms:Encrypt*",
        "kms:Decrypt*",
        "kms:ReEncrypt*",
        "kms:GenerateDataKey*",
        "kms:Describe*"
      ],
      "Resource": "*",
      "Condition": {
        "ArnLike": {
          "kms:EncryptionContext:aws:logs:arn": "arn:aws-cn:logs:us-east-1:111122223333:*"
        }
      }
    }

Sample policy for an Amazon-owned key

The following example presents an execution role policy you can use for an Amazon-owned key.
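With an Amazon-owned key, the main difference from the customer-managed case is the KMS statement. As described in the overview note, the role needs access to KMS keys outside your account through Amazon SQS. One way to express that, sketched below, is a statement that excludes keys in your own account with NotResource and restricts use to Amazon SQS with kms:ViaService. The function name, action list, and `dns_suffix` parameter are assumptions for illustration.

```python
import json


def owned_key_kms_statement(partition, region, account_id,
                            dns_suffix="amazonaws.com"):
    """KMS statement for keys outside the account, usable only through SQS."""
    return {
        "Effect": "Allow",
        "Action": ["kms:Decrypt", "kms:DescribeKey",
                   "kms:GenerateDataKey*", "kms:Encrypt"],
        # Matches every key except those owned by this account.
        "NotResource": f"arn:{partition}:kms:*:{account_id}:key/*",
        "Condition": {
            "StringLike": {"kms:ViaService": [f"sqs.{region}.{dns_suffix}"]}
        },
    }


# Placeholder values only; replace them with your own.
kms_statement = owned_key_kms_statement("aws-cn", "us-east-1", "111122223333")
print(json.dumps(kms_statement, indent=2))
```

The remaining statements (CloudWatch, Amazon S3, Amazon SQS, and airflow:PublishMetrics) would be the same as for a customer-managed key.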

What's next?

- Learn about the required permissions you and your Apache Airflow users need to access your environment in Accessing an Amazon MWAA environment.
- Learn about Using customer-managed keys for encryption.
- Explore more Customer-managed policy examples.