End of support notice: On October 7th, 2026, Amazon will discontinue support for Amazon IoT Greengrass Version 1. After October 7th, 2026, you will no longer be able to access the Amazon IoT Greengrass V1 resources. For more information, please visit Migrate from Amazon IoT Greengrass Version 1.

Optional: Configuring your device for ML qualification

IDT for Amazon IoT Greengrass provides machine learning (ML) qualification tests to validate that your devices can perform ML inference locally using cloud-trained models.

To run ML qualification tests, you must first configure your devices as described in Configure your device to run IDT tests. Then, follow the steps in this topic to install dependencies for the ML frameworks that you want to run.

IDT v3.1.0 or later is required to run tests for ML qualification.

Installing ML framework dependencies

All ML framework dependencies must be installed under the /usr/local/lib/python3.x/site-packages directory. To make sure they are installed under the correct directory, we recommend that you use sudo root permissions when installing the dependencies. Virtual environments are not supported for qualification tests.

Note

If you're testing Lambda functions that run with containerization (in Greengrass container mode), creating symlinks for Python libraries under /usr/local/lib/python3.x isn't supported. To avoid errors, you must install the dependencies under the correct directory.
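To confirm where a dependency was installed, a small check like the following can help. This is a minimal sketch: the helper name `is_system_site_package` and the path pattern are illustrative assumptions derived from the directory named above, not part of IDT.

```python
import re

# Pattern for /usr/local/lib/python3.<minor>/site-packages, the directory named
# in this topic. The regular expression itself is an assumption for illustration.
_SITE_PACKAGES = re.compile(r"^/usr/local/lib/python3\.\d+/site-packages(/|$)")


def is_system_site_package(module_file: str) -> bool:
    """Return True if module_file is located under the expected directory."""
    return bool(_SITE_PACKAGES.match(module_file))
```

For example, after installing NumPy you could run `import numpy` followed by `print(is_system_site_package(numpy.__file__))` with the same interpreter that the tests use. A result of `False` suggests the package was installed in a user or virtual environment directory instead.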

Follow the steps to install the dependencies for your target framework:

Install Apache MXNet dependencies

IDT qualification tests for this framework have the following dependencies:

- Python 3.6 or Python 3.7.

  Note

  If you're using Python 3.6, you must create a symbolic link from Python 3.7 to Python 3.6 binaries. This configures your device to meet the Python requirement for Amazon IoT Greengrass. For example:

      sudo ln -s path-to-python-3.6/python3.6 path-to-python-3.7/python3.7

- Apache MXNet v1.2.1 or later.

- NumPy. The version must be compatible with your MXNet version.
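The minimum MXNet version can be checked programmatically. The sketch below compares version strings numerically, so that 1.10.0 is correctly treated as newer than 1.2.1. The function names are hypothetical, and the sketch does not check NumPy compatibility, which depends on the MXNet release you install.

```python
import re


def version_tuple(version: str) -> tuple:
    """Convert a string such as '1.2.1' to a tuple of its leading integer parts."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            # Stop at the first non-numeric component, such as 'post1'.
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed: str, minimum: str = "1.2.1") -> bool:
    """Return True if the installed version is at least the minimum version."""
    return version_tuple(installed) >= version_tuple(minimum)
```

On a device, you might call `meets_minimum(mxnet.__version__)` after importing `mxnet`.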

Installing MXNet

Follow the instructions in the MXNet documentation to install MXNet.

Note

If Python 2.x and Python 3.x are both installed on your device, use Python 3.x in the commands that you run to install the dependencies.

Validating the MXNet installation

Choose one of the following options to validate the MXNet installation.

Option 1: SSH into your device and run scripts

- SSH into your device.

- Run the following scripts to verify that the dependencies are correctly installed.

      sudo python3.7 -c "import mxnet; print(mxnet.__version__)"
      sudo python3.7 -c "import numpy; print(numpy.__version__)"

  The output prints the version number and the script should exit without error.
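If you prefer a single script over separate one-liners, the same check can be sketched as follows. The function names are hypothetical. The sketch only catches `ImportError`, so other failures (for example, a missing shared library that raises a different exception) still surface as a traceback.

```python
import importlib


def installed_version(module_name: str):
    """Return the module's __version__, 'unknown' if it has none, or None if the import fails."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", "unknown")


def check_modules(module_names):
    """Map each module name to its reported version (None means not importable)."""
    return {name: installed_version(name) for name in module_names}
```

For the MXNet dependencies, you could run `print(check_modules(["mxnet", "numpy"]))` with `sudo python3.7`. The same helper works for the other frameworks in this topic by changing the module names.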

Option 2: Run the IDT dependency test

- Make sure that device.json is configured for ML qualification. For more information, see Configure device.json for ML qualification.

- Run the dependencies test for the framework.

      devicetester_[linux | mac | win_x86-64] run-suite --group-id mldependencies --test-id mxnet_dependency_check

  The test summary displays a PASSED result for mldependencies.

Install TensorFlow dependencies

IDT qualification tests for this framework have the following dependencies:

- Python 3.6 or Python 3.7.

  Note

  If you're using Python 3.6, you must create a symbolic link from Python 3.7 to Python 3.6 binaries. This configures your device to meet the Python requirement for Amazon IoT Greengrass. For example:

      sudo ln -s path-to-python-3.6/python3.6 path-to-python-3.7/python3.7

- TensorFlow 1.x.
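The symbolic link from the note can also be created from Python. This is a minimal sketch that mirrors the `ln -s` example: the function name is hypothetical, both paths are placeholders that you supply, and the script needs the same permissions that the `sudo ln -s` command would need.

```python
import os


def link_python37_to_python36(python36_path: str, python37_link: str) -> None:
    """Create python37_link as a symbolic link that points to python36_path."""
    if not os.path.exists(python36_path):
        raise FileNotFoundError(f"Python 3.6 binary not found: {python36_path}")
    # Refuse to replace an existing file or link, such as a real Python 3.7 binary.
    if os.path.lexists(python37_link):
        raise FileExistsError(f"Refusing to overwrite existing path: {python37_link}")
    os.symlink(python36_path, python37_link)
```

Unlike `ln -s`, the sketch checks that the Python 3.6 binary exists before it creates the link, which avoids leaving a dangling link on the device.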

Installing TensorFlow

Follow the instructions in the TensorFlow documentation to install TensorFlow 1.x with pip.

Note

If Python 2.x and Python 3.x are both installed on your device, use Python 3.x in the commands that you run to install the dependencies.

Validating the TensorFlow installation

Choose one of the following options to validate the TensorFlow installation.

Option 1: SSH into your device and run a script

- SSH into your device.

- Run the following script to verify that the dependency is correctly installed.

      sudo python3.7 -c "import tensorflow; print(tensorflow.__version__)"

  The output prints the version number and the script should exit without error.

Option 2: Run the IDT dependency test

- Make sure that device.json is configured for ML qualification. For more information, see Configure device.json for ML qualification.

- Run the dependencies test for the framework.

      devicetester_[linux | mac | win_x86-64] run-suite --group-id mldependencies --test-id tensorflow_dependency_check

  The test summary displays a PASSED result for mldependencies.

Install Amazon SageMaker AI Neo Deep Learning Runtime (DLR) dependencies

IDT qualification tests for this framework have the following dependencies:

- Python 3.6 or Python 3.7.

  Note

  If you're using Python 3.6, you must create a symbolic link from Python 3.7 to Python 3.6 binaries. This configures your device to meet the Python requirement for Amazon IoT Greengrass. For example:

      sudo ln -s path-to-python-3.6/python3.6 path-to-python-3.7/python3.7

- SageMaker AI Neo DLR.

- NumPy.

After you install the DLR test dependencies, you must compile the model.

Installing DLR

Follow the instructions in the DLR documentation to install the Neo DLR.

Note

If Python 2.x and Python 3.x are both installed on your device, use Python 3.x in the commands that you run to install the dependencies.

Validating the DLR installation

Choose one of the following options to validate the DLR installation.

Option 1: SSH into your device and run scripts

- SSH into your device.

- Run the following scripts to verify that the dependencies are correctly installed.

      sudo python3.7 -c "import dlr; print(dlr.__version__)"
      sudo python3.7 -c "import numpy; print(numpy.__version__)"

  The output prints the version number and the script should exit without error.

Option 2: Run the IDT dependency test

- Make sure that device.json is configured for ML qualification. For more information, see Configure device.json for ML qualification.

- Run the dependencies test for the framework.

      devicetester_[linux | mac | win_x86-64] run-suite --group-id mldependencies --test-id dlr_dependency_check

  The test summary displays a PASSED result for mldependencies.
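The three dependency tests differ only in the test ID, so the command line can be assembled by a small helper when you script the checks. The sketch below is illustrative: the function name is hypothetical, and the path to the IDT binary is passed in because it depends on your host operating system and installation.

```python
# Test IDs as they appear in the commands shown in this topic.
DEPENDENCY_TEST_IDS = {
    "mxnet": "mxnet_dependency_check",
    "tensorflow": "tensorflow_dependency_check",
    "dlr": "dlr_dependency_check",
}


def dependency_check_command(devicetester_binary: str, framework: str) -> list:
    """Build the argument list that runs the dependency test for one framework."""
    try:
        test_id = DEPENDENCY_TEST_IDS[framework.lower()]
    except KeyError:
        raise ValueError(f"Unsupported framework: {framework!r}") from None
    return [
        devicetester_binary,
        "run-suite",
        "--group-id", "mldependencies",
        "--test-id", test_id,
    ]
```

The returned list can be passed to `subprocess.run(command, check=True)` from the directory that contains the IDT binary.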

Compile the DLR model

You must compile the DLR model before you can use it for ML qualification tests. For steps, choose one of the following options.

Option 1: Use Amazon SageMaker AI to compile the model

Follow these steps to use SageMaker AI to compile the ML model provided by IDT. This model is pretrained with Apache MXNet.

-

Verify that your device type is supported by SageMaker AI. For more information, see the target device options the Amazon SageMaker AI API Reference. If your device type is not currently supported by SageMaker AI, follow the steps in Option 2: Use TVM to compile the DLR model.

Note

Running the DLR test with a model compiled by SageMaker AI might take 4 or 5 minutes. Don’t stop IDT during this time.

-

Download the tarball file that contains the uncompiled, pretrained MXNet model for DLR:

-

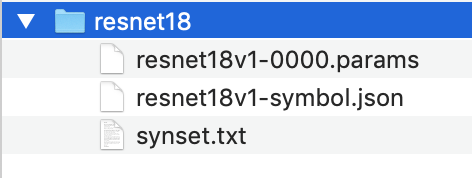

Decompress the tarball. This command generates the following directory structure.

-

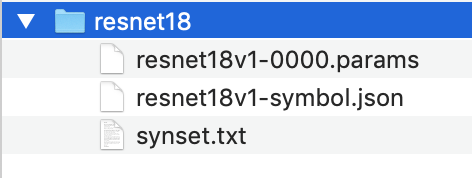

Move

synset.txtout of theresnet18directory. Make a note of the new location. You copy this file to compiled model directory later. -

Compress the contents of the

resnet18directory.tar cvfz model.tar.gz resnet18v1-symbol.json resnet18v1-0000.params -

Upload the compressed file to an Amazon S3 bucket in your Amazon Web Services account, and then follow the steps in Compile a Model (Console) to create a compilation job.

-

For Input configuration, use the following values:

-

For Data input configuration, enter

{"data": [1, 3, 224, 224]}. -

For Machine learning framework, choose

MXNet.

-

-

For Output configuration, use the following values:

-

For S3 Output location, enter the path to the Amazon S3 bucket or folder where you want to store the compiled model.

-

For Target device, choose your device type.

-

-

-

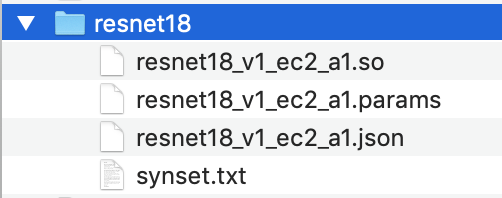

Download the compiled model from the output location you specified, and then unzip the file.

-

Copy

synset.txtinto the compiled model directory. -

Change the name of the compiled model directory to

resnet18.Your compiled model directory must have the following directory structure.
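The packaging step and the input configuration value can be reproduced in Python if you script the preparation. This is a sketch under stated assumptions: `package_model` is a hypothetical helper that mirrors the `tar cvfz` command above by storing both files at the root of the archive, and the comment on the input shape reflects the usual MXNet layout of batch size, channels, height, and width.

```python
import json
import os
import tarfile

# The two model files named in the tar command in the steps above.
MODEL_FILES = ("resnet18v1-symbol.json", "resnet18v1-0000.params")

# Value for the Data input configuration field:
# a batch of 1 image with 3 channels and 224 x 224 pixels.
DATA_INPUT_CONFIG = json.dumps({"data": [1, 3, 224, 224]})


def package_model(model_dir: str, archive_path: str = "model.tar.gz") -> str:
    """Write the model files to a gzip-compressed tarball with no parent directory."""
    with tarfile.open(archive_path, "w:gz") as archive:
        for name in MODEL_FILES:
            archive.add(os.path.join(model_dir, name), arcname=name)
    return archive_path
```

Calling `package_model("resnet18")` after you move synset.txt out of the directory produces a model.tar.gz in the current directory that is ready to upload.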

Option 2: Use TVM to compile the DLR model

Follow these steps to use TVM to compile the ML model provided by IDT. This model is pretrained with Apache MXNet, so you must install MXNet on the computer or device where you compile the model. To install MXNet, follow the instructions in the MXNet documentation.

Note

We recommend that you compile the model on your target device. This practice is optional, but it can help ensure compatibility and mitigate potential issues.

- Download the tarball file that contains the uncompiled, pretrained MXNet model for DLR:

- Decompress the tarball. This command generates the following directory structure.

- Follow the instructions in the TVM documentation to build and install TVM from source for your platform.

- After TVM is built, run the TVM compilation for the resnet18 model. The following steps are based on Quick Start Tutorial for Compiling Deep Learning Models in the TVM documentation.

  - Open the relay_quick_start.py file from the cloned TVM repository.

  - Update the code that defines a neural network in relay. You can use one of the following options:

    - Option 1: Use mxnet.gluon.model_zoo.vision.get_model to get the relay module and parameters:

          from mxnet.gluon.model_zoo.vision import get_model
          block = get_model('resnet18_v1', pretrained=True)
          mod, params = relay.frontend.from_mxnet(block, {"data": data_shape})

    - Option 2: From the uncompiled model that you downloaded in step 1, copy the following files to the same directory as the relay_quick_start.py file. These files contain the relay module and parameters.

      - resnet18v1-symbol.json

      - resnet18v1-0000.params

-

Update the code that saves and loads the compiled module

to use the following code. from tvm.contrib import util path_lib = "deploy_lib.so" # Export the model library based on your device architecture lib.export_library("deploy_lib.so", cc="aarch64-linux-gnu-g++") with open("deploy_graph.json", "w") as fo: fo.write(graph) with open("deploy_param.params", "wb") as fo: fo.write(relay.save_param_dict(params)) -

Build the model:

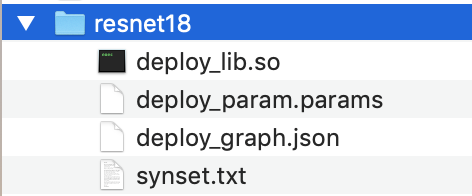

python3 tutorials/relay_quick_start.py --build-dir ./modelThis command generates the following files.

-

deploy_graph.json -

deploy_lib.so -

deploy_param.params

-

-

- Copy the generated model files into a directory named resnet18. This is your compiled model directory.

- Copy the compiled model directory to your host computer. Then copy synset.txt from the uncompiled model that you downloaded in step 1 into the compiled model directory.

  Your compiled model directory must have the following directory structure.
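Before you run the tests, you can confirm that the compiled model directory is complete. The sketch below assumes that the directory should contain the three files generated by the TVM build plus synset.txt, as described in the preceding steps. The function name is hypothetical, and a model compiled by SageMaker AI might use different file names.

```python
import os

# Files expected after the TVM steps above (the generated files plus synset.txt).
EXPECTED_FILES = (
    "deploy_graph.json",
    "deploy_lib.so",
    "deploy_param.params",
    "synset.txt",
)


def missing_model_files(model_dir: str) -> list:
    """Return the expected files that are not present in model_dir."""
    return [
        name
        for name in EXPECTED_FILES
        if not os.path.isfile(os.path.join(model_dir, name))
    ]
```

An empty list from `missing_model_files("resnet18")` indicates that all four files are in place.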

Next, configure your Amazon credentials and device.json file.